Key takeaways

- Best of both worlds: Databricks has become the data platform of choice in many organizations, and Power BI is a meaningful way to visualize and report on Databricks data.

- There are many options for integrating Databricks with Power BI: Multiple integration approaches exist between Databricks and Power BI, each tailored to different scenarios, skill levels, and organizational needs. These include options from within Databricks itself to ways to connect to Databricks from Power BI.

- Tabular Editor helps facilitate Databricks integration with Power BI: Tabular Editor enables DevOps workflows, version control, and team collaboration for complex models built on Databricks data. You can also use Tabular Editor to automatically convert metric views to Power BI semantic models.

This summary is produced by the author, and not by AI.

NOTE

Update January 2026: As of January 2026, Tabular Editor now has a feature called the Semantic Bridge which facilitates automated translation of Databricks metric views to Power BI semantic models. You can read more about this feature at the end of this article, or in our release blog and documentation.

Integrating Databricks and Power BI

Databricks has become a popular data platform that many organizations adopt. One of the more meaningful ways to leverage the data in Databricks is through visualization and reporting in Power BI. But how can we do this most effectively?

Integration between Databricks and Power BI has significantly evolved over time. As a result, multiple integration patterns have emerged, each tailored to potentially different scenarios and use cases. This article provides an overview of some options to integrate Power BI with Databricks, with focus on building robust semantic models directly on the Databricks Lakehouse that you can use for reporting, dashboards, and querying data with AI experiences.

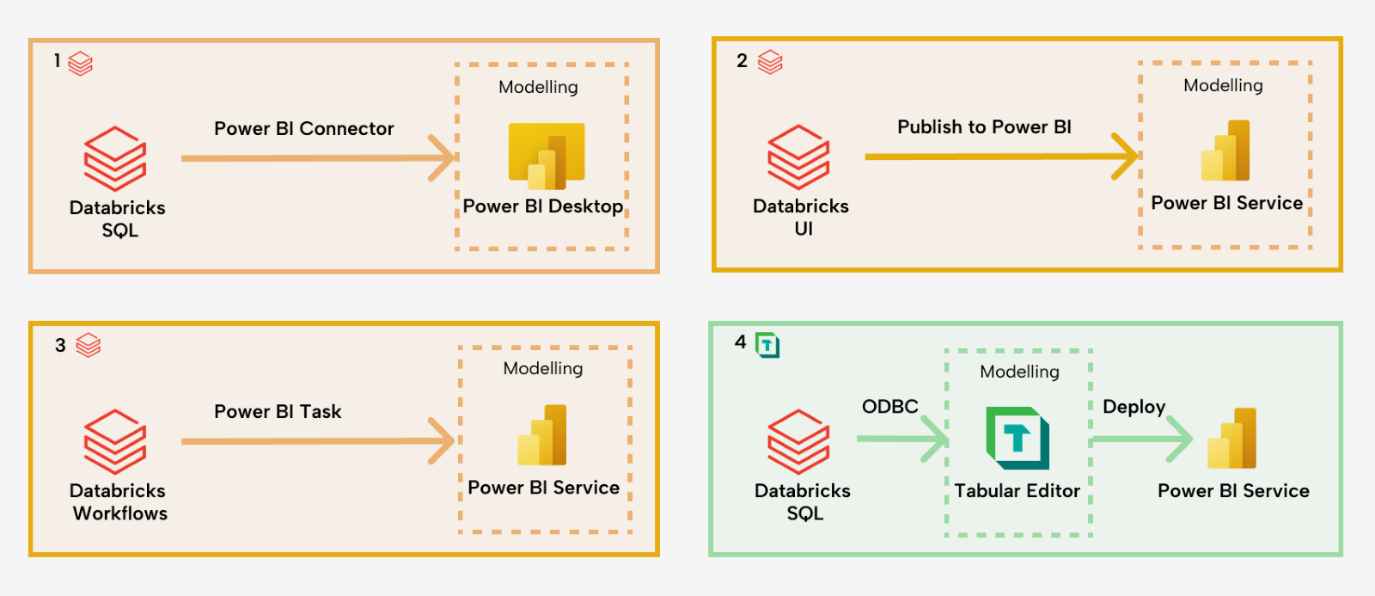

The diagram shows the following approaches:

- Databricks SQL and Power BI Desktop.

- Publish to Power BI Service from Databricks, directly.

- Power BI task in Databricks workflows.

- The Databricks connector in Tabular Editor.

- Metric views in Databricks converted to Power BI semantic models by Tabular Editor.

We explain these approaches in the rest of the article.

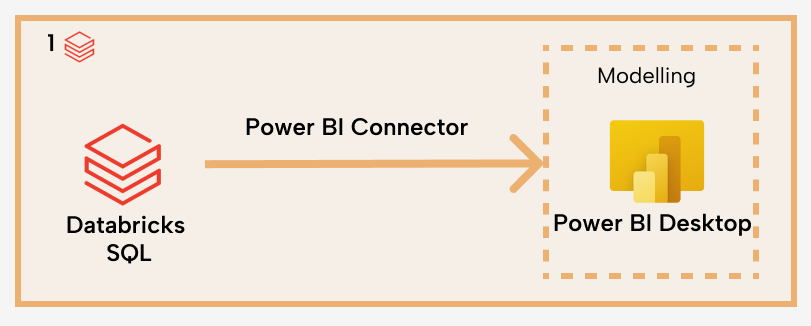

Option 1: Databricks SQL + Power BI Desktop

Most users begin their Power BI development journey with Power BI Desktop, which remains the most common starting point for building semantic models. A few years ago, Databricks introduced Databricks SQL that is designated to run BI workloads. If you're still using interactive clusters to connect Power BI to Databricks, switching to Databricks SQL warehouses can offer significant performance gains.

This pattern is a great starting point because Power BI Desktop is easy to use and widely adopted, and Databricks SQL warehouses provide scalability, and are optimized for BI use cases.

Despite its popularity, this approach has a few limitations: Firstly, except for configuring incremental refresh, Power BI Desktop does not support custom partitions in semantic models. Custom partitions are a technique for dividing tables into smaller, more manageable segments. This can be a limitation for large models, and it has several benefits, such as:

- Faster refreshes: Partitioning can let you refresh multiple parts of a table at the same time. This allows parallel refresh (e.g., configuring MaxParallelismPerQuery), which can drastically reduce refresh duration.

- Better resource management: You can leverage partitions for more efficient queries and manage these partitions separately, refreshing only the partitions that you need to, rather than the entire table.

It is important not to confuse the partition in Databricks vs the partitioning in Power BI. Partitioning in Power BI happens in the semantic model, while partitioning in Databricks happens in the Lakehouse. This is partitioning Delta tables, the need of which has been replaced by liquid clustering. Liquid clustering can boost Power BI query performance when the cluster keys are common query predicates. Liquid clustering is to Databricks as v-ordering is to Fabric.

In summary, approach one involves creating and managing your semantic model from Power BI Desktop. While straightforward to get started, there are several limitations.Power BI Desktop is not available for macOS, excluding Mac users from semantic model development. Furthermore, to unlock other advanced modelling capabilities, tools like Tabular Editor are needed (to be covered later in this article).

Pros:

- Easy to get started with Power BI desktop, so adoption threshold is low. This pattern can serve as a starting point for your semantic modelling journey.

Cons:

- No custom partitions, not suitable for partitioning use cases beyond simply time-based incremental refresh. This is especially important for developers working on large semantic models to consider.

- Mac users will need to do modelling via a Windows virtual machine to use Power BI Desktop.

- Can be limited and/or slower for complex modelling tasks, so this option is not suitable for experienced developers, or those who are looking for automation and advanced modelling features.

Best for:

Desktop developers with simple/moderate modelling needs and no requirement for advanced partitioning or automation.

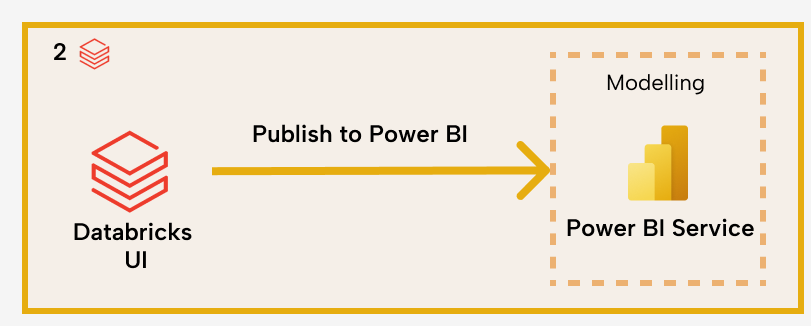

Option 2: Publish to Power BI Service from Databricks, directly

Databricks introduced Publish to Power BI Service last year – a web-based integration that connects a Databricks workspace to Power BI using Microsoft Entra ID. This feature allows users to publish a table or an entire schema directly from Databricks to a Power BI workspace, enabling quick data access in Power BI without needing Power BI Desktop.

All modelling is performed in the Power BI Service web UI, which makes it accessible to Mac users and ideal for ad hoc data exploration. However, this feature is best suited for lightweight scenarios, as it currently lacks API support, which means you cannot use this functionality by calling a REST API endpoint. In addition, the modelling capabilities are limited compared to what’s available in Power BI Desktop or external tools like Tabular available in Power BI Desktop or external tools like Tabular Editor.

Pros:

- Click and drop, easy to get started with Databricks UI and Power BI Web UI. Once the connectivity between the two services is configured, the deployment only takes a few clicks.

- Since the solution is web-based, Mac users have the same user experience as Windows users.

- Good for data exploration of Databricks data in Power BI. If your use case is simply bringing some data from Databricks into Power BI and doing some quick ad hoc analysis, this is a great option.

Cons:

- Modelling capabilities are very limited since Power BI Web UI has limited modelling capabilities. Thus, this is not suitable for use cases that requirements more than basic modelling tasks.

- No API support means operationalization of data modelling done through this feature is not possible. As a result, this is meant more for one-off exploration than for production data pipelines.

Best for:

SQL analysts doing ad hoc data exploration

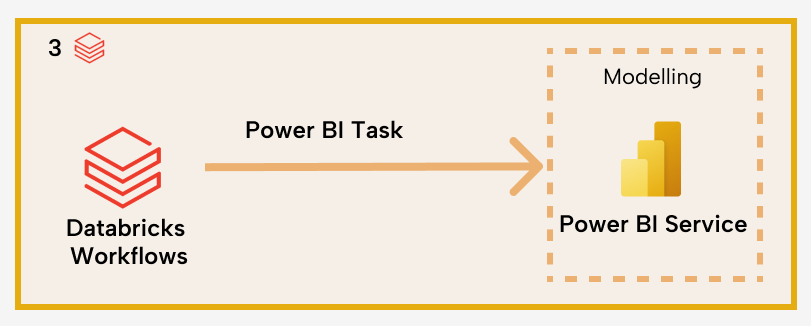

Option 3: Power BI task in Databricks workflows

Announced in Q1 2025, the Power BI task in Databricks Workflows is an evolution of the "Publish to Power BI Service" feature. This enhancement allows Power BI to be configured as a task within Databricks workflows, enabling users to publish, update, and refresh Power BI semantic models in a fully orchestrated and automated way.

The feature supports DirectQuery, Import, and Composite modes, and is available with Databricks Jobs API and Databricks Asset Bundles, making it well-suited for DevOps and CI/CD pipelines. While powerful for automation, it’s important to note that the feature is still in preview and does not include modelling capabilities—modelling must be performed in the Power BI Service Web UI, similar to the previous Publish to Power BI feature.

Pros:

- Databricks Workflow built-in feature that comes with Job API and DABs support, which means you can extend your DevOps pipeline from just data engineering in Power BI to include the publishing of semantic model in Power BI

- Possible to chain ingestion, data engineer, and publishing Power BI semantic models in a single pipeline in an orchestrated manner.

Cons:

- Like the previous pattern, modelling capabilities are very limited given Power BI Web UI’s lack of modelling capabilities. This is not suitable for developers that need to do some development on the semantic modellings beyond the basic tasks.

- This feature was announced in FabCon 2025, still in Public Preview. Organizations that cannot adopt preview features based on company policy will need to wait until it goes to GA.

Best for:

Data engineers owning semantic layer with very simple modelling needs.

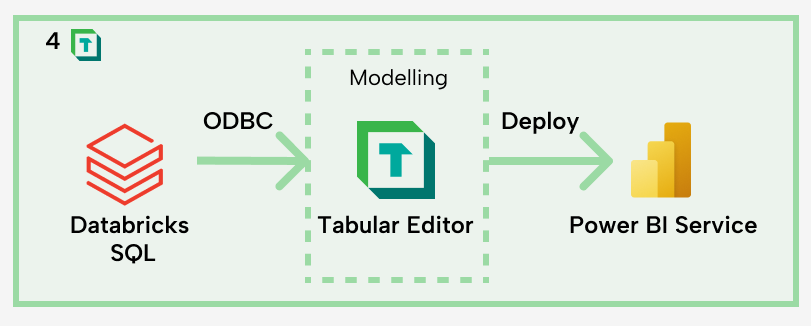

Option 4: Databricks connector in Tabular Editor

This pattern stands apart from the others by introducing an external tool—Tabular Editor—into the architecture. Tabular Editor serves as a powerful bridge between Databricks and Power BI, enabling both advanced semantic modelling and DevOps workflows. With a native Databricks connector, Tabular Editor supports complex modelling scenarios and full automation of tasks such as deployment, version control, and scripting.

This approach is ideal for teams looking to optimize and automate the full lifecycle of Power BI semantic models. However, it introduces additional tooling complexity, and since Tabular Editor is Windows-based, it excludes Mac users like Power BI Desktop (discussed earlier in approach one).

NOTE

To dive deeper into modelling and automation features, visit Tabular Editor Learn. For DevOps integration patterns between Databricks and Power BI using Tabular Editor, check out the recording of the session by Liping Huang and Marius Panga at SQLBits 2025: "Bridging DevOps Across Power BI and Databricks with Tabular Editor".

Pros:

- Offers more advanced features for managing complex models, which means you can build better models faster using Tabular Editor.

- Advanced scripting and task automation for data modelling, which can help you improve productivity when working with semantic models.

- Enables version control and parallel development for deployment, which makes team collaboration possible when working on the same semantic models.

Cons:

- Requires some familiarity with Tabular Editor and Power BI modelling concepts. If you are just getting started with Power BI and want to learn the basic of Tabular Editor, can check out this beginner course from Tabular Editor Learn.

- Requires setting up Tabular Editor and introducing one more component in the End-to-End Development. More components in the End-to-End pipeline means more complexity to manage, and you will need to set up the DevOps pipeline properly to orchstrate the End-to-End process.

Best for:

Advanced modellers who have needs for end-to-end DevOps, team collaboration, model optimization, and/or batch task automation.

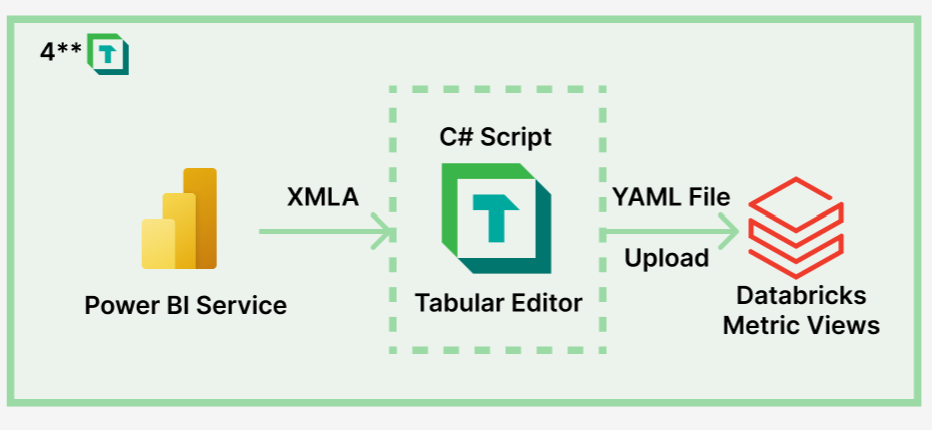

Option 5: Converting Unity Catalog Metric Views to Power BI semantic models by using Tabular Editor

Databricks introduced Unity Catalog Metric Views in 2025, letting users build semantic layers in Databricks using YAML definitions. In January 2026, Tabular Editor 3 released Semantic Bridge (Available in Enterprise Edition), which allows users translate Metric Views defined in YAML files into semantic model objects in Tabular Editor. From there, you can further develop the model, test and validate and then deploy the semantic model to Power BI.

In addition, if you have the need to reuse business logic defined in Power BI semantic models in Databricks in ML/AI pipelines or other use cases, you can use Semantic Bridge to write changes in Power BI semantic models back to the Metric Views YAML definition files using C# scripts, then either manually or programmatically upload the YAML file to Databricks to update Metric Views.

The introduction of Semantic Bridge is attended to address the lack of native integration between Unity Catalog Metric Views and Power BI.

Pros:

- Allows you to centralize business logic in metric views, while still leveraging the semantic model and reporting features of Power BI. Metric views can also be programmatically modified using C# scripts in Tabular Editor.

- Helps avoid manually building both metric views and Power BI semantic models with duplicated logic.

- Helps you translate metric views to semantic models for scenarios like migration.

Cons:

- At the time of the release in January 2026, Semantic Bridge is an MVP and has certain limitations, such as the translation (both ways) happens through the YAML definition file instead of Metric Views in Databricks instance directly.

- Not all semantic model objects or calculations are automatically translated, so additional effort by developers or AI agents might be required.

For full limitations, and details on how Semantic Bridge works, please see our release blog and documentation.

Further reading

- Tabular Editor x Databricks (Part 1) - This article introduces the blog series and walks through the setup requirements for working with Tabular Editor and Databricks. Read this first to get oriented with the SpaceParts dataset used throughout the series.

- Tabular Editor x Databricks (Part 2) - This article explains the Databricks platform, covering lakehouse architecture and how it combines data warehouse reliability with data lake flexibility. Read this to understand the fundamentals of Databricks as a data source for Power BI.

- Tabular Editor x Databricks (Part 3) - This article covers data exploration techniques using Unity Catalog and the Databricks SQL Editor. Read this to learn how to navigate and understand your data structures before building semantic models.

- Tabular Editor x Databricks (Part 4) - This article walks through configuring SQL Warehouses and installing the Simba Spark ODBC driver needed for Tabular Editor connectivity. Read this for the essential infrastructure setup steps before you start building models.

- Tabular Editor x Databricks (Part 5) - This article covers importing tables from Databricks into a semantic model, including C# scripts for friendly naming, automatic relationships, and metadata import from Unity Catalog. Read this for practical automation techniques and deployment guidance using service principals.

In conclusion

In summary, there are several different approaches to set up a semantic model that uses data from Databricks:

- Databricks SQL and Power BI Desktop: With this approach, users connect to Databricks SQL from Power BI Desktop, where they create and manage their semantic model from the Windows application. While simple, this approach has limitations that can inhibit enterprise organizations with large models.

- Publish to Power BI Service from Databricks, directly: With this approach, you create the semantic model by publishing tables from the Databricks UI to Power BI Service. Then, you build the model in the web. This approach can be suitable when users have Mac OS or simple modelling requirements, but the web modelling experience does not offer the same features or utility as alternatives.

- Power BI task in Databricks workflows: With this approach, you can streamline and automate semantic model publishing from Databricks using Databricks workflows, but you still need to build the model in the Power BI service via the web. You can leverage more advanced CI/CD, but have the same limitations as Approach 2.

- Databricks connector in Tabular Editor: With this approach, you use Tabular Editor to connect to Databricks and build/manage your semantic model, which you deploy to Power BI. Here, you can leverage all the productivity enhancements of Tabular Editor to ensure that your semantic model is tuned to perfection. However, developers need to know how to use Tabular Editor and deploy/manage models via XMLA read/write endpoints.

- Converting metric views to Power BI semantic models by using Tabular Editor: In addition, Tabular Editor helps the translation between Unity Catalog Metric Views and Power BI semantic models with the Semantic Bridge.

Choosing the right approach depends on your scenario and needs!