Key takeaways

- In this article, we discuss examples of MCP servers in BI tools and consider the practical implications for developers, users, and enterprise teams that manage BI environments.

- With Model Context Protocol (MCP) servers, you can leverage the strengths of LLMs with specialized tools to not only write code but also to interact with your reports, models, data, and files.

- It’s likely that more BI tools will use MCP servers in the future to facilitate more useful and effective conversational BI experiences for their users.

- To prepare for MCP servers, enterprise teams should review their current API usage, consider key elements that should be determined beforehand, and pay attention to their current backup and recovery setup.

Most BI developers have experimented with AI for Power BI, be it copy/pasting code from ChatGPT, generating report background images, or using web search to research a technical topic for a project. While few would outright deny that AI can and will eventually transform the way we work with and use data, the current gap between that promise and people copy/pasting questionable code is… rather large.

But what if you could leverage the strengths of large language models (LLMs) with specialized tools to interact with your reports, models, data, and files? What if you could use AI not just to suggest or write code, but to delegate any feasible task – from governance and administration down to bulk-formatting of visuals in a dashboard?

This is not speculation; it's now fully possible with the model context protocol – specifically, with MCP servers. If you want a demonstration, I’ve already created a few short videos showing how you use MCP servers to query a semantic model and control Power BI and Fabric.

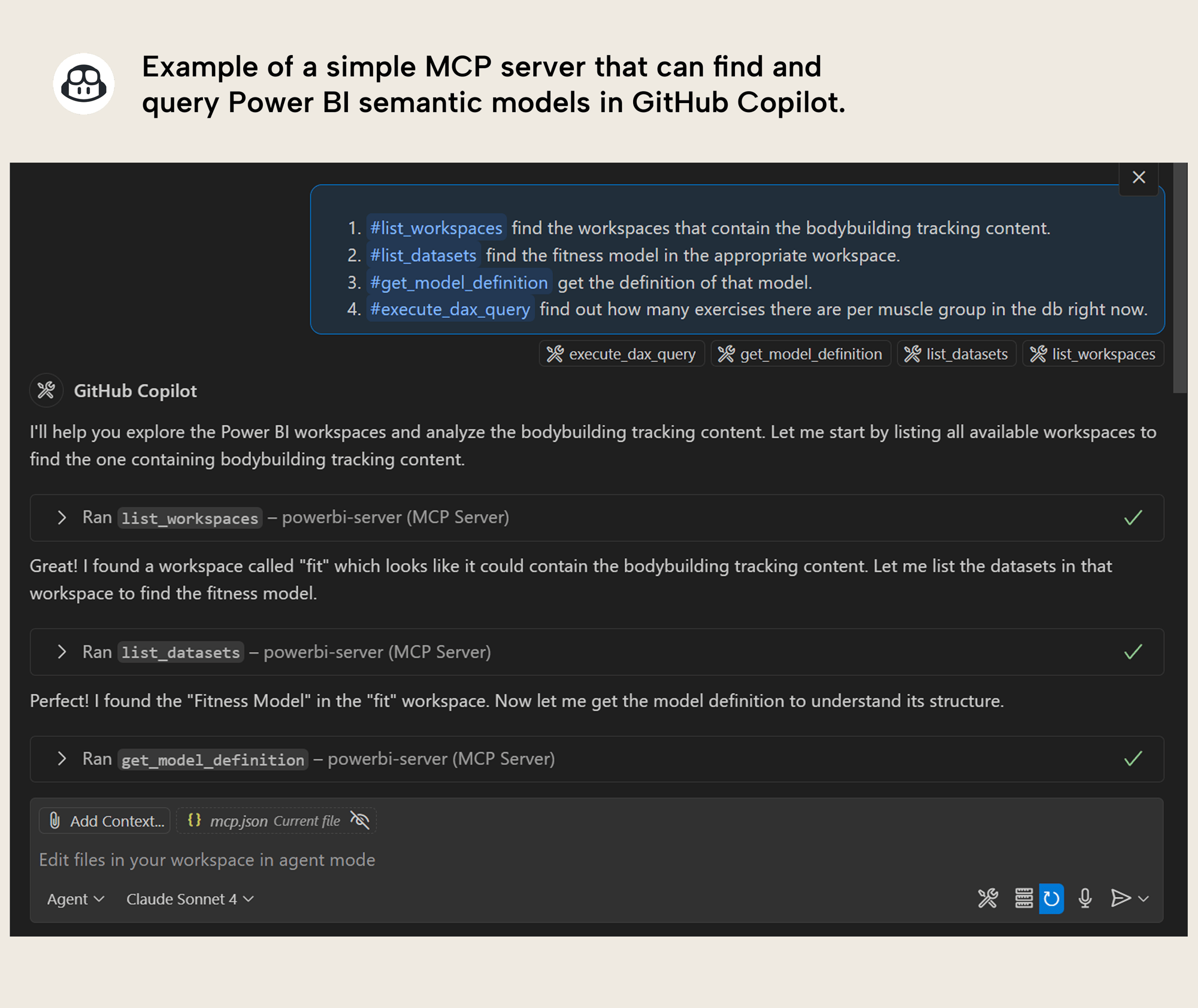

Here’s an example from GitHub Copilot:

In this short article, we want to point to some examples of MCP servers in BI tools and consider the practical implications for developers, users, and enterprise teams that manage BI environments. At Tabular Editor, we've been investing heavily in investigating MCP servers for BI and beyond, and we’ve prepared a number of articles on the topic. We're also collaborating with SQLBI on several articles and videos that will go deeper into the practical details of what MCP servers are and how they might impact Power BI, specifically.

Later in this article, we’ll speculate a bit about how MCP servers could change BI tools in various ways.

Explaining MCP servers in simple terms for business intelligence professionals

If you look up the model context protocol, you will find an overwhelming amount of content that explains it as a “USB-C port for AI applications and agents”. This can be a helpful analogy, but it’s confusing unless you understand it in a broader, simpler, and more pragmatic context.

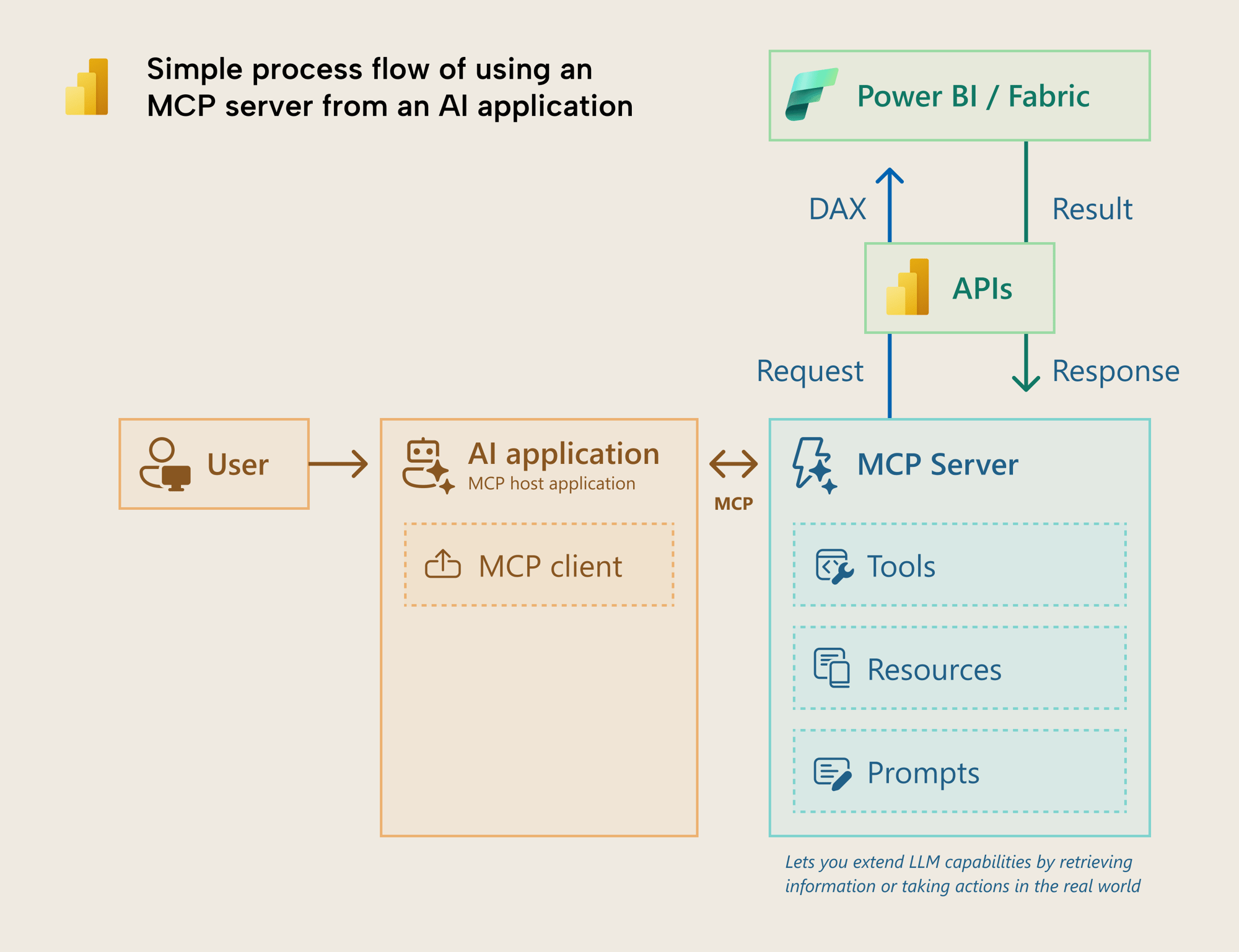

Here’s a simple process flow that shows use of an MCP server to query a Power BI semantic model by using DAX:

The user uses an AI application like Claude Desktop or GitHub Copilot in VS Code. They can integrate an MCP server that they made and run locally, which connects to the application by using the model context protocol. This is the “plugging in of the USB-C”. Then, they can ask the LLM a data question, and it uses a tool in the MCP server to send a DAX query to a semantic model, and retrieve the results, which it uses to answer the user.
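The flow above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a real MCP server: `run_dax_query`, the `SALES_MODEL` stub, and `fake_llm_pick_tool` are hypothetical stand-ins for a tool in the server, the semantic model, and the LLM's choice of tool. A real server would typically be built with an MCP SDK and would send the query to the semantic model through an API.

```python
from typing import Callable

# Hypothetical stand-in for a semantic model: maps a DAX query to result rows.
SALES_MODEL = {
    'EVALUATE ROW("Total Sales", [Total Sales])': [{"Total Sales": 1250.0}],
}

def run_dax_query(query: str) -> list[dict]:
    """Tool exposed by the (hypothetical) MCP server: run a DAX query."""
    if query not in SALES_MODEL:
        raise ValueError(f"Query not supported by this stub: {query}")
    return SALES_MODEL[query]

# The server advertises its tools by name so the AI application can call them.
TOOLS: dict[str, Callable[[str], list[dict]]] = {"run_dax_query": run_dax_query}

def fake_llm_pick_tool(question: str) -> tuple[str, str]:
    """Stand-in for the LLM: turn a question into a tool name and a DAX query."""
    return "run_dax_query", 'EVALUATE ROW("Total Sales", [Total Sales])'

def answer(question: str) -> str:
    tool_name, dax = fake_llm_pick_tool(question)  # 1. LLM chooses a tool and writes DAX
    rows = TOOLS[tool_name](dax)                   # 2. The tool sends the query to the model
    value = rows[0]["Total Sales"]                 # 3. The results come back to the LLM
    return f"Total sales are {value:,.2f}."        # 4. The LLM answers the user

print(answer("What are our total sales?"))
```

The point of the sketch is the division of labor: the LLM decides what to ask, and the tool does the actual querying.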

Let’s extend this further. Consider the following simple scenario:

Let’s say that you build a semantic model and several dashboards that use it. However, you have several challenges:

- You and your users want to interact with and explore the data by using natural language; you want to “talk with your data” in niche or ad hoc scenarios, and do something with that data like creating visuals, diagrams, or summaries. This is called conversational BI; however, your tool does not support it out of the box with your current licenses.

- It's difficult to make changes in bulk to the dashboards, and renaming or removing fields in the semantic model often results in breaking changes to visuals.

- You want to review information ad hoc about the dashboard usage, provision access to new users, and refresh the underlying semantic model or its tables, all within a single development environment – like one you’re using to develop semantic models.

To help you address these challenges, you could use one or more MCP servers for your BI tool. These servers could be custom-made by yourself, or they could be official first-party servers.

- One server could facilitate read-only conversational BI by allowing you to discover and query semantic models or even underlying data sources like a data warehouse or lakehouse. The server has tools to use APIs and the LLM can generate queries sent by the APIs to retrieve information back.

- Another server could facilitate read/write BI development by allowing you to make bulk changes to both models and dashboards. You could ask the AI assistant to make changes to models and reports, simultaneously, and then provide links when it's done for manual validation.

- A third server could facilitate governance and administration tasks for privileged roles, providing easy access to supporting information and operations by just asking the AI assistant, with detailed logging and controls to prevent unintentional or destructive operations.
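To make the difference between read-only tools and tools with "logging and controls" more concrete, here is a minimal sketch. Everything in it is hypothetical (the `Tool` class, the `list_models` and `delete_report` tools, and the `confirmed` flag); it only illustrates the idea of logging every call and refusing destructive operations unless they are explicitly confirmed.

```python
import logging
from dataclasses import dataclass
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("bi_mcp_sketch")

@dataclass
class Tool:
    name: str
    func: Callable[..., str]
    destructive: bool = False  # True for operations that change or delete content

def list_models() -> str:
    # Hypothetical read-only tool.
    return "Sales, Inventory"

def delete_report(report: str) -> str:
    # Hypothetical destructive tool; a real one would call the BI tool's API.
    return f"Deleted report '{report}'"

TOOLS = {
    "list_models": Tool("list_models", list_models),
    "delete_report": Tool("delete_report", delete_report, destructive=True),
}

def call_tool(name: str, confirmed: bool = False, **arguments) -> str:
    """Log every call and block destructive tools unless confirmed."""
    tool = TOOLS[name]
    log.info("tool=%s arguments=%s confirmed=%s", name, arguments, confirmed)
    if tool.destructive and not confirmed:
        return f"Blocked: '{name}' is destructive and needs explicit confirmation."
    return tool.func(**arguments)

print(call_tool("list_models"))
print(call_tool("delete_report", report="Q3 Overview"))
print(call_tool("delete_report", confirmed=True, report="Q3 Overview"))
```

A read-only server would simply not register any tool marked as destructive.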

In this context, you could use an application that facilitates interaction with an AI assistant via a chat interface. Examples include Claude Desktop, VS Code, and Cursor. If the application supports the model context protocol (in other words, it's an MCP host and has MCP clients) then you can integrate with MCP servers. MCP servers provide the LLM (your AI assistant) with special tools and resources to extend its functionality.

In this example, you could integrate with MCP servers for your BI tool that you run locally, or MCP servers that the first-party vendor provides, remotely. Once you've set up authentication appropriately, you can then use these MCP servers just by making a request or asking a question in natural language to the AI assistant.

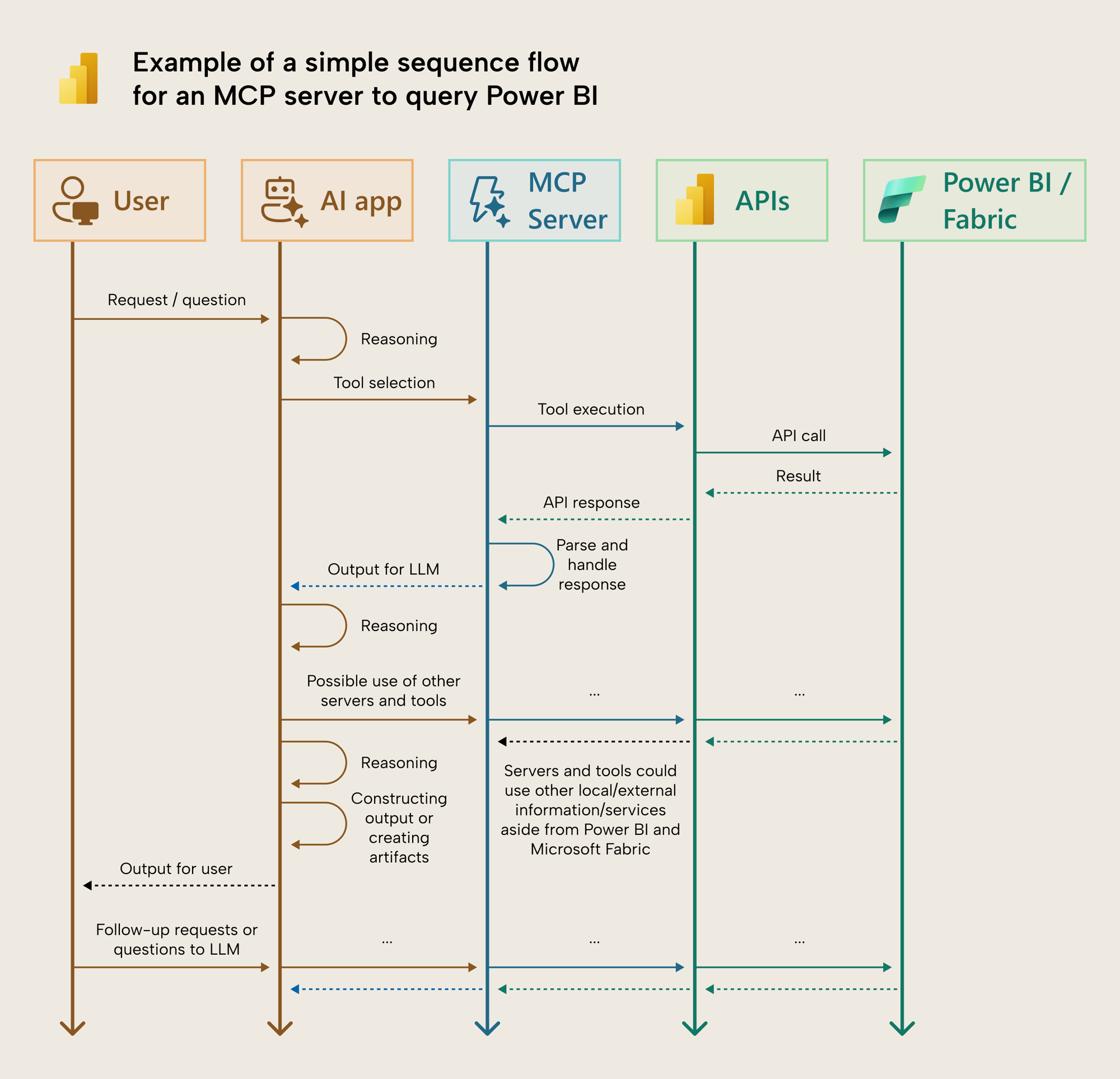

The following sequence diagram shows an example of what this can look like:

Of course, the example above is very simple (and perhaps abstract), but it helps you understand, at a high level, what the sequence of events is when you use an MCP server. In reality, the process is more complex and involves elements like authentication, other servers, context, and what information is sent to the LLM (which is especially important for data privacy and security concerns).
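At the protocol level, the exchange between the AI application and an MCP server consists of JSON-RPC 2.0 messages, with methods such as `tools/list` and `tools/call`. The following sketch shows roughly what those messages look like. It is deliberately simplified: the `handle` function and the `run_dax_query` tool are hypothetical, and the initialization handshake, transport, and authentication are left out entirely.

```python
import json

def run_dax_query(query: str) -> str:
    """Hypothetical tool; a real one would call the BI tool's API."""
    return json.dumps([{"Total Sales": 1250.0}])

def handle(request: dict) -> dict:
    """Answer one JSON-RPC request the way a very small MCP server might."""
    def error(code: int, message: str) -> dict:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": code, "message": message}}

    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [{
            "name": "run_dax_query",
            "description": "Run a DAX query against a semantic model",
            "inputSchema": {"type": "object",
                            "properties": {"query": {"type": "string"}},
                            "required": ["query"]},
        }]}
    elif method == "tools/call":
        if params.get("name") != "run_dax_query":
            return error(-32602, "Unknown tool")
        text = run_dax_query(**params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(-32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

# 1. The AI application asks which tools the server offers.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
# 2. The LLM picks a tool and writes the arguments; the application sends the call.
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "run_dax_query",
                          "arguments": {"query": 'EVALUATE ROW("Total Sales", [Total Sales])'}}})
# 3. The result text goes back to the LLM, which uses it to answer the user.
print(listing["result"]["tools"][0]["name"])
print(call["result"]["content"][0]["text"])
```

Note that the tool result is returned to the LLM as content, which is why it matters what information a tool is allowed to return.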

So, returning to the metaphor, if the model context protocol is USB-C for your data, then you're using it to “plug in” aspects of your BI tool that you specify in the MCP server. You can use the MCP server not only to retrieve information (data and metadata) from your BI tool, such as for conversational BI, but also to let the LLM take actions. The tools in an MCP server give your AI application the ability to turn your text instructions into actions, which makes it an agent.

This is so powerful because you're not bound to just one MCP server for one (BI) platform or tool. You can use and combine multiple MCP servers to work in tandem, creating flows that handle information and actions across end-to-end workflows. This can include MCP servers to work with your local files, communication systems, online information, and external systems.

Both the potential and the risks are clearly very high. This is an area that is evolving not just quickly, but so fast it gives you whiplash. So, we’d like to offer some thoughts and speculations on where this might take BI tools. MCP servers are quite an exciting development, but it's also important to reflect openly and practically about what this might mean for BI professionals and organizations alike.

These next sections represent some opinions and thoughts of the author, so please do interpret them critically, and with a grain of salt.

How might MCP servers change BI tools in general?

It's likely that we'll see BI tools use MCP servers to facilitate more useful and effective conversational BI experiences. First, BI tools will likely offer official MCP servers that provide read-only access to various APIs to retrieve data and metadata. Tableau has already released an official MCP server with some (very) basic functionality, as has the Real-Time Intelligence workload in Microsoft Fabric (discussed in this blog). Undoubtedly, more official MCP servers will follow for Power BI, Fabric, and other BI tools, which (speculatively) would probably wrap around existing APIs.

However, these initial first-party MCP servers will likely not cater to all needs. Rather, it's more likely that these initial servers will be impressive and useful, but still too broad and nonspecific to live up to the expectations and practical use cases of early adopters. MCP servers also don’t “just work” – you can’t just plug in an MCP server for the APIs of your BI tool and be able to find or do anything. For good results, the development of tools and resources in a server requires a balance between a variety of complex factors that go beyond the scope of this article. For that reason, there's likely to be an explosion of open-source and third-party MCP servers.

Communities around these BI tools will likely lead a surge in open source and third-party MCP servers that offer custom or specialized functionality to solve specific problems. Already, there's a variety of public Power BI MCP servers that you can find on GitHub (we don’t link them here, because we can’t guarantee the safety and security of these servers). It's safe to assume that this will amplify exponentially over the coming weeks (yes, weeks), months, and years.

Aside from open-source MCP servers, people can also make and run their own, locally. Ambitious and tech-savvy individuals can quite quickly build custom MCP servers tailored to their specific needs regarding their own organizational and data challenges. Specific servers built by teams and organizations to address their specific needs may emerge as a good strategy to amplify success – particularly with respect to model memory and knowledge management (a topic we will cover in a later article).

Furthermore, as AI code-assistant tools evolve, creating MCP servers will only become easier. AI tools will reduce the technical barrier and time or effort required to make such a server. This is still probably not something that a self-service business user will do, of course, but this is likely to become a common scenario among decentralized analytics teams. So, this “citizen MCP” scenario is not all that farfetched to think about (and prepare for).

Currently, MCP servers can't be used from within any BI tool. To reiterate, to use an MCP server, you use an AI application that supports the model context protocol, like Claude Desktop or VS Code. These are both desktop applications, but there are also command-line applications (like Claude Code), web applications, and mobile applications.

These AI applications are powerful enough that they could challenge traditional BI tools in some areas in the near future, particularly with the right mix of MCP servers. However, some (like Claude Desktop) are still maturing and unlikely to be welcomed with open arms by enterprise IT teams for business users. Others (like VS Code) are too intimidating and complex for an average business user to use effectively. So, we might expect existing tools in and around the BI space to start supporting the model context protocol from their existing conversational BI interfaces, sooner rather than later… including all of your favorites 😊

This poses some interesting problems, however, since existing desktop MCP host applications currently place few – if any – limitations on what MCP servers you can use, leaving that responsibility to the user. This is not exactly an enterprise-friendly situation, so new controls, governance, and guidance will need to be put in place to prevent users from questionable or malicious use of servers and tools. Likely (hopefully), BI vendors will put some process in place to centrally manage and restrict what servers and tools are used by whom. The simplest scenario would be that vendors limit the usage to only their own, first-party MCP servers and the tools available therein.

There are other challenges to consider, too, because control will need to apply not only by server, but also by tool. Users could mistakenly or unintentionally (e.g. due to LLM hallucination or misinterpretation) take destructive actions with agents, so their use needs to be well-governed, and users themselves need to be well-educated about responsible use. For this reason, it's likely that initial implementations will restrict servers and tools to those that are read-only… at least at first.
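Control "by server and by tool" could, for instance, take the form of an allowlist that a host application or gateway checks before every tool call. The sketch below is purely hypothetical: the roles, server names, and tool names are invented for illustration, and no current BI tool is known to expose a policy in this shape.

```python
# Hypothetical policy: which tools each role may use, per MCP server.
POLICY = {
    "analyst": {"bi-readonly": {"list_models", "run_dax_query"}},
    "admin": {
        "bi-readonly": {"list_models", "run_dax_query"},
        "bi-admin": {"refresh_model", "grant_access"},
    },
}

def is_allowed(role: str, server: str, tool: str) -> bool:
    """Allow a call only if the role is granted that tool on that server."""
    return tool in POLICY.get(role, {}).get(server, set())

print(is_allowed("analyst", "bi-readonly", "run_dax_query"))  # True
print(is_allowed("analyst", "bi-admin", "grant_access"))      # False
print(is_allowed("admin", "bi-admin", "grant_access"))        # True
```

Anything that is not explicitly listed is denied, which matches the read-only-first approach described above.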

This is all speculation, of course. The area is evolving incredibly quickly, with both users and vendors rushing forward. It's entirely possible that vendors—eager to see higher adoption and sentiment of AI tools and features—rush to deploy MCP servers and clients without the appropriate controls in place. Preview features auto-enabled, seatbelts unbuckled, and all. Well, let’s just call that a hypothetical worst-case scenario, for now. Until the day comes that somebody’s AI agent accidentally causes a multi-million dollar, headline news mistake, that is.

What can enterprise teams do to prepare for MCP servers?

Pandora’s box is already open. These tools are not hypothetical; they’re already out and being used, in the wild. So, if you're in an enterprise BI or IT team, then you probably want to start to think about and prepare for this “MCP avalanche”. There’s no specific MCP server guidance that one can reliably provide, yet, but there are some common-sense steps to consider:

- Ensure that you have a consistent and sustainable practice in place to view, review, and adjust who has access to which content, and what their permissions are.

- Review the tenant settings for API usage and make sure that only trusted individuals and service principals can use the APIs for your (BI) tools.

- Research the various ways that one can authenticate and use the APIs. Nowadays, depending on your environment, there are more options than only app registration.

- Consider a pilot project where key individuals explore the possible benefits and risks for MCP servers in your organization. Explore who could benefit, what’s needed to enable them, and what’s needed to mitigate and prevent risks from becoming threats or issues. Make sure that you do this not just from a security or IT perspective, but also in terms of the different personas and scenarios in your organization. Consider the perspective of not only BI content creators, but also consumers.

- If you do plan to use MCP servers, consider important elements, like which host applications, AI services, LLMs, and MCP servers you might use, and how you can use them securely while respecting organizational security, governance, and data policies.

- Ensure that you pay attention to announcements from your BI tool about new MCP servers or related features. You want to especially pay attention to any capabilities that could be auto-enabled in tools or tenants, and what functionality this provides.

- Pay attention to backup and recovery. No matter how well you define your controls, there are always possibilities of mistakes with new tools and technologies. If there is accidentally a destructive action, you want to be able to restore a prior state.

- Be wary of planning for an overly conservative or restrictive approach that tries to block these tools entirely. Remember: If someone wants into the house and the door is locked, they’re going to break a window. Instead, take a proactive but measured approach to evaluate and mitigate risk while still seeking opportunities to safely enable people interested in using MCP servers.
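As a small illustration of the backup and recovery point in the list above, the following sketch copies a file aside before a change is applied, so that a prior state can be restored. It assumes model metadata that lives in a local file (the `model.json` name and its contents are hypothetical); in practice, source control or your platform's own backup features would serve the same purpose.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Callable

def apply_with_backup(path: Path, change: Callable[[Path], object]) -> Path:
    """Copy the file aside, then apply the change; return the backup path."""
    backup = path.with_name(path.name + ".bak")
    shutil.copy2(path, backup)
    change(path)
    return backup

def restore(path: Path, backup: Path) -> None:
    """Overwrite the file with its backup copy."""
    shutil.copy2(backup, path)

with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp) / "model.json"  # hypothetical model metadata file
    model.write_text('{"measures": ["Total Sales"]}')
    # Simulate an agent accidentally removing all measures.
    backup = apply_with_backup(model, lambda p: p.write_text('{"measures": []}'))
    restore(model, backup)
    print(model.read_text())
```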

How might MCP servers change BI experiences for users?

As we’ve all seen, vendors are determined to provide users with conversational BI. With MCP servers, this seems to work better than current implementations. It's likely that MCP servers could lead to the following:

- More conversational BI tools and features, and more adoption across different tools.

- A higher demand for user training and enablement to use conversational BI.

- More interest and pressure to use non-traditional, AI tools for data analysis, especially as their data visualization capabilities (which are already quite good) become more impressive.

- Interest in combining conversational BI with agentic workflows to take data-driven actions. In the short-term, this might be simple things like sending e-mails, messages, or performing some kind of change to data or files. However, over the mid- to long-term, it's plausible that we might see more sophisticated, real-world actions being possible.

It's reasonable to expect that these experiences will create (and already are creating) a need for soft skills training among users to best leverage these capabilities, including AI and data literacy, communication skills, and critical thinking.

To quote our colleague Greg Baldini, “Critical thinking and deep understanding of your domain and what your intended outcome is are critical. Otherwise you won't get an intended result, you'll just generate *something*. There's no guarantee that *something* is useful or valuable, even if it is correct.”

How might MCP servers change BI experiences for developers?

In the “vibe coding” era, it's likely that we will see MCP servers become one instrumental part of AI truly augmenting developers to achieve better outcomes faster. Eventually, MCP servers will arise that empower developers to complete significant portions of a task or project in natural language. This poses potential benefits for developers of any skill level:

- Novice or less technical developers can use these tools to overcome technical hurdles preventing them from obtaining a particular result. The individuals that stand to benefit the most here are ones who deeply understand the business and their needs, and can articulate these things well. However, the lack of technical knowledge puts these individuals at risk of dealing with errors, hallucinations, or unsustainable outputs, particularly as a project scales in size and complexity.

- Experienced developers can use these tools to minimize trivial or mundane tasks or help them break more complex tasks into workable parts. They will have to pay less “inconvenience tax” to get the results they want but will still need to invest significant time in understanding the business and users who will use the things they make. Experienced developers may also have to spend more time troubleshooting and correcting the “vibe-coded” outputs from others which require human intervention.

Again, we’re speculating about a future which has not yet arrived. Early adoption is one thing, but change – especially in organizations – takes time and effort to execute successfully.

There are still many unanswered questions (including about governance and security) and risks (such as the caveats of LLMs, like nondeterminism, error by omission, and the full spectrum of hallucinations). Regardless, the potential of MCP servers is quite clear, as are the potential risks. Thus, in an age where things only seem to move faster, it’s good to think proactively rather than be caught off guard by an auto-enabled preview setting and a few ambitious individuals.