Key takeaways

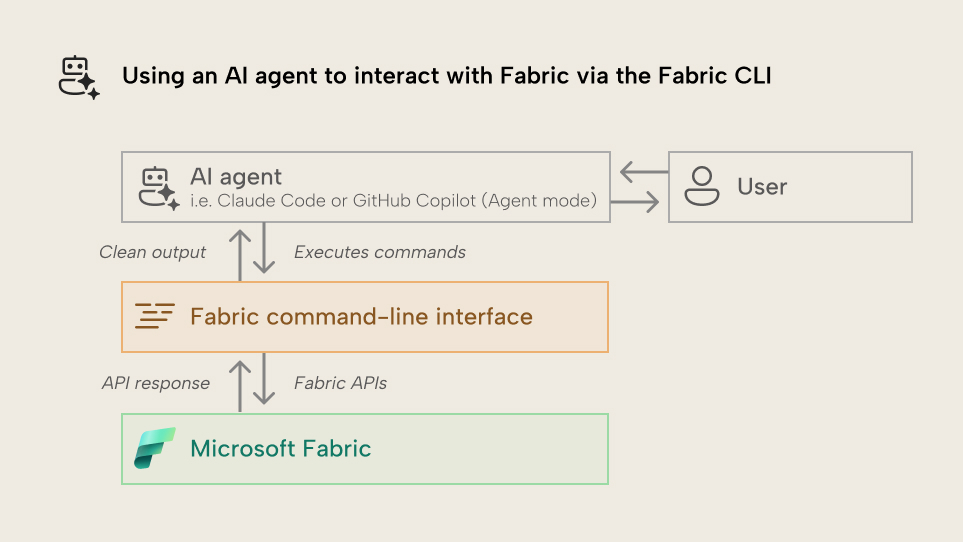

- Using an AI agent to interact with Fabric via the Fabric CLI can be surprisingly effective. But keep in mind that this article only covers supervised agents acting upon instructions from a human.

- To set up an agent, you need access to a coding agent, and you must invest time to establish context for it. Without context, the agent won't know how to use the Fabric CLI, as it isn't present in LLM training data.

- An agent using the Fabric CLI can be effective when exploring tenants, workspaces, or items. Once context has been established, the agent can examine available data and create a summary.

- You can also parallelize tasks by using multiple agents at once. For example, this can help you migrate workspaces.

In a previous article, we introduced the Microsoft Fabric command-line interface (CLI) as a tool to programmatically work with the Fabric data platform. The CLI has many possible applications for automating and orchestrating tasks, and it can greatly enhance your productivity and efficiency in many scenarios. Furthermore, unlike other approaches such as the Fabric APIs or custom integrations, the Fabric CLI is easy to set up, use, and troubleshoot, even if you're not a technical expert or engineer. It also doesn't require a Fabric capacity; you can use the Fabric CLI with Pro and Premium-Per-User workspaces, too.

One surprisingly effective way to use the Fabric CLI is to give it to a coding agent, which you can instruct to complete certain tasks. With this approach, the agent uses the Fabric CLI (in combination with other tools) to retrieve information from or take actions in your Fabric environment. This means that you can delegate tasks to the agent or simply use the Fabric CLI to improve its outputs on other tasks (like semantic model development).

When and why is this useful?

The following sections briefly describe two example scenarios where an agent using the Fabric CLI can be convenient, efficient, or just helpful for you.

Scenario one: Exploring tenants, workspaces, or items

The example here involves understanding how many workspaces are on Fabric Trial capacity. As we all know, Fabric Trials have been extending ad infinitum for the last two years, despite constant warnings from Microsoft that the trial will end. However, all good things do eventually come to an end. For governance reasons, it's now time for us to migrate all workspaces in the SpaceParts tenant from Fabric Trial capacities, and end / disable the Fabric trial for our users.

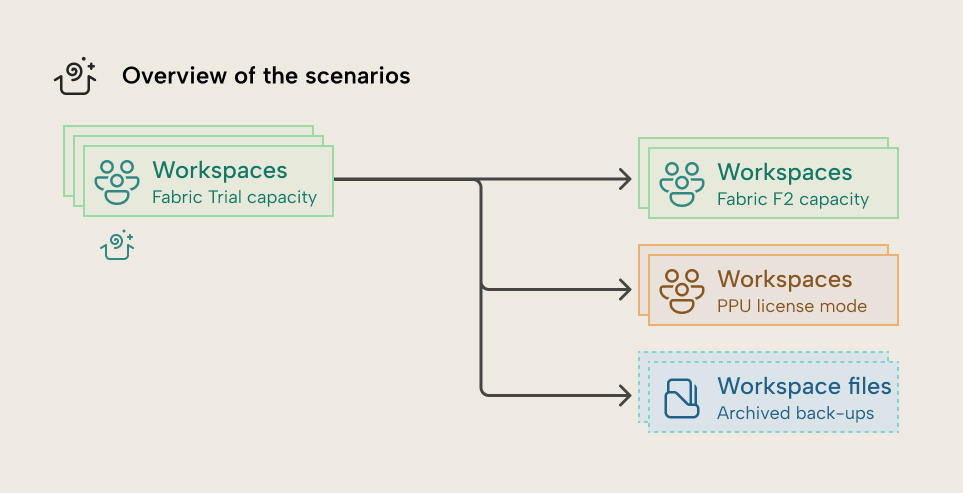

A summary of the scenario is as follows:

In the SpaceParts tenant, we have many workspaces which are currently on Fabric Trial capacity. These trials are being extended regularly, despite regular warnings from Microsoft that you will lose data if you fail to migrate before the trial ends. As such, SpaceParts self-service users on this tenant don't take these warnings seriously anymore, and workspaces on Trial capacities are regularly being used for production workloads and business cases. The risk is obvious: if Microsoft ever decides to stop renewing trials, there is a serious threat of lost data and disruption.

Of course, this is a governance problem that could have been prevented. But it's time to act now to prevent future damage. Borp from SpaceParts needs to take inventory of the workspaces and then either migrate them to a Fabric F2 capacity or Premium-Per-User license mode, or archive and delete the many temporary test workspaces.

First, we need to understand how many workspaces are using the Fabric Trial capacities, what's in these workspaces, and who owns them. Doing this manually would require going through each workspace one-by-one. This would be an absurd waste of time.

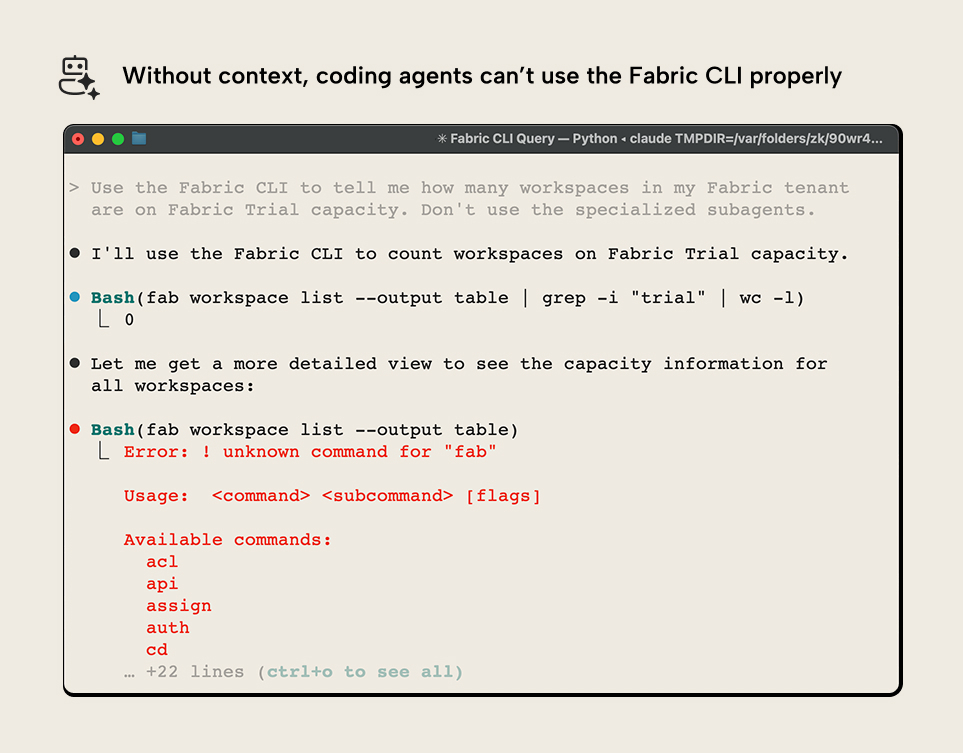

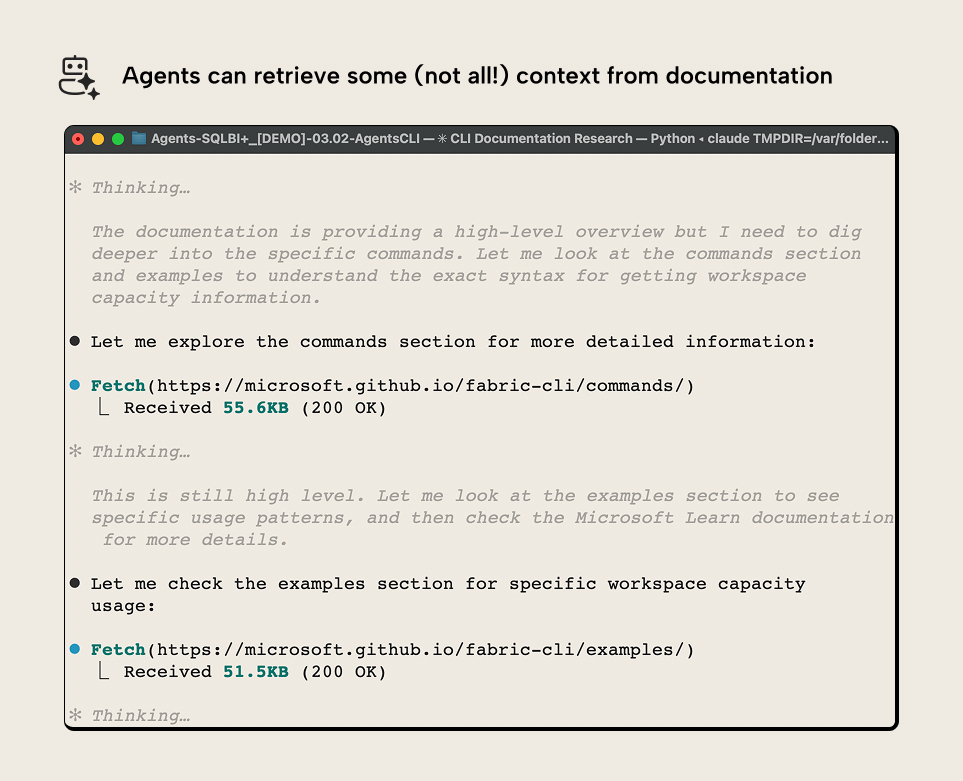

Instead, we can delegate this task to an agent. To do this, we first need to make sure that we have the Fabric CLI installed, and that we can use a coding agent (like Claude Code in this case) that can access the CLI. We also must provide the coding agent with some context about how to use the Fabric CLI, which is a new tool not present in LLM training data.

If we don't provide this context, then the coding agent is likely to make a lot of mistakes:

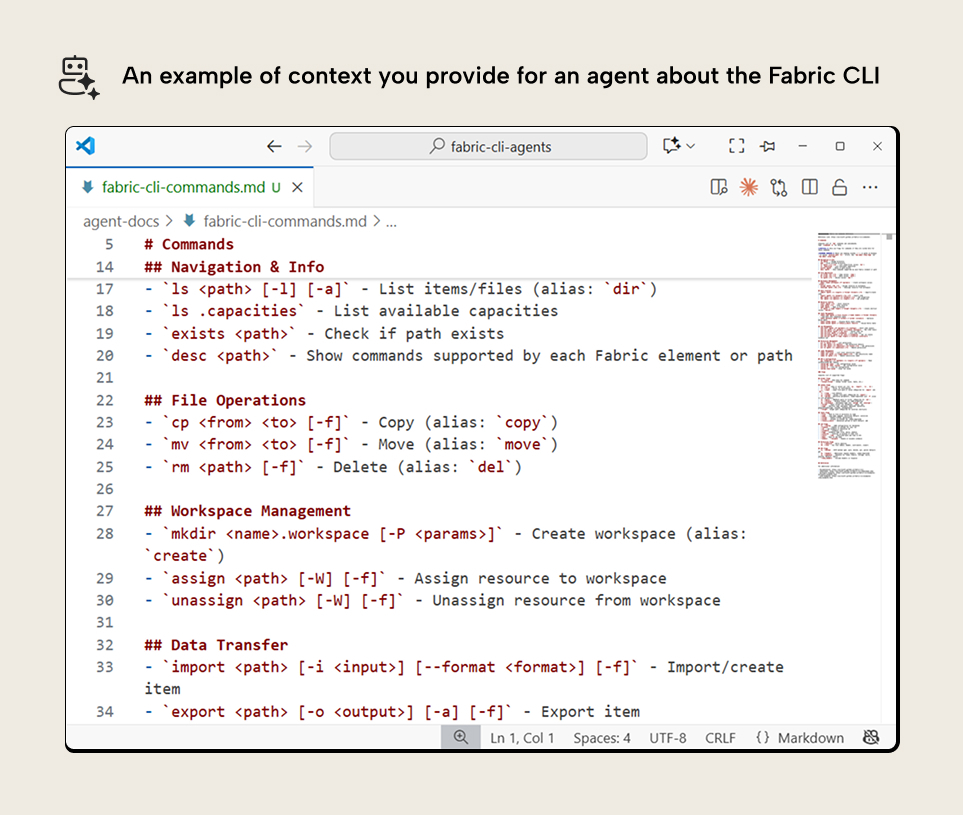

Note that this works for generic context and examples. However, you run the risk that the agent inefficiently spends time and tokens on context and examples that might not be relevant to your scenario. In this case, it's far more efficient to provide some simple step-by-step instructions and example commands ourselves, in a markdown file.

Once we provide the context, we can prompt the agent. We ask the agent to check the number of workspaces in our tenant, and how many of these workspaces are on Fabric Trial capacity. We ask it to examine each workspace one-by-one, identifying the owner and workspace contents, and to save this information in a summary markdown document that we can review later.
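As a rough illustration of the summary document requested in that prompt, the following Python sketch renders a markdown report from a list of workspace records. The `WorkspaceRecord` fields, the `render_summary` function, and the "Trial" substring check are all assumptions made for this example; they are not the Fabric CLI's output schema. In practice, the agent writes the summary itself from whatever the CLI returns.

```python
from dataclasses import dataclass, field


@dataclass
class WorkspaceRecord:
    # Hypothetical record shape for illustration only; these field names are
    # not taken from the Fabric CLI's actual output.
    name: str
    capacity: str
    owner: str
    items: list[str] = field(default_factory=list)


def render_summary(records: list[WorkspaceRecord], trial_marker: str = "Trial") -> str:
    """Render a markdown summary, counting workspaces whose capacity name
    contains `trial_marker` (case-insensitive)."""
    on_trial = [r for r in records if trial_marker.lower() in r.capacity.lower()]
    lines = [
        "# Workspace inventory",
        "",
        f"- Total workspaces: {len(records)}",
        f"- On trial capacity: {len(on_trial)}",
        "",
        "| Workspace | Capacity | Owner | Items |",
        "| --- | --- | --- | --- |",
    ]
    # Sort by name so repeated runs produce a stable, diff-friendly document.
    for r in sorted(records, key=lambda rec: rec.name.lower()):
        items = ", ".join(r.items) if r.items else "(empty)"
        lines.append(f"| {r.name} | {r.capacity} | {r.owner} | {items} |")
    return "\n".join(lines) + "\n"
```

A fixed table layout like this makes the result quick to scan when you review it, and easy to hand to another agent as input for a follow-up task.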

Once the agent starts on the task, we can leave it alone and continue working on other things, until it is done. You can see an example below that shows a coding agent using the Fabric CLI to survey the workspaces:

Scenario two: Parallelization of tasks

A key advantage to coding agents is that you can have multiple terminals active at once. Basically, multiple coding agents can be working at the same time on similar or related tasks. When you plan and manage this well, it can lead to dramatic increases in productivity.

The example here involves the migration of the Fabric Trial workspaces from the previous scenario. We need to go through each trial workspace one-by-one and decide whether it should be migrated to a Premium-Per-User (PPU) workspace, a Fabric F2 workspace, or archived and deleted. This isn't a decision that we can automate, but doing this manually in the user interface of Fabric would be… painful.

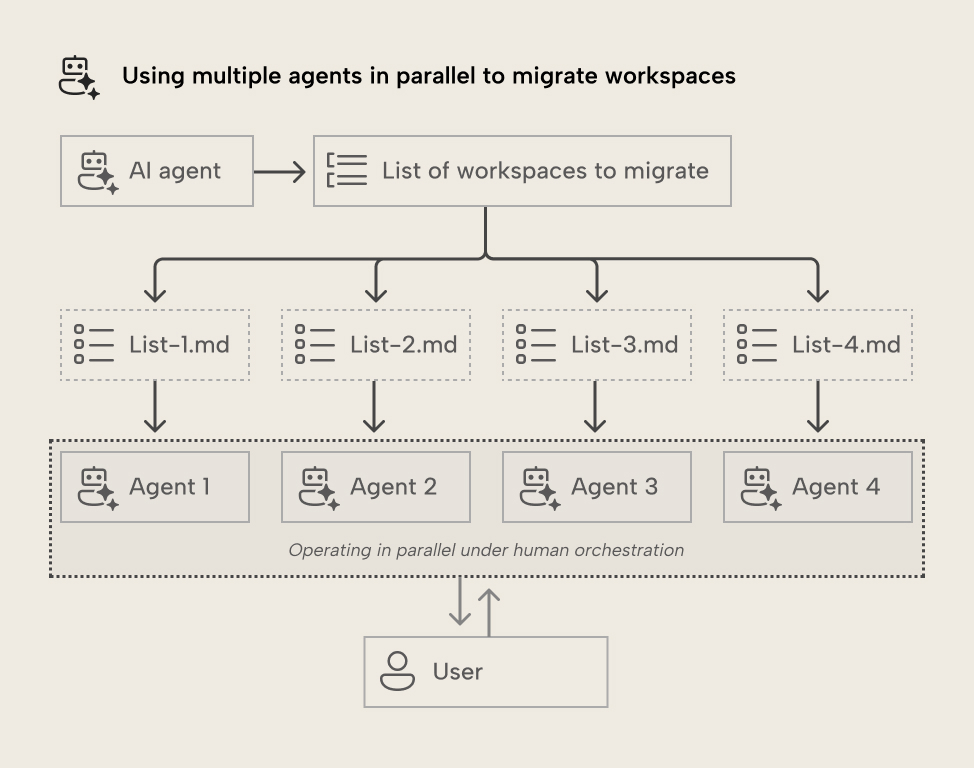

So, we can ask an agent to divide the Fabric Trial workspaces into four separate lists, which four separate agents can work on simultaneously. In theory, you can scale this up as much as you like, but since we must supervise all agents at once, let's keep it simple.
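The split itself is simple enough to sketch. The Python below is one possible way to divide a list of workspace names into roughly equal lists, one per agent, and to render each list as a markdown checklist. The function names and the round-robin strategy are assumptions for this example; the article's agent may divide the work differently.

```python
def split_workspaces(workspaces: list[str], n_agents: int = 4) -> list[list[str]]:
    """Distribute workspace names round-robin across `n_agents` lists."""
    if n_agents < 1:
        raise ValueError("n_agents must be at least 1")
    ordered = sorted(workspaces)
    # Slice i takes every n-th name starting at position i, so list sizes
    # differ by at most one.
    return [ordered[i::n_agents] for i in range(n_agents)]


def to_checklist(names: list[str]) -> str:
    """Render one agent's list as a markdown checklist it can tick off."""
    return "\n".join(f"- [ ] {name}" for name in names)
```

For example, `split_workspaces(["e", "a", "c", "b", "d"], 2)` returns `[["a", "c", "e"], ["b", "d"]]`. Each list can then be saved to its own markdown file and handed to one agent.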

The following diagram shows a high-level overview of how this works:

In summary, all four agents work in parallel on separate lists of workspaces. Each agent works one-by-one through the workspaces on its own list, investigating the workspace contents, and asking the user whether to migrate (to PPU or Fabric F2) or archive and delete the workspace. The user supervises and responds to all four agents at once, focusing only on the agents which require user input, one at a time.

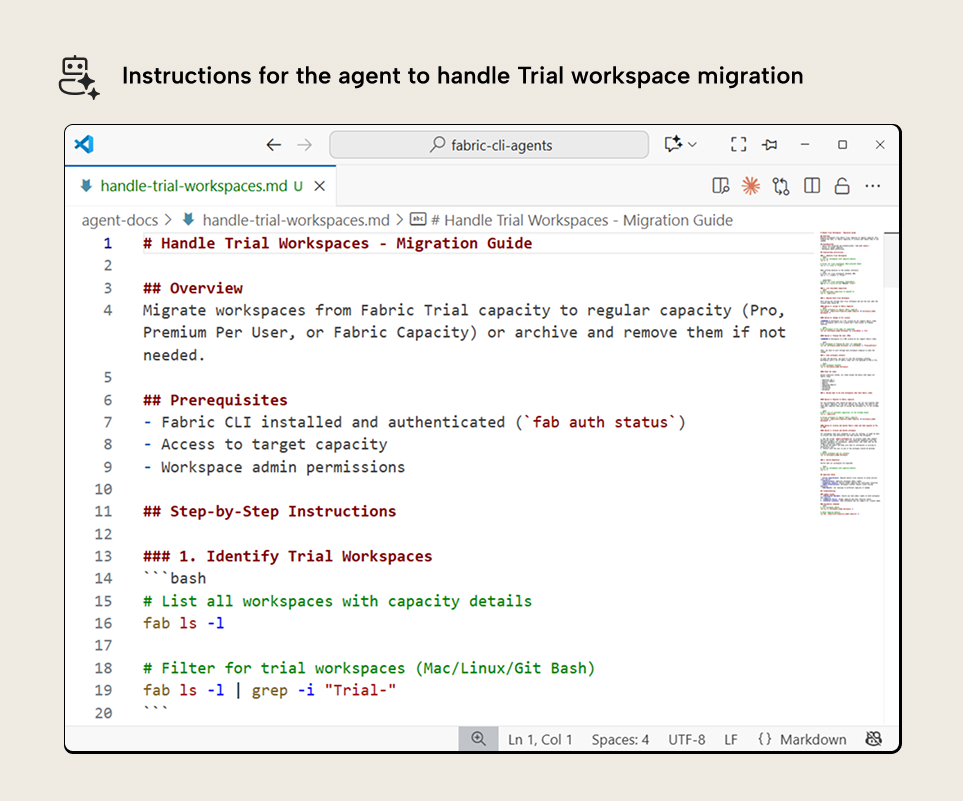

After creating these lists, we must create a re-usable prompt to explain what we want the agents to do. The goal is for the agents to surface the workspace contents, while we decide whether to migrate or archive each workspace. We just need to make the decision; it takes the agents only seconds to retrieve the relevant information or perform the migration themselves.

Basically, this re-usable prompt is a series of stepwise instructions explaining how to investigate, migrate, and archive/delete workspaces. These instructions include the steps, but also the technical commands with the Fabric CLI. They might also include references to other supporting tools or scripts for the agents to use. As you can see, the instructions don't need to be long or detailed (and don't take long to write). They're just sufficient for the agent to perform the task:

These instructions create consistency between the agents and help ensure that they use the same commands. Creating re-usable, composable prompts like this is an effective and common pattern for using coding agents.

Once we have the prompt, we can start each agent on the task, supervising and instructing them when needed. The result is that we have four separate workers who:

- Investigate each workspace, surfacing its contents and asking us what to do.

- Perform migration or archival / removal of the workspace based on our answer.

- Handle any archived files before moving on to the next workspace in the list. For this example, the agent is using a script which will automatically download all definitions, metadata, and other relevant information before removing the workspace.
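The ordering in the last step matters: nothing should be removed until its archive exists. The Python below is a minimal sketch of that guard, not the script used in this example. The `export` and `remove` callables are hypothetical stand-ins for whatever performs the download and the deletion (for instance, wrappers around Fabric CLI calls), and the "at least one file exists" check is a deliberately crude assumption.

```python
from pathlib import Path
from typing import Callable


def archive_then_remove(
    workspace: str,
    archive_root: Path,
    export: Callable[[str, Path], None],  # hypothetical: writes the workspace contents into the folder
    remove: Callable[[str], None],        # hypothetical: deletes the workspace
) -> Path:
    """Export a workspace to disk, then remove it only if the export produced files."""
    target = archive_root / workspace
    target.mkdir(parents=True, exist_ok=True)
    export(workspace, target)
    # Refuse to delete anything when the archive folder is still empty.
    if not any(target.iterdir()):
        raise RuntimeError(f"No archived files found for {workspace!r}; refusing to remove it")
    remove(workspace)
    return target
```

Passing the two operations in as callables keeps the safety check separate from the tooling, so you can test it against a temporary folder before pointing it at a real tenant.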

You can see an example of this below:

Note that there's no reason to use exactly four terminals. You could do this with two or just one, if you prefer. The main benefit of the parallelization in this case is to reduce downtime spent watching the agent work. However, you could also have the agents do other, related tasks rather than working on different “sections” of the same task.

The migration in this scenario takes about fifteen minutes to complete despite a human making all decisions. The investigation and migration are effectively automated by agent workers, even when ad hoc scenarios arise, like the occasional unplanned migration to Pro workspaces or consolidating workspaces by copying then removing items.

The migration is complete, but we might want to set up a new workspace to facilitate automated monitoring of our tenant and track changes to workspaces and license modes over time. You could create the workspace, items, and their definitions entirely by using the coding agent and the Fabric or GitHub CLIs, but that goes beyond the scope of this single article. We might show you how to do that in a future article, if you are interested… just let us know.

How do I set this up?

To set this up, you need to have access to a coding agent, which usually requires a subscription (like for Claude Code or others). Many of these agentic coding tools are strictly regulated in enterprise environments, and not easy to access. However, more organizations are supporting these tools, which have special enterprise subscriptions and support, including options that address concerns about data security and privacy. So, it's potentially worth exploring the possibility with your provider-of-choice.

Furthermore, as we mentioned above, you need to invest some time and effort to establish and curate context for the agent. Since the Fabric CLI isn't present in LLM training data, the agent won't know how to use it out-of-the-box. Instead, you need to provide some specific instructions and examples, and link to the documentation. This is especially important for more specific or complicated tasks.

It's important to note that the Fabric CLI has many conventions and commands that resemble other CLIs and terminal commands. This is both advantageous and disadvantageous, because it means that the agent can recognize and run with various patterns, but can also get confused with identically named commands that are in other CLI tools… but that work differently.

Basically, this amounts to curating a set of markdown files with re-usable prompts and documentation specific to your use-cases and scenarios. Do not rely on AI or agents to create these markdown files, either, as the result will often be too verbose or unspecific to get good results.

Finally, you want to make sure that you set up diligent and effective guardrails to prevent the agent from mistakenly enacting destructive operations, like deleting or overwriting existing items or entire workspaces. One way to do this is to pre-emptively set up a permissions file that sets all potentially destructive commands (like rm or set) to “always ask”. You can see an example of this below for Claude Code:
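A minimal sketch of such a permissions file follows. It assumes that Claude Code reads a project-level `.claude/settings.json` whose `permissions.ask` list holds rules of the form `Bash(<command prefix>:*)`, and that the Fabric CLI executable is named `fab`. The Python below only generates the JSON, and `build_settings` is a name made up for this example. Check both assumptions against the current Claude Code documentation before relying on it.

```python
import json


def build_settings(ask_commands=("rm", "set"), cli="fab"):
    """Build a settings dict that makes the agent ask before running the given subcommands.

    Assumptions: `cli` is the Fabric CLI executable name, and the rule syntax
    `Bash(<prefix>:*)` matches any shell command starting with that prefix.
    """
    rules = [f"Bash({cli} {cmd}:*)" for cmd in ask_commands]
    return {"permissions": {"ask": rules}}


# Print the JSON so it can be reviewed and saved (for example, as .claude/settings.json).
print(json.dumps(build_settings(), indent=2))
```

Generating the file from a short list of subcommands makes it easy to extend the "always ask" set as you discover other commands that can overwrite or delete things in your own workflows.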

When and why is this not useful?

This agentic approach works best with tasks that have a lot of repetition, and which would be tedious or difficult to do manually. It also works well when there are specific, focused tasks that you can delegate and supervise. While some repetitious tasks can be scripted or automated without AI, in this scenario, there are ad hoc decisions that must be made. As such, using the AI makes a lot of sense, since the human can make ad hoc requests or adjustments depending on the information they see.

In contrast, agentic approaches do not work well when there are complex, multi-step tasks that require regular input, iteration, or intervention. For instance, it would be a waste of your time to try and create a semantic model or report from scratch with an agent that uses the Fabric CLI. Instead, focus on specific things that the agent can do to help you, such as:

- Investigating and troubleshooting the refresh history of a semantic model, identifying possible bottlenecks in the Power Query (M) code of partition expressions in Import models.

- Facilitating deployment and testing of a semantic model from a local environment or a remote repository to a Fabric workspace.

- Structuring or organizing workspaces, like separating models and reports into their own folders, workspaces, and (via separate workspaces) deployment pipelines.

Furthermore, you should of course avoid using these agentic approaches in high-risk or business-critical scenarios. Use them to augment your workflows and capabilities, rather than trying to replace what already works today.