Borp is now feeling far more comfortable finding their way around Databricks. They understand the requirements for their business users, has a good plan for the semantic model they want to build first, and they understand what data they need and where to find it in Databricks.

So, they should be good to go now, right? Well, not quite. There are still a couple of bits they should make sure are set up before they get going.

SQL Warehouse

In the world of data lakehouses you’ll often hear the term “separation of storage and compute” bandied about. This means that the storage of data in files, is kept separate from the compute engine you will use to query this data. This gives you freedom of choice in terms of what engine you use and for which tasks.

Databricks has a few different compute types built into it. Clusters are generally used for data engineering and data science work, and these can be created with different configurations depending on what they are going to be used for.

But the SQL Warehouse compute type is the one that is optimised for use with BI workloads and is the recommended engine for working with Power BI.

Before building a semantic model, you should ensure that the SQL Warehouse (or perhaps Warehouses, as you can have more than one set up) has a suitable configuration.

There are 3 things to think about:

- The type of SQL Warehouse. This can be Serverless, Pro or Classic

- The cluster sizes in the SQL Warehouse

- The number of clusters.

Warehouse type

Serverless SQL Warehouses are provisioned on demand. Whilst to the naked eye the cost of these warehouses might be more, the fact they are ephemeral and spin up and down rapidly means that often they require less uptime overall, which offsets this cost. Serverless is the most flexible and feature rich option, including features such as the Photon query engine, Predictive IO and Intelligent Workload Management. It is currently the default for new Databricks workspaces.

If use of your warehouse use is going to be constant and more predictable, you may wish to opt for a Pro warehouse. Whilst these are cheaper to run per hour, the longer spin up times mean they tend to be left on for longer, which drives up their cost. These warehouses are less suited to on-demand or inconsistent usage patterns.

Classic SQL Warehouses are a more basic option with only entry level Databricks SQL features. Whilst these can be ok for rudimentary exploratory work, it provides only entry-level performance.

Full documentation on SQL Warehouse types is available here.

Cluster size

Cluster size refers to the size of each node used in the cluster. Databricks takes a “t-shirt” size approach, meaning you can select sizes from 2X-Small all the way up to 4X-Large. The size you choose should be dependent on the volume of data you need to query, the complexity of the queries and the level of query latency you expect.

Bigger volumes of data typically require larger clusters, whilst increasing your cluster size can also reduce latency.

Scaling

The SpaceParts SQL Warehouse

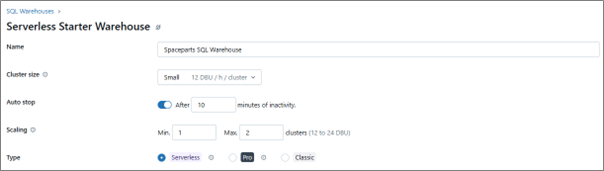

Having discussed it with the data engineering team, Borp decides that a Serverless SQL Warehouse with a cluster size of Small and scaling of minimum 1 cluster, maximum 2 should fit their needs.

They don’t need the warehouse to be “always on” as he will be importing data into Power BI once a day for use by his end users, so serverless feels like a good choice. The data volume for his first model will be relatively modest, with his largest fact table being 17 million rows so SMALL feels like an okay size to start with, and they won’t need much concurrency – the only “user” of the connection will be their Power BI semantic model.

Any of these configuration options can be changed at any point too, so Borp knows that they can monitor the performance of his semantic model refreshes and revisit these settings if needed.

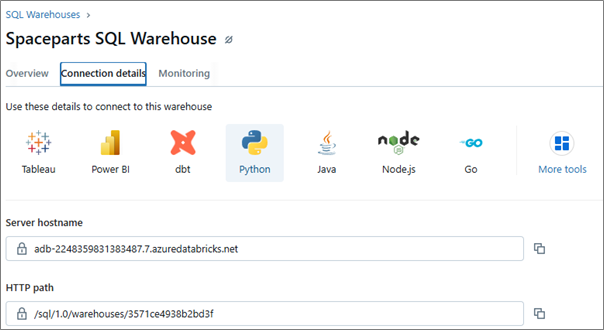

Connection details

Simba Spark ODBC driver

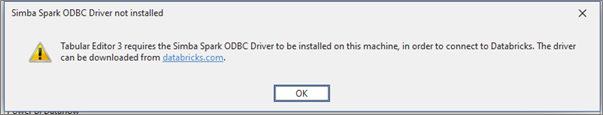

In order for Tabular Editor 3 to connect with Databricks, an extra piece of software needs to be installed, the Simba Spark ODBC driver. Installation of this software is what allows you to browse tables and preview data in Databricks from inside Tabular Editor.

The driver is available to download at https://www.databricks.com/spark/odbc-drivers-download/.

Tabular Editor 3 is only available on Windows operating system, so you’ll want to download and install the Windows version of the driver. This is a Databricks approved driver and is used for connectivity between Databricks and several other major pieces of software.

If you do not have permissions to install on your machine, you may need assistance from your IT department. Further documentation relating to the driver is available here.

Next steps

Borp’s Databricks SQL Warehouse is configured, they have connection details, and the Simba Spark ODBC driver is also installed on their computer alongside Tabular Editor 3.

In the next part of this series, we’ll look at the steps you would take to build your first semantic model on Databricks, as well as provide some handy C# scripts to help take your semantic modelling on Databricks to the next level.

.jpg?width=50&height=50&name=Johnny%20Headshot%2005%2013%20Low%20Res%20(10%20of%2013).jpg)