Borp is feeling quite confident in finding their way around Databricks and they’ve done all the pre-requisite steps required to start using Tabular Editor 3 with Databricks.

Now, it's time to get their hands dirty and start building their first semantic model!

Tabular Compability

It's first worth noting that only Tabular Editor 3 includes the Databricks data source connection type. It's still possible to use Tabular Editor 2 with Databricks, but you'll either need to hand code (or somehow script) the import of tables and columns, and you'll miss out on features such as being able to browse and preview tables. An alternative is to import tables using Power BI Desktop and then use Tabular Editor 2 for further development once tables are set up.

But then of course you're still missing out on all the other great features and benefits of Tabular Editor 3, such as the live development environment, DAX Debugger, and macro recorder (just to name a few).

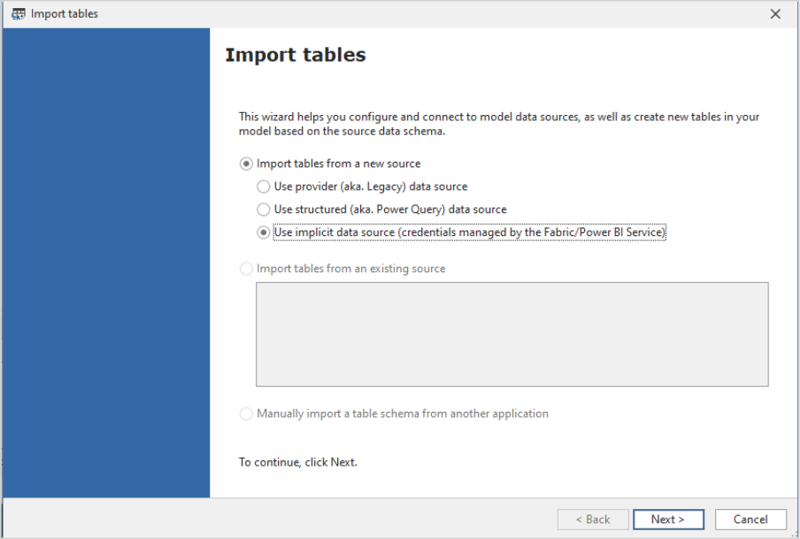

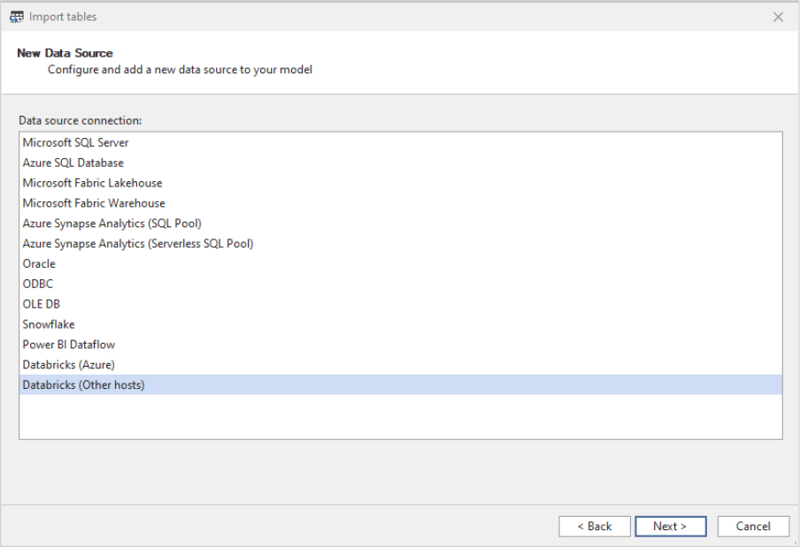

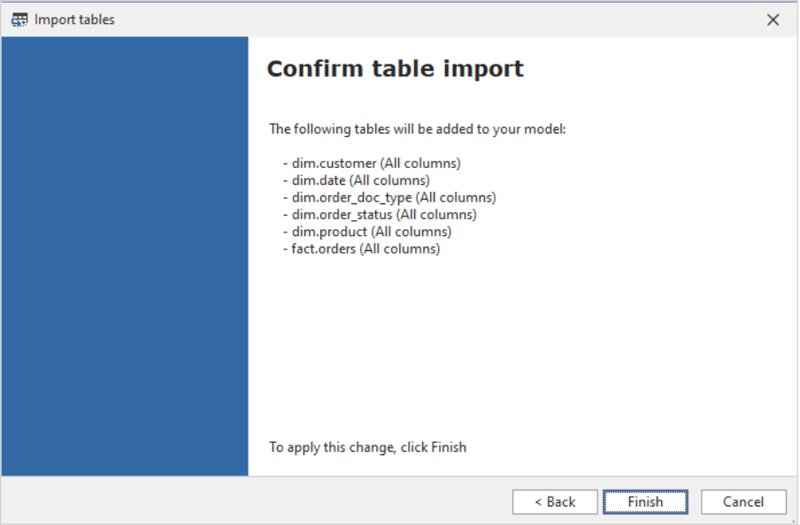

Importing your tables

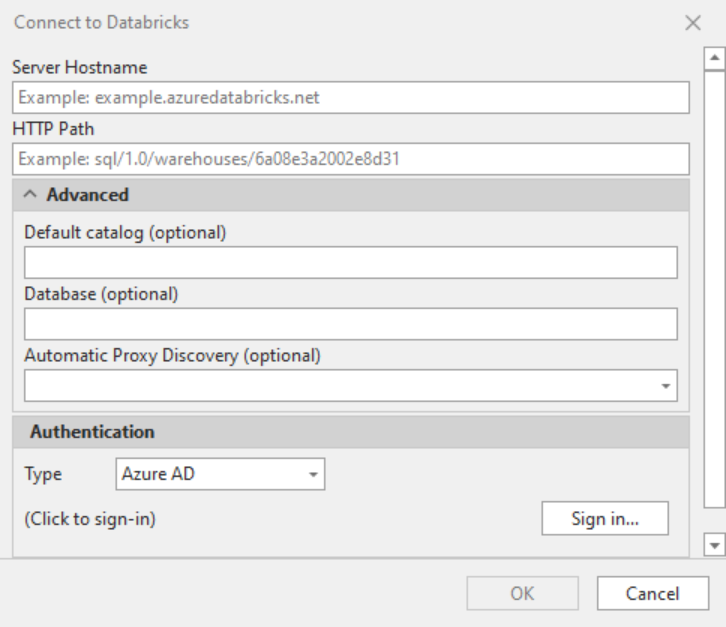

You'll also need to choose an authentication method. Full documentation on how to authenticate Tabular Editor 3 with Databricks is available here. Whilst doing development work, using single sign-on with Azure AD (now called Microsoft Entra ID) is a convenient option, but this may not be set up for non-Azure services.

An alternative is to use an Access Token. An Access Token (labelled as Personal Access Token in Databricks) is a short lived (default of 90 days, but it can be changed) authentication method, that can be generated from the connection details page of a SQL Warehouse.

.png?width=313&height=96&name=GetImage%20(3).png)

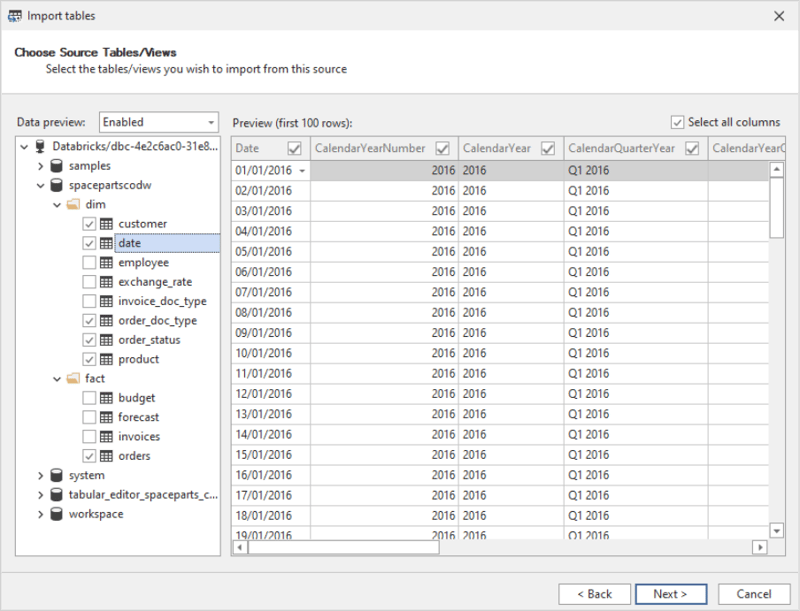

The next page of the wizard will only allow the option of 'Choose from a list of available tables and views', though here you can choose to use DirectQuery if you wish.

After this, you'll be directed to a dialogue pane that allows you to browse Unity Catalog and select any tables or view you wish to include in your semantic model.

Developing your model

From here, you can develop your semantic model just like you would with any other data source.

However, would this really be a Tabular Editor blog without giving you an extra helping hand with some scripts to help you turbo charge your model development?

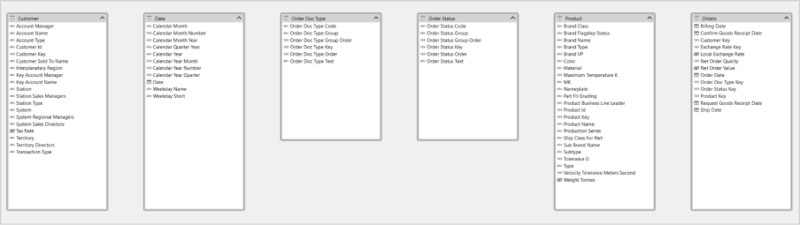

Friendly naming

In Databricks Unity Catalog it's not possible to use capital letters in table names. A common way to make tables names more readable without using capital letters is to adopt snake_case. Also, whilst column names can contain spaces, it is often advised against as these can be cumbersome to work with, meaning data engineers most often use snake_case, camelCase or PascalCase.

However, we want users of our semantic model to see business-friendly names in our model. This script will loop through all tables in the model and make sure friendly, Proper Case formatting is applied.

Whilst doing this, it'll also apply some best practice recommendations, setting default summarization for all columns to none and also setting format strings for DateTime type fields (this script is set up to use format 'yyyy-mm-dd' but you can alter the script at line 61 if you prefer)

This script isn't strictly for use with only Databricks – use it with any model you like, regardless of data source, but it has been built with some of the limitations of Databricks in mind.

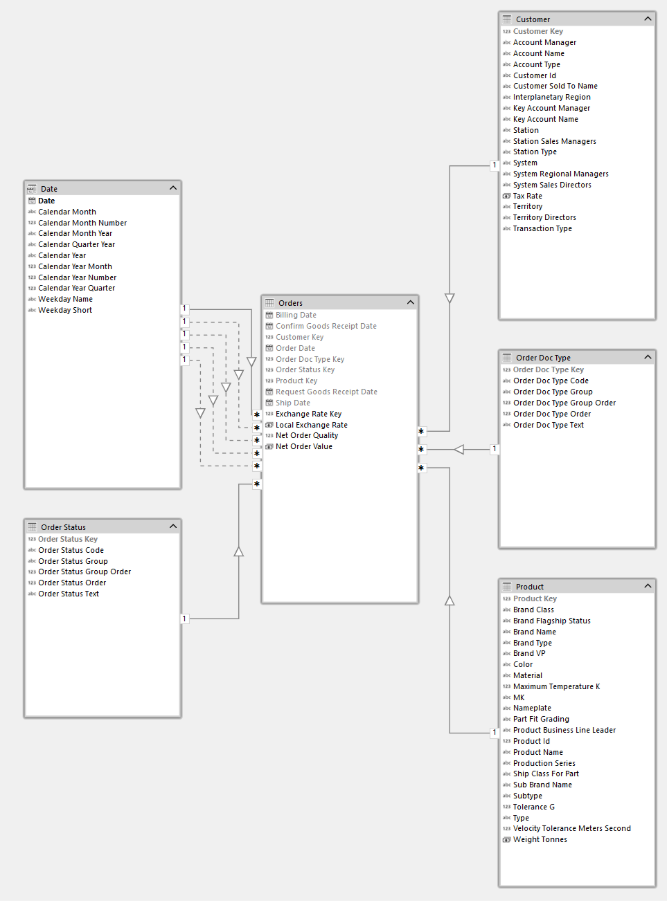

Importing relationships

If you remember back to part 3 of our Databricks series, you may recall that we can define primary and foreign keys in Unity Catalog, and there's even an ERD view that you can use to see these relationships more graphically.

Rather than manually creating these relationships in Tabular Editor, wouldn't it be great if we could re-use the information in Unity Catalog to automate this? Well, we've got your back.

Using this script, you can detect Foreign Keys on a table and then create relationships back to the relevant tables where they are present in the model. You must select the tables with foreign keys to do this (typically a fact table, and in our example the table fact.orders)

When you execute the script, you'll be prompted for a Databricks Personal Access Token. This is so that the script can authenticate back to Databricks and retrieve the relevant information from Unity Catalog. Once completed, you'll be prompted with the number of new relationships created to your selected table. You can run the script as many times as you like. It will only ever create new relationships where it finds they are missing from the model.

For role playing dimensions, where the same table might have multiple foreign keys relating to a single table, the first relationship detected will be the active one, and all other subsequent relationships are created as inactive. You may want to revisit these particular relationships to make sure the correct relationship is active.

Whilst importing the relationships, the script will also hide primary and foreign keys and set IsAvailableInMDX to false (with the exception of DateTime type primary keys). Primary keys are also marked as IsKey = TRUE in the semantic model.

The script utilises the information_schema tables in Unity Catalog to retrieve this information, so you may need to double check with your Databricks administrator to make sure you have permission to query these tables.

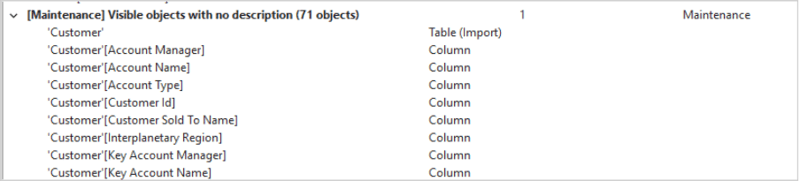

Metadata descriptions

Adding descriptions to all your tables and columns can be a somewhat tedious and thankless task. There are some nifty scripts out there that can use AI to do this job for you. But hang on a sec... Back in Part 3, didn't we see that all these descriptions were all available in Unity Catalog already? Is there no way we can recycle those?

Well... there is now!

This script loops through all selected tables (you can select more than one by holding down the shift or ctrl key) and retrieves the descriptions for both the tables and all its columns from Unity Catalog and brings them into your Semantic Model.

Again, you'll be prompted for a Databricks Personal Access Token to run the script, and this script also relies on your having access to query the information_schema. Whilst running the script you'll get a notification for each selected table to let you know which descriptions have been updated and how many updates have taken place.

Deploying the semantic model

There are many different paths to deploying your semantic model. You can do it manually from Tabular Editor or you can create automated CI/CD processes using tools such as Azure Dev Ops and GitHub.

The OG pattern was outlined in a series of blogs by our very own Daniel Otykier, and my colleague Liping Huang recently presented on the subject, specifically using Databricks, at 2025's edition of SQLBits – I'm looking forward to the video of that session dropping in the near future.

Regardless of the process Borp decides to follow, there's one last thing it's worth mentioning, and that's setting up credentials once the semantic model has been published.

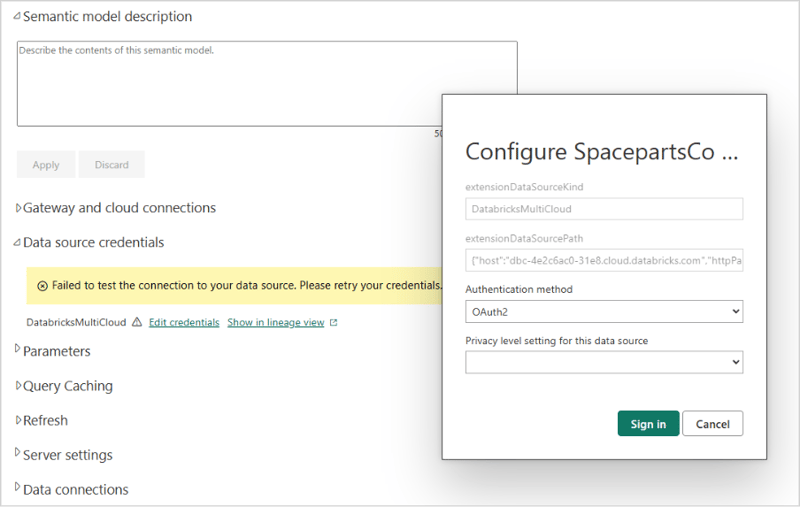

Once deployed, you'll need to specify the authentication type and credentials.

If your Databricks instance is on a private network, this may involve setting up a gateway. And you might also decide to set up the connection using cloud connections, rather than directly on the semantic model.

Selecting OAuth2 authentication here will tie the semantic model to the user who sets up the credentials, which means if they leave the organisation, authentication is likely to start failing.

Instead, it's better to use the 'Key' option. What the Key option actually is, is an Access Token. Rather than use a personal access token, it's more robust to use an access token associated with a Databricks service principal. You should speak with your Databricks administrator about getting this set up.

Once done, you can set up and configure your model refresh as you see fit.

That's all folks!

A few weeks ago, the world of Databricks was big and scary for Borp. However, over time they've realized there's nothing to be afraid of. Many of the skills he learned using other relational databases and data warehouse products are very transferrable to Databricks, and once up and running with how to find their way around, they've really relished the chance to work with the new Spaceparts Co data platform.

They have a good understanding of the concepts behind Databricks and Lakehouses, they're comfortable exploring data in Databricks using features such as Unity Catalog and Databricks SQL, and once they'd configured a SQL Warehouse for their use and set up additionally software to allow them to use Tabular Editor with Databricks they were well on their way to building their first Databricks semantic model.

Armed with some new C# scripts that'll help them really lean into some of the game changing Databricks features, Borp is now in a fantastic place to build better data models faster.

And if you've been following along, hopefully you are now too!

.jpg?width=50&height=50&name=Johnny%20Headshot%2005%2013%20Low%20Res%20(10%20of%2013).jpg)