Data security is a sensitive and important topic for every tool, not just Power BI. You need to make sure that unauthorized individuals can’t access information that isn't meant for them, both inside and outside your organization. When data leaves or “leaks” out of your organization, it’s called data exfiltration. This can be a big problem, with big consequences!

You want to avoid data exfiltration, especially when you handle sensitive information or must adhere to strict security and compliance guidelines. There are some obvious things that you should do to protect your Power BI data, which (we hope) all Power BI teams are aware of:

- Avoid sharing reports via publish-to-web, which makes them available on the public internet. You can find many examples of this by searching Google for site:app.powerbi.com “margin” or other search terms. Alongside the many portfolio and sample reports, you will likely find real reports from people who made this mistake.

- Follow the principle of least privilege: only give people the lowest level of access that lets them do what they need to do and see what they need to see. Giving business users the “Member” or “Admin” workspace role just so that they can view reports is asking for trouble. Most users only need the Viewer role.

- Avoid over-sharing items with people who should not access them and regularly audit both access (who can access what) and permissions (what they can do with it).

- Don't neglect data security when you share a semantic model or a connected report. Data security for semantic models lets you apply role-based access with either row-level security or object-level security. Row-level security ensures that people only see the rows they are authorized to see, while object-level security (sometimes called column-level security) secures tables and columns, so visuals or queries that attempt to access a secured object return errors. Note that data security exists on top of model-level permissions.

- Monitor Export-to-Excel and find ways to educate users about how to work with and analyze data in Excel responsibly. You should also be aware of exports from paginated reports.

- Consider sensitivity labels for scenarios where you have higher sensitivity or compliance requirements. Sensitivity labels in Power BI can encrypt the contents of Power BI Desktop files and exports like Excel, PowerPoint, or PDF. This is useful because otherwise users could freely distribute these files and thereby share their contents, which can include sensitive information. However, sensitivity labels are incompatible with many Power BI features, such as Git integration and the PBIP format. You also cannot programmatically modify files that have sensitivity labels (including with AI tools and MCP servers) unless you simulate UI interactions.
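Regular access audits are easier to repeat if you script them. The following minimal Python sketch assumes that you have already retrieved workspace role assignments as a list of dictionaries (for example, from an admin API call or a manual export). The field names `name`, `users`, `emailAddress`, `identifier`, and `groupUserAccessRight` are assumptions loosely modelled on Power BI admin API responses, and `find_elevated_access` and the allow list are hypothetical. It flags anyone who holds the Admin or Member role without being on an explicit list of expected editors.

```python
from dataclasses import dataclass

# Roles that grant far more than viewing (assumed role names).
ELEVATED_ROLES = {"Admin", "Member"}


@dataclass(frozen=True)
class Finding:
    workspace: str
    principal: str
    role: str


def find_elevated_access(workspaces, allowed_editors):
    """Flag Admin/Member assignments for principals not on the allow list.

    `workspaces` is a hypothetical list of dicts shaped like
    {"name": ..., "users": [{"emailAddress": ..., "groupUserAccessRight": ...}]}.
    """
    allowed = {p.lower() for p in allowed_editors}
    findings = []
    for workspace in workspaces:
        for user in workspace.get("users", []):
            role = user.get("groupUserAccessRight", "")
            # Groups and service principals may lack an email, so fall back to an identifier.
            principal = (user.get("emailAddress") or user.get("identifier") or "").lower()
            if role in ELEVATED_ROLES and principal not in allowed:
                findings.append(Finding(workspace["name"], principal, role))
    return findings


# Usage with made-up data:
workspaces = [
    {"name": "Sales", "users": [
        {"emailAddress": "Ana@contoso.com", "groupUserAccessRight": "Admin"},
        {"emailAddress": "bo@contoso.com", "groupUserAccessRight": "Member"},
        {"emailAddress": "cy@contoso.com", "groupUserAccessRight": "Viewer"},
    ]},
]
findings = find_elevated_access(workspaces, allowed_editors={"ana@contoso.com"})
for finding in findings:
    print(finding)  # Finding(workspace='Sales', principal='bo@contoso.com', role='Member')
```

The allow list reflects that some people legitimately need elevated roles; the point of the check is to make every such assignment a deliberate one.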

To reiterate, hopefully most of you already know and follow the above practices and principles where they apply to you. These are simple ways to ensure data security and avoid exfiltration. However, Power BI and Fabric are complicated; there’s a lot to consider, and your data might surface in some surprising ways.

In this article, we give a short overview of five different ways that this can affect you.

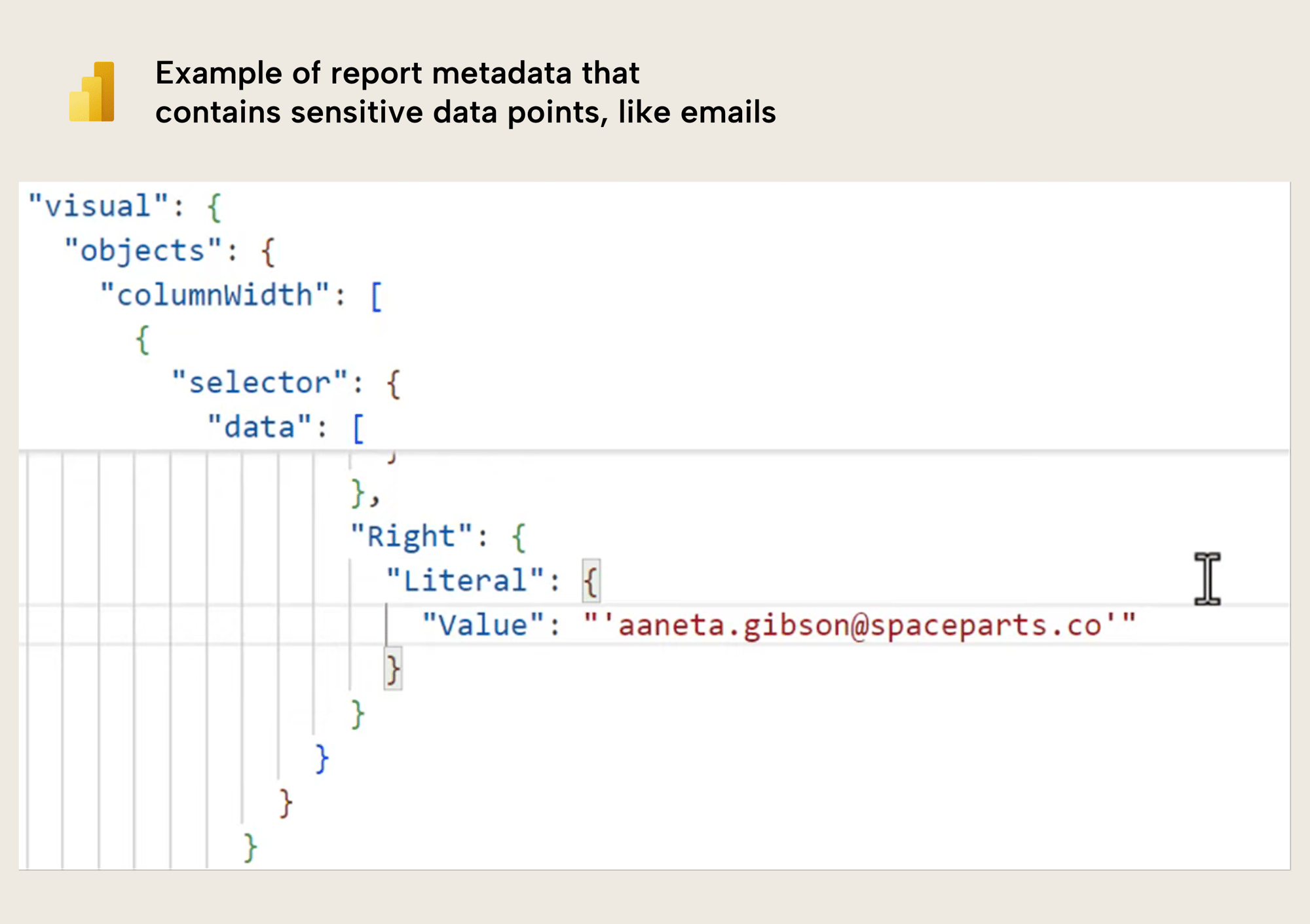

#1: Data points in report metadata

Power BI saves some data points directly in the report metadata, for example values selected in filters and slicers. This happens irrespective of how you save the files: PBIX, PBIP, PBIR, PBIT… any format exhibits this behaviour, because it is by design. Unfortunately, that means that anyone who has your Power BI files can view potentially sensitive information, even if they do not have access to the model.

What’s worse, in rare cases the visual configuration, including these data points, can persist in report metadata even when you disconnect a report or rebind it to another semantic model. This means that Power BI files shared by you, a consultant, or others could contain data points from past semantic models that the report was once connected to. Unfortunately, if you download some publicly available templates, you will likely see examples of this.

That said, the data points saved in the report metadata are typically innocuous on their own. Still, this can be problematic in the following scenarios:

- When you save reports to source control or cloud storage.

- Any scenario where you share Power BI report files in any format.

- Any scenario where you export Power BI report files in any format.

The only way to prevent this behaviour is to use sensitivity labels.

#2: Having sensitive information in a publish-to-web model

Let’s say that you have a report that you want to publish to the web and make publicly available. The model contains data that you want to disclose, but also sensitive or personal information that you do not. So, you build a report that only includes the non-sensitive data. The question is… can someone “bypass” the report and view the underlying model data?

Yes, they can. If you share your report via publish-to-web, someone can inspect the report and retrieve enough information to query the model via the public APIs. This means that they can access any information in your model, and with AI assistance, doing so is trivial. You can see a video demonstration of this here, which uses a Python notebook to extract data from a semantic model that isn’t exposed in the publish-to-web report.

To be clear: this is not a security flaw; this is also by design. If you share a report via publish-to-web, then you must be certain that the underlying model has no sensitive information.

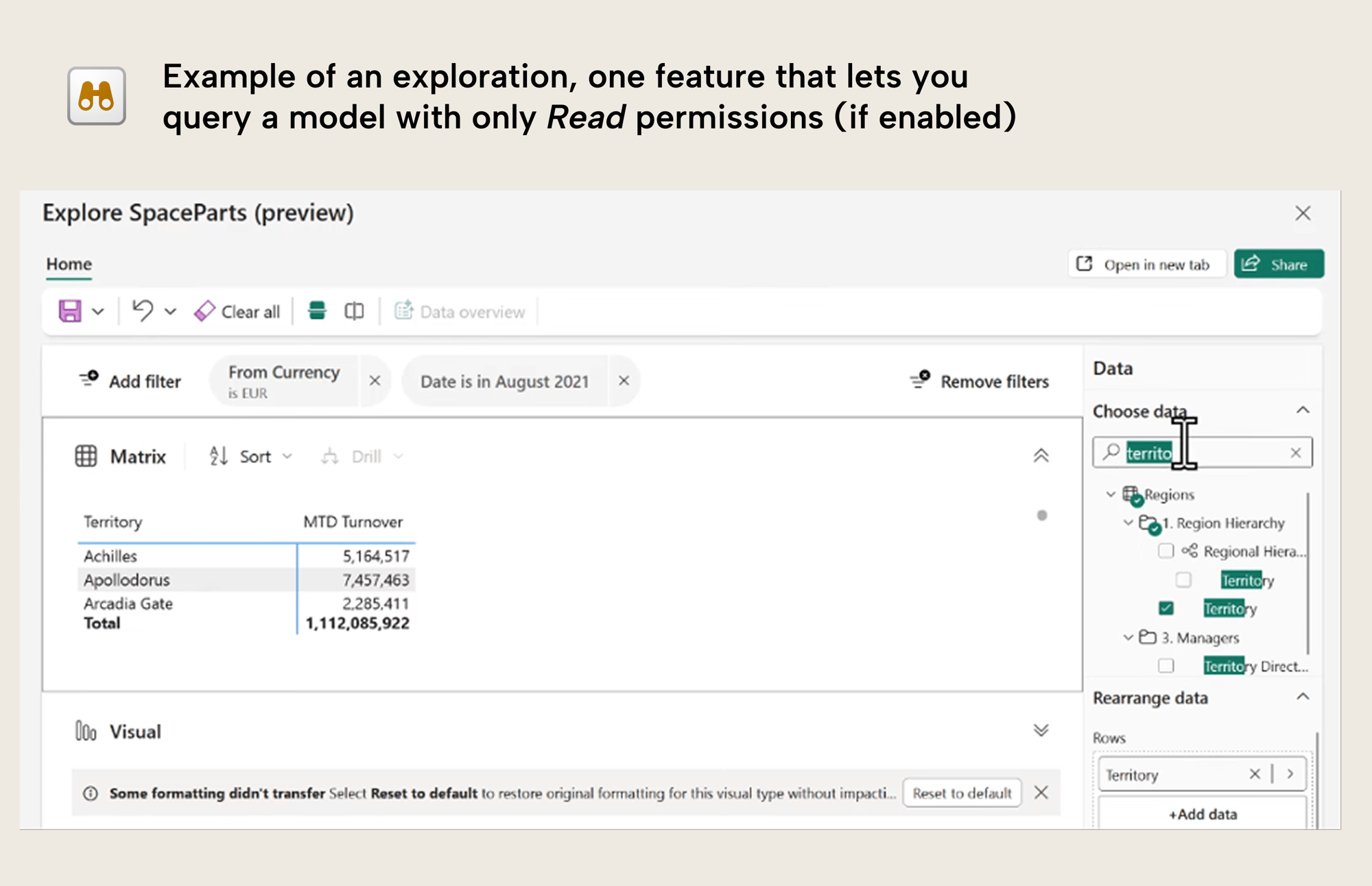

#3: Assuming report readers can’t view model data

Related to the previous point, a common assumption is that if someone only has read access to a report, they can’t view the underlying model data, only the data shown in the report. Unfortunately, this is false! This can become especially problematic when you share data with external (guest) users.

There are several Power BI features which allow a user to easily retrieve the data:

- Personalize visuals: If you enable personalize visuals in a report, users can customize its visuals with other fields from the model, which queries that model data for the adjusted visual.

- Explorations: Since early 2025, if the tenant setting is enabled, then users can use explorations on data models that they only have read access to.

- Copilot: If you have an F2 or larger SKU and Copilot is enabled, users can use Copilot from a report to ask questions about the data in the model.

The following image shows an example of an exploration opened by a viewer with only read permissions:

Note that all of these techniques still respect data security and permissions. However, model authors and developers sometimes mistakenly think that giving someone read access to a report does not give them read access to the model; this is incorrect. A lack of Build permission does not restrict access to data; it restricts access to certain UI elements and tools that users can use with that data.

Users can also use the same method as #2 to retrieve the data programmatically.

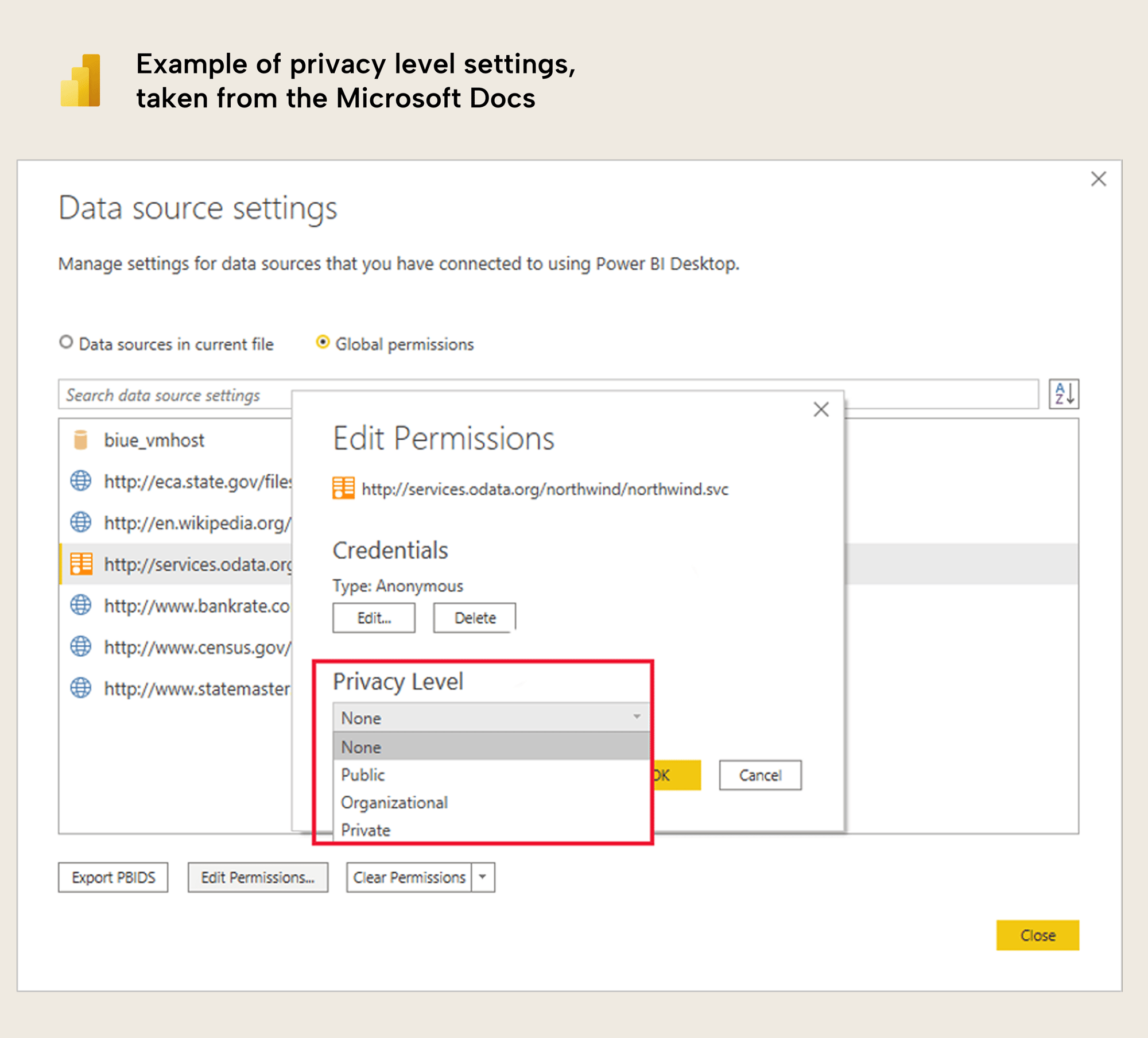

#4: Privacy levels and query folding

Image from Microsoft Documentation: Power BI Desktop privacy levels

Privacy levels have always been a point of confusion and contention, especially because certain scenarios and features can result in errors with certain privacy levels. Unfortunately, the guidance that you will find online for these scenarios often tells you to set the privacy level to None or to Public. However, this can result in “accidental data transfer”, which is described here: information from a private source is mistakenly sent “…outside of a trusted scope” during query folding, for example when values from a private file are used to filter a query that folds to a database or web source. That does not mean that you should never change the privacy level; this might still be a valid option, depending on your data sources and scenario.

Chris Webb has a good series of blog posts about this topic from 2017 that is still very relevant and useful.

#5: Exporting outputs from a notebook

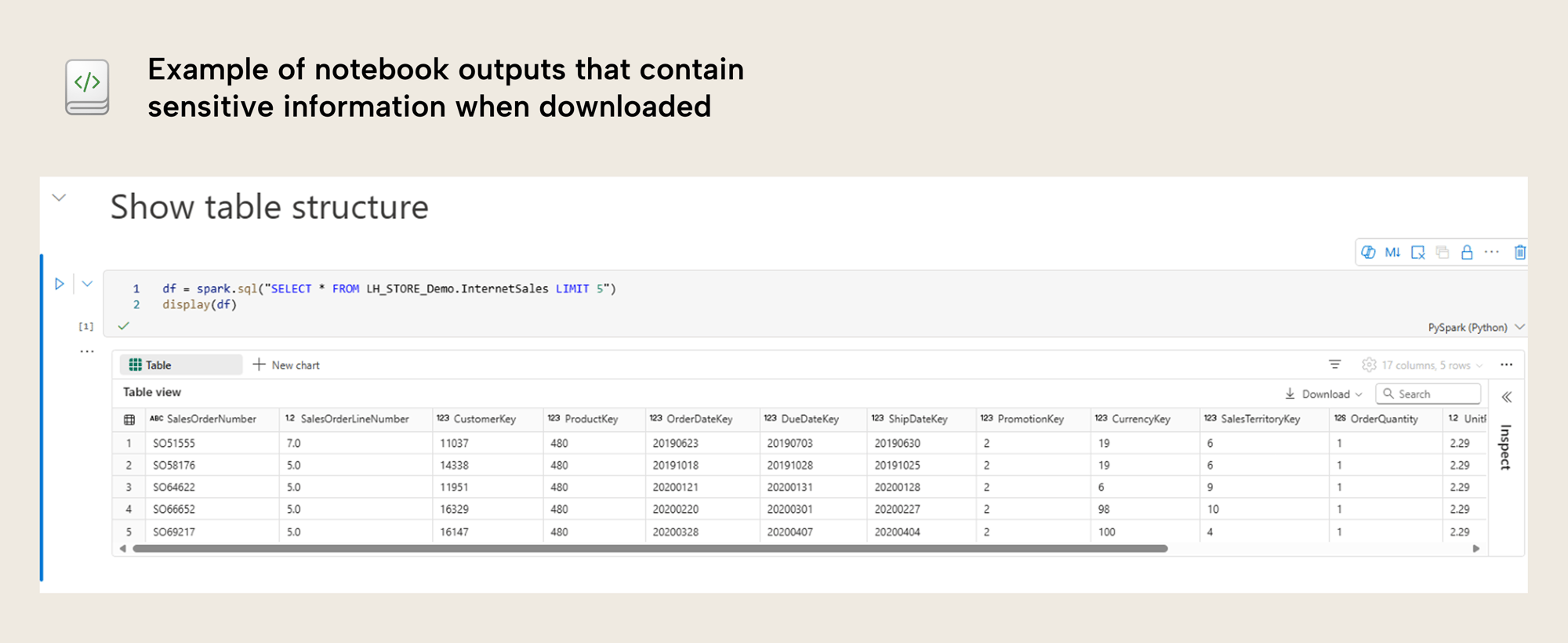

Exporting Notebooks in Microsoft Fabric is a common way to reuse templates, move work between environments, or share examples with others. But there's an important risk to be aware of: your Notebook export may contain data, not just code.

When you export a Notebook as a .ipynb file, any printed output from previous runs (such as df.show() or display(df)) is included in the file. This means that even small data previews like the first few rows of a dataset are saved directly in the exported file.

This can be especially risky when you work with sensitive or confidential data. Because there is no warning up front, you might think that you're sharing only logic or structure, when you could in fact be unintentionally exposing information.

Even if sensitivity labels are applied in Fabric, they are removed during export to .ipynb files. When someone imports the Notebook into another tenant or workspace, those printed results are visible immediately.

To avoid this, always clear outputs before exporting, or consider using a different file format, such as the Python (.py) export option. This option does not include data, but it is more limited in functionality: whereas .ipynb files keep the notebook structure split into multiple code cells, this formatting is removed when you use alternative formats.
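If a Notebook has already been exported, you can also strip the outputs from the .ipynb file itself. An .ipynb file is JSON in the Jupyter nbformat layout: a top-level `cells` list in which code cells carry `outputs` and `execution_count`. The following minimal Python sketch, using only the standard library, empties both for every code cell. The function names are hypothetical, and the sketch does not inspect other places where a notebook could hold data, such as notebook-level metadata.

```python
import json


def clear_outputs(notebook: dict) -> int:
    """Empty outputs and execution counts of code cells in place; return outputs removed."""
    removed = 0
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") == "code":
            removed += len(cell.get("outputs", []))
            cell["outputs"] = []
            cell["execution_count"] = None
    return removed


def clear_outputs_in_file(src_path: str, dst_path: str) -> int:
    """Read an .ipynb file, clear its outputs, and write the result to dst_path."""
    with open(src_path, encoding="utf-8") as f:
        notebook = json.load(f)
    removed = clear_outputs(notebook)
    with open(dst_path, "w", encoding="utf-8") as f:
        json.dump(notebook, f, indent=1, ensure_ascii=False)
        f.write("\n")
    return removed
```

If Jupyter is installed, `jupyter nbconvert --clear-output --inplace notebook.ipynb` achieves the same result.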

In conclusion

Protecting your data is essential when you use BI tools and build semantic models. This can be a complex task, because there are many factors to consider. In this article, we shared five unexpected ways that you might be sharing your data outside of your organization without realizing it. Hopefully, you found this helpful!