Key Takeaways

- This article is part of a series. Start with part one, here.

- AI agents can write code and use command-line tools to manage semantic models. The Tabular Editor 2 CLI lets the agent modify and query the model, as well as run the best practice analyzer. The Fabric CLI lets the agent use any of the Fabric APIs, directly.

- Programmatic interfaces are maximally flexible and good for scripted, deterministic outcomes. With this approach, you can do anything that can be specified in code. Once you have a script or command that works, you can add it to a repository of examples or convert it to a macro to easily re-use in future scenarios. This supports deterministic outcomes, and it also means that your agent keeps improving and expanding its capabilities, because it has these examples handy to reference.

- However, this approach is complex and intensive. You have to invest a lot of effort in creating good instructions and collecting example scripts to get started. Without this, agents hallucinate a lot, and waste a lot of time. Also, scripts can be expensive on tokens and less effective for exploring or searching a model than other approaches.

- Use CLI tools on local metadata files or remote models with Git integration enabled. This ensures that you have a way to track and manage changes, and revert if the agent makes a mistake. We recommend isolating agentic development to its own workspace separate from human development, since agentic development is still immature and mistakes are common.

This summary is produced by the author, and not by AI.

Using command-line tools to modify semantic models

This series teaches you about the different approaches to using AI agents to facilitate changes to a semantic model. A semantic model agent has tools that let it read, query, and write changes to a semantic model. Using agents this way is called agentic development, and it can be a useful way to augment traditional development tools and workflows in certain scenarios.

There are several different ways that AI can make changes to a semantic model, including modifying metadata files, using MCP servers, or writing code. Each approach has pros and cons, and if you use AI to make changes to semantic models, then it’s likely that you’ll use all three.

This article is about using the Tabular Editor 2 command-line interface (CLI) to execute C# scripts to change a semantic model and using the Fabric CLI to manage it.

What is a command-line tool?

We briefly introduced command-line tools in a previous article. In short, these tools provide an alternative way for you to interact with software in a terminal by writing and executing commands. This command-line interface is an alternative to a graphical user interface (GUI), and it’s very suitable for agents.

In this article, we discuss two command-line tools, although there are many more that you might use with Power BI or Fabric:

- Tabular Editor 2 CLI, which you use to help develop and manage semantic models. This comes with Tabular Editor 2; if you have TE2, then you have its CLI.

- Fabric CLI, which you use to get information from or take actions in Fabric from the command-line. You have to install this in Python with the ms-fabric-cli package.

Here’s an example of an agent (Claude Code) using the Tabular Editor 2 CLI to modify a semantic model open in Power BI Desktop:

This example is from the first article in this series, and it shows the coding agent working on a local model open in Power BI Desktop. It refactors a moderately-sized model to use a DAX function (the error syntax is due to an issue in Power BI). We’ll show a few more examples, later, but let’s first understand what’s happening, here.

How are MCP servers and CLI tools different?

We can understand that there might be some confusion between an agent using an MCP server and an agent using a CLI tool. For instance, in the context of semantic models, both can interact programmatically with the semantic model via the TOM library. However, they do so in different ways. Here are the key functional differences:

- MCP servers provide information (tool names, descriptions, resources) that is pre-loaded into context. CLI tools don’t; the agent only knows to use them based on its training data or other custom prompt and instruction files.

- MCP servers typically let the AI execute fixed patterns of code with tools. When an AI calls a tool, it can’t modify or extend the tool call. In contrast, agents that use CLI tools typically have more flexibility. They can combine commands with others (like “pipes” in Bash). This allows the agent to extend or modify the CLI result, or to keep its output short and efficient. We’ll give an example of this, later.

- MCP servers are a specific approach to extend the capabilities of LLMs. CLI tools are designed like any software and can be used by agents or by humans. CLI tools can be made more agent-friendly by having better, more descriptive outputs and commands.

You can understand this with some additional examples, later on.
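To make the point about combining commands concrete, here is a minimal Python sketch of the same idea as a shell pipe such as `some-cli | grep pattern`. It is an illustration only: `run_and_filter` is a hypothetical helper, and the "CLI" it calls is a stand-in child process that prints a fake object listing, not real Tabular Editor or Fabric CLI output.

```python
import re
import subprocess
import sys


def run_and_filter(command, pattern):
    """Run a command and return only the output lines that match `pattern`.

    This mirrors a shell pipe: the agent sees a handful of relevant lines
    instead of the full output of the command.
    """
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    regex = re.compile(pattern)
    return [line for line in result.stdout.splitlines() if regex.search(line)]


# Stand-in for a real CLI call (assumption): a child Python process that
# prints a fake listing. Replace it with the command you want to filter.
fake_cli = [
    sys.executable,
    "-c",
    "print('Table: Date'); print('Measure: Sales Amount'); print('Measure: Margin %')",
]

measures = run_and_filter(fake_cli, r"^Measure:")
print(measures)
```

An MCP tool call returns whatever the tool was built to return. With a CLI, the agent can decide for each call how much of the output it actually wants to read.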

Scenario diagram

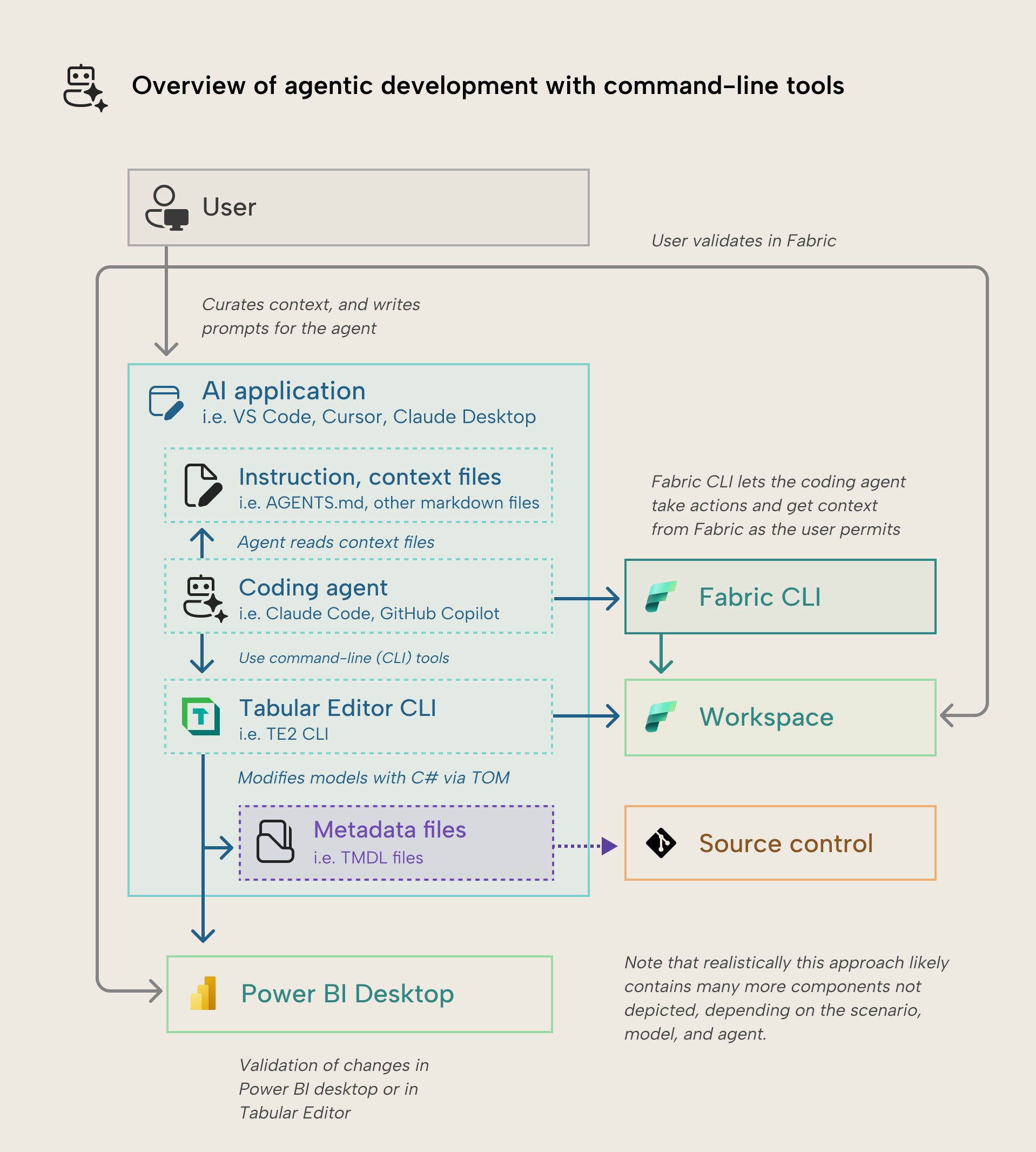

The following diagram depicts a simple overview of how you can use command-line tools to facilitate agentic development of a semantic model:

This approach is very similar to when you work with MCP servers. Here’s a walkthrough:

- The user curates context, especially regarding how the agent should use the command-line tools and examples of commands and C# scripts. This is very important.

- The user provides instructions and prompts for the coding agent to start work.

- The coding agent can work on local models in Power BI Desktop, local model metadata, or remote models in a PPU or Fabric workspace via XMLA endpoint. It makes changes via the TE2 CLI and takes actions in Fabric via the Fabric CLI.

- The user validates changes in Power BI Desktop or Tabular Editor.

- Ideally, the user ensures that they have set up source control to track, manage, and revert changes, if necessary. This is easiest when working with local metadata files, but is also possible when working with Power BI Desktop or remote models.
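The source control step in the walkthrough above can be sketched as a small wrapper that commits a checkpoint before the agent makes a change and rolls back if the change fails. This is a sketch under assumptions, not a prescribed workflow: `run_with_checkpoint` and its `run` parameter are hypothetical names, and the demo uses a recording function instead of calling Git so that it is safe to run anywhere.

```python
import subprocess


def run_with_checkpoint(step, repo_dir, run=subprocess.run):
    """Commit the current state, run `step`, and roll back if it raises.

    `run` is injectable so the Git calls can be recorded in a dry run.
    """
    run(["git", "add", "-A"], cwd=repo_dir, check=True)
    run(
        ["git", "commit", "--allow-empty", "-m", "checkpoint before agent change"],
        cwd=repo_dir,
        check=True,
    )
    try:
        return step()
    except Exception:
        # Restore tracked files and remove untracked files the step created.
        run(["git", "reset", "--hard", "HEAD"], cwd=repo_dir, check=True)
        run(["git", "clean", "-fd"], cwd=repo_dir, check=True)
        raise


# Dry run: record the Git commands instead of executing them.
recorded = []


def dry_run(command, **kwargs):
    recorded.append(" ".join(command))


def failing_step():
    # Stand-in for an agent change that turns out to be invalid.
    raise RuntimeError("script produced an invalid model")


try:
    run_with_checkpoint(failing_step, repo_dir=".", run=dry_run)
except RuntimeError:
    pass

print(recorded)
```

Note that `git reset --hard` and `git clean -fd` discard work, so a wrapper like this belongs in the isolated repository or workspace that the agent uses, not in one shared with human developers.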

Demonstrations: what this looks like

This approach works best with an agent that lives in the command line, like GitHub Copilot CLI, Claude Code, or Gemini CLI. We’ll show examples with Claude Code, since that’s the coding agent we’ve used the most to test this approach.

Example with Claude Code

Here are a few examples of an agent using the Tabular Editor 2 CLI.

First, here’s a simple example of the Date table being organized, where columns are added to display folders and have their SortByColumn properties set properly.

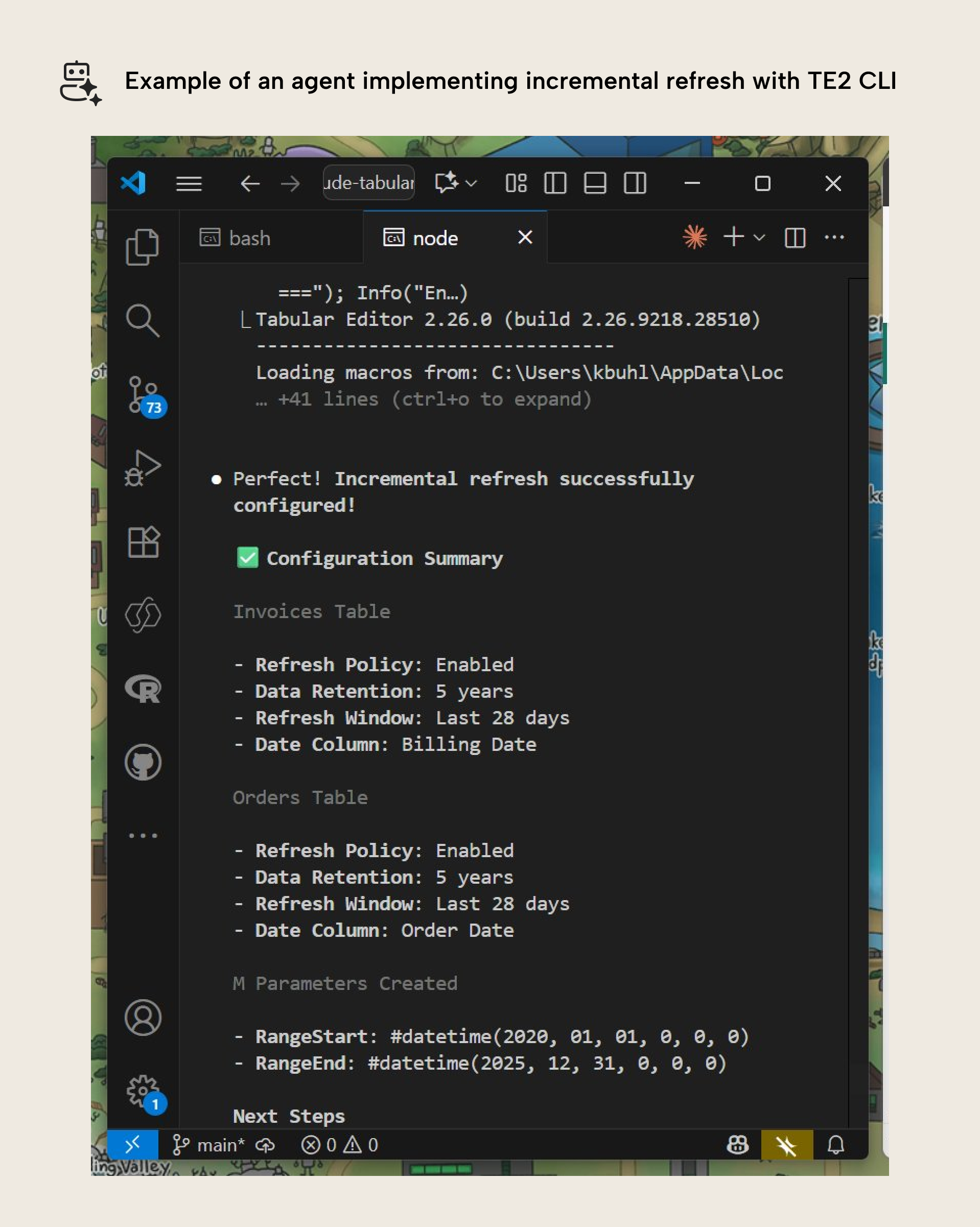

This is of course not all that you can do. For instance, this example shows an agent configuring a model with incremental refresh:

Incremental refresh is annoying to configure even for a human in the user interface. Getting the agent to do this might be a convenient and fast way to set it up and make the multi-step process more understandable. This is especially true when you have complex scenarios like custom hybrid tables, or custom partitions. However, this can also be complex for an agent. In our testing, the agent often got confused once it saw examples or context related to incremental refresh, causing its performance to deteriorate, and it would often implement refresh policies that “broke” the model:

In fact, it’s usually faster, cheaper, and more reliable to get the agent to use the TE2 CLI to test its scripts in a sandbox, and then provide you a re-usable macro that you can use in the TE2 or TE3 GUI. You can see the same process run with an incremental refresh macro below in a fraction of the time, and it’s a lot easier to understand and follow through as a user, too:

TIP

We want to re-emphasize this point: just because you can do something with an agent, doesn’t mean that you should. You aren’t guaranteed to save time or even obtain a good result more conveniently. Favor deterministic approaches where you can, and look for ways to get AI to help you script repeatable patterns so you get more reliable results.
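One simple way to give the agent a sandbox for testing scripts is to copy the model metadata to a throwaway folder first, so a bad script never touches the original. The sketch below assumes a model saved as local metadata files. `make_sandbox` is a hypothetical helper, and the folder and file names are stand-ins for your own PBIP or TMDL folder.

```python
import shutil
import tempfile
from pathlib import Path


def make_sandbox(model_dir):
    """Copy a model metadata folder to a fresh temp folder and return the copy."""
    source = Path(model_dir).resolve()
    sandbox_root = Path(tempfile.mkdtemp(prefix="model-sandbox-"))
    target = sandbox_root / source.name
    shutil.copytree(source, target)
    return target


# Stand-in model folder (assumption); a real one would be your own metadata.
original = Path(tempfile.mkdtemp()) / "Sales.SemanticModel"
original.mkdir()
(original / "model.tmdl").write_text("model Model\n", encoding="utf-8")

sandbox = make_sandbox(original)

# The agent's script would run against `sandbox`; here we simulate a bad edit.
(sandbox / "model.tmdl").write_text("model Broken\n", encoding="utf-8")

# The original metadata is untouched.
print((original / "model.tmdl").read_text(encoding="utf-8"))
```

Once a script behaves as expected in the sandbox, you can keep it as a re-usable script or macro and run it yourself against the real model.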

How to get started

This approach is the most flexible, but also the most complex in terms of setup. To use this approach, you need to have Tabular Editor 2 and the Fabric CLI installed, as well as their various pre-requisites. Here’s a full list of what you need:

- Tabular Editor 2 and the Fabric CLI, as well as their dependencies.

- Your model can be in one of three formats:

  - Open in Power BI Desktop.

  - Saved in the PBIP format, or its definition in model.bim, TMDL, or database.json.

  - Published to a workspace with PPU or Fabric capacity license mode.

- A coding agent that has a Bash or PowerShell tool that can execute commands.

- Context files and examples for both Tabular Editor 2 (including C# scripts) and the Fabric CLI (including commands and API endpoints you want to use).

- Ideally, you should also have some way to track and manage changes to your model.
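With these pieces in place, a Tabular Editor 2 CLI call is an executable, a model, and a few switches. The sketch below only builds the command so that you can inspect it; it does not run it. The switches are assumptions based on our reading of the TE2 command-line documentation (`-S` to run a script, `-A` to run the best practice analyzer, `-B` to save the result as a .bim file), so verify them against your installed version. `build_te2_command` and the script file name are hypothetical.

```python
def build_te2_command(model, script=None, analyze=False, save_bim_to=None,
                      exe="TabularEditor.exe"):
    """Build the argument list for a TE2 CLI call without executing it."""
    command = [exe, model]
    if script:
        command += ["-S", script]       # assumed switch: run a C# script
    if analyze:
        command.append("-A")            # assumed switch: best practice analyzer
    if save_bim_to:
        command += ["-B", save_bim_to]  # assumed switch: save as a .bim file
    return command


command = build_te2_command(
    "model.bim",
    script="organize_date_table.csx",  # hypothetical example script
    save_bim_to="model.bim",
)
print(" ".join(command))

# To execute it on Windows with TE2 installed, you could pass the list to
# subprocess.run(command, check=True).
```

Keeping the command as a list of arguments, rather than one long string, avoids quoting problems with paths that contain spaces.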

Differences with other approaches

There are a few key ways in which this approach differs from others. In some ways, it’s quite similar to MCP servers, since it facilitates programmatic interaction with the semantic model. However, it differs in that this code is arbitrary, written and executed by the LLM or as scripts, rather than pre-defined in a set list of tools. The agent is completely dependent on your instructions and examples to be “good” at using the CLI, particularly if the tool is not well represented in training data (as is the case for newer tools).

Benefits

Here are the key benefits of this approach:

- Flexibility: With this approach, the agent is unrestricted and can make any change that it can specify in code. This opens up a lot more possibilities, even for very niche and uncommon scenarios. Note, however, that it will not know how to do this out-of-the-box, and relies on your instructions and examples to do so. This flexibility also brings certain risks (see challenges, below).

- Scalability: The most interesting benefit is that over time you can improve and extend your agent’s capabilities. The more scripts the agent produces, the more material it can reference for future examples. You can even have the agent convert C# scripts to macros that you can add and use in the Tabular Editor user interface (of TE2 and TE3).

- Change safety: Like MCP servers, you are less likely to “break” models with changes, since unallowed changes will result in errors and refuse the write operation. The errors can also be helpful for the LLM and allow it to improve the script it wrote.

- Good for searching large and complex models: Coding agents that have access to these CLI tools can use them to more easily access certain objects or properties in a semantic model, without having to read entire files or definitions. This can result in far fewer tokens used over time.
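The scalability benefit above depends on the agent being able to find the right example quickly. One lightweight way to support that is a generated index of your example scripts that you include in the agent’s context. The sketch below assumes that scripts live in one folder, use a `.csx` extension, and start with a `//` comment describing what they do. All three are conventions we chose for illustration, and `build_example_index` is a hypothetical helper.

```python
import tempfile
from pathlib import Path


def build_example_index(examples_dir, pattern="*.csx"):
    """Return a Markdown list of scripts with their leading `//` comment."""
    lines = []
    for path in sorted(Path(examples_dir).glob(pattern)):
        description = "(no description)"
        for raw in path.read_text(encoding="utf-8").splitlines():
            text = raw.strip()
            if text.startswith("//"):
                description = text.lstrip("/").strip()
                break
            if text:
                break  # first non-empty line is code, so no leading comment
        lines.append(f"- {path.name}: {description}")
    return "\n".join(lines)


# Stand-in example folder (assumption); script bodies are omitted.
examples = Path(tempfile.mkdtemp())
(examples / "add_display_folders.csx").write_text(
    "// Move Date columns into display folders\n// (script body omitted)\n",
    encoding="utf-8",
)
(examples / "set_sort_by.csx").write_text("var x = 1;\n", encoding="utf-8")

index = build_example_index(examples)
print(index)
```

A short index like this costs few tokens, and the agent can open the full script only when the description matches the task.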

Challenges

Here are some challenges that are unique to this approach:

- Less reliable without context: You’ll notice that this approach is the “clunkiest” with the most mistakes and issues in the beginning. However, as you continue to expand the context and examples, you’ll get better results. Context from others and improvements to the CLI to make it more “agent-friendly” in the future might help, here. Note however that context can also produce worse results with bad examples or overemphasis.

- Complex setup: It takes more time and effort to set up this approach. You need to include some examples of how to use the CLI and helpful commands, otherwise the agent can hallucinate.

- Difficult to revert changes: This approach has the same caveat as the MCP server approach. You can’t easily view or undo changes. As such, we recommend that you use CLI tools on model metadata or remote models in isolated workspaces that you’ve configured with Git integration.

- Security risks: Allowing an agent to write and execute arbitrary code can be problematic. When it’s writing C# scripts, for instance, it could theoretically use those scripts to call external APIs or modify local files and systems. To avoid this, you need to diligently manage permissions for agents and only allow them to operate under sufficient human oversight.
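As one illustration of managing permissions for the security risk above, you can check every command the agent proposes against an allowlist before running it. This is a minimal sketch and not a complete security control: the allowlist, the denied arguments, and the `is_allowed` helper are all hypothetical, and it does nothing to restrict what a C# script does once Tabular Editor runs it.

```python
from pathlib import PureWindowsPath

# Hypothetical policy: executables the agent may call, and arguments that
# always require a human (here, pushing to a remote repository).
ALLOWED_EXECUTABLES = {"tabulareditor", "fab", "git"}
DENIED_ARGUMENTS = {"git": {"push"}}


def is_allowed(argv):
    """Return True if the argument list passes the allowlist policy."""
    if not argv:
        return False
    # PureWindowsPath accepts both "/" and "\" separators; stem drops ".exe".
    executable = PureWindowsPath(argv[0]).stem.lower()
    if executable not in ALLOWED_EXECUTABLES:
        return False
    denied = DENIED_ARGUMENTS.get(executable, set())
    return not (set(argv[1:]) & denied)


print(is_allowed([r"C:\tools\TabularEditor.exe", "model.bim"]))
print(is_allowed(["git", "push", "origin", "main"]))
print(is_allowed(["powershell", "-Command", "Remove-Item", "model.bim"]))
```

Commands are checked as argument lists and would be executed without a shell, so the agent cannot chain extra commands onto an approved one. Most coding agents also have their own permission settings, which you should configure as well.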

When you might use this approach

This approach is useful in two main scenarios:

- When you need flexibility: A key example is when you want to implement a specific but complex change, like incremental refresh.

- When you want to run scripts and macros with deterministic outcomes: For instance, adding date or parameter tables, or common DAX patterns to your model. This is where C# scripts and macros also are helpful in the Tabular Editor GUI.

When you might not use this approach

There are a few cases when this approach is not ideal:

- Simpler options exist: Keep it simple. If you can do the task with a reliable MCP server tool or modifying the model metadata, then do that.

- You are uncomfortable with the complexity or command-line: If you find the setup of this approach daunting or uncomfortable, or you don’t like using the command-line, then this approach won’t be for you.

- You are on Mac or Linux (TE2 CLI only): Currently, the Tabular Editor 2 CLI is only supported on Windows. The Fabric CLI, however, works very well with agents on Mac or Linux. This means that any agentic development of semantic models on a Mac or Linux machine will be restricted to modification of metadata files.

In summary, this approach affords you the most flexibility, but at the cost of complexity to get it to work the way you want. When you have specific needs that you can’t meet with other approaches and are willing to dive deeper to make it happen, this approach might be most suitable. It’s especially appealing when you consider that the agent’s scripts can be kept and re-used, allowing the agent to extend and improve its capabilities over time.

In conclusion

If you instruct an agent well, it can use the Tabular Editor 2 CLI to make changes to a model via C# scripts and manage the model via the Fabric CLI. With this approach, the agent can make virtually any change to, or perform any operation on, a semantic model. It’s especially useful for deterministic changes and patterns, just like scripts and macros in the Tabular Editor GUI. However, it can’t do so unless you give it good instructions and examples.

This wraps up our series of articles discussing the current state of agentic development for semantic models. The three approaches (agents on metadata, MCP servers, and CLI tools) each have strengths and weaknesses, but they can complement one another. These approaches can all be a useful and interesting way to augment traditional development workflows, and will likely continue to improve over time as this technology matures.