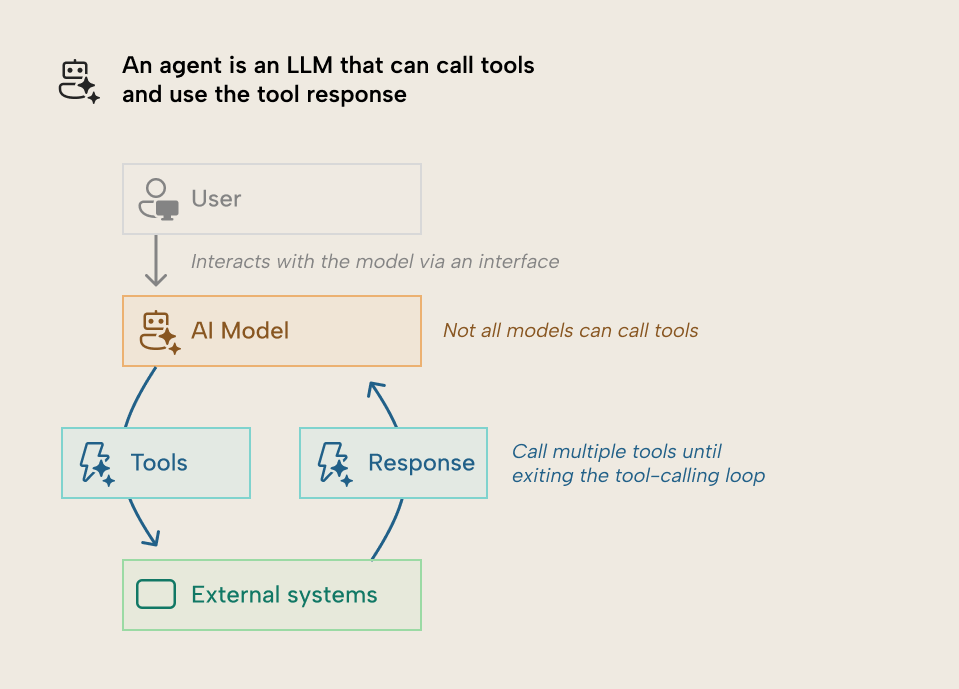

The term “agent” is used often nowadays without much explanation of what it actually means; many explanations make AI agents seem complex, or even fantastical. In reality, agents are simple to understand: they are AI models that can iteratively take actions with tools and operate with some degree of autonomy.

In this article, we give a short and simple introduction to AI agents and what they are in the context of semantic models.

Agents in simple terms

Tools are simply code that acts on an external system, environment, or process. They typically also involve special prompts to ensure that the model can identify and use the tool properly and effectively use its response. Many (but not all) models can call tools to take an action and then receive their response to improve the output.
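To make this concrete, here is a minimal Python sketch of what a tool can look like from the application's side: a function that touches an external system, plus a description the model reads to decide when to call it. The `Tool` class, the `list_measures` function, and the sample metadata are all hypothetical and only illustrate the idea; real frameworks and MCP servers define their own formats.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    name: str
    description: str  # the text the model reads to decide when and how to use the tool
    run: Callable[[str], str]


def list_measures(table: str) -> str:
    # Hypothetical stand-in for a call to an external system (for example, model metadata).
    fake_metadata = {"Sales": ["Total Sales", "Sales YTD"], "Budget": ["Total Budget"]}
    return ", ".join(fake_metadata.get(table, []))


# Registry of the tools that the application exposes to the model.
TOOLS: Dict[str, Tool] = {
    "list_measures": Tool(
        name="list_measures",
        description="Return the measure names defined in the given table.",
        run=list_measures,
    )
}


def handle_tool_call(name: str, argument: str) -> str:
    """Run the tool the model asked for and return its response as text."""
    tool = TOOLS.get(name)
    if tool is None:
        return f"Error: unknown tool '{name}'"
    return tool.run(argument)


result = handle_tool_call("list_measures", "Sales")
print(result)
```

The response is returned as plain text because that is what gets passed back to the model, which then uses it to improve its output.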

A simple example is the web search functionality in popular tools like ChatGPT, Claude.ai, Gemini, and others. Another simple example specific to semantic models (which you can see demonstrated and shared here) is the use of an MCP server to browse Fabric workspace content and query semantic models in DAX:

This is (to some extent) similar to how other vendor tools let you analyze data in natural language, too, such as Fabric data agents, Copilot, or Databricks Genie.

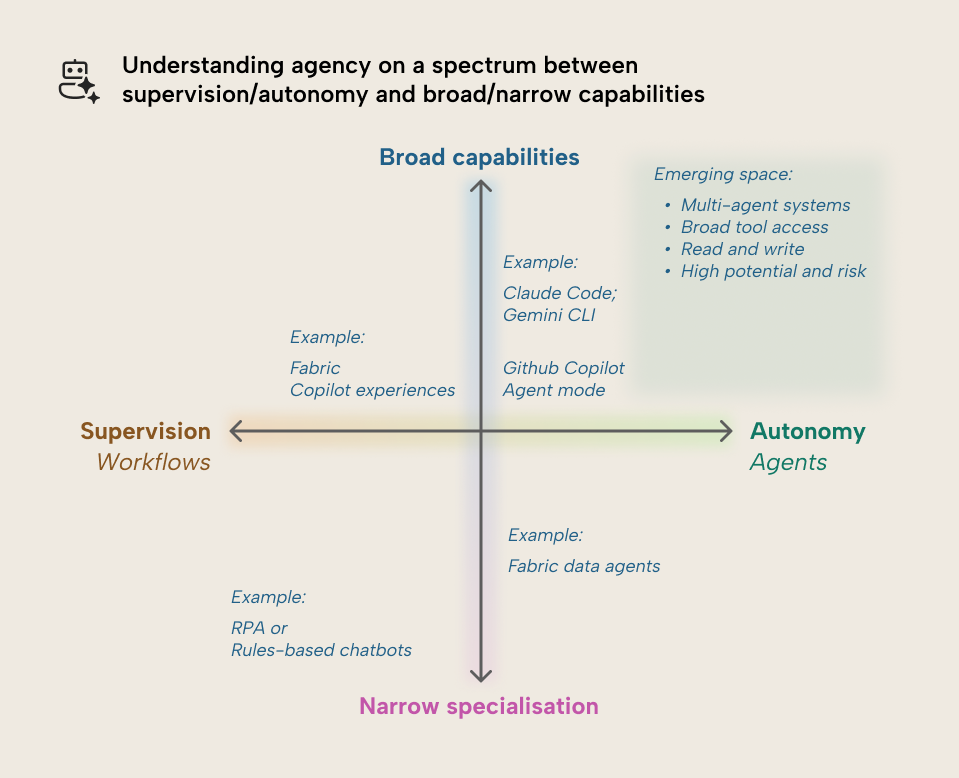

This definition is contentious and has some nuance, since generally an “agent” is also autonomous, acting under the supervision of a “human in the loop”. For instance, Anthropic [1] mentions this with a distinction between workflows and agents, both of which involve LLMs using tools; workflows follow predefined paths, while agents orchestrate their own processes and tools. This distinction is primarily important architecturally but can also be helpful to understand if you only use them.

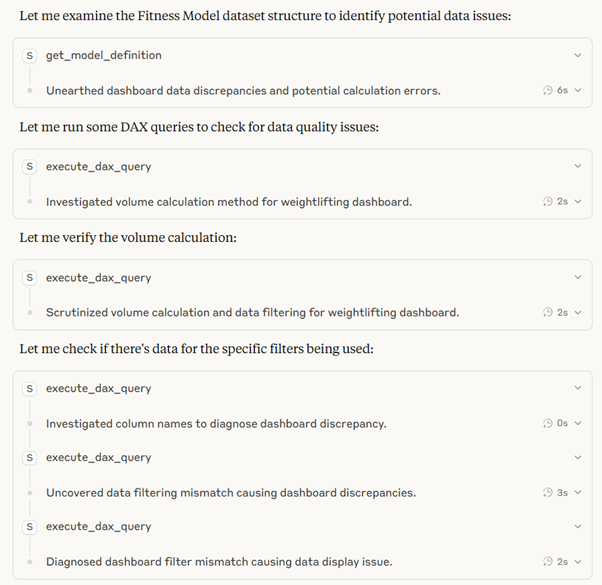

Returning to our semantic model example, this is basically a DAX query coding agent which uses some tools to retrieve metadata (like table, column, and measure names, descriptions, and definitions) and a main tool to generate and evaluate DAX. When used with models that are proficient at calling tools, like Claude Sonnet or Opus 4, the model will continue to write and run code until it retrieves a result that can satisfy the user’s request given the context.

Example taken from a related article written by the author: AI in Power BI, time to pay attention.
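The write-run-retry behaviour described above can be sketched as a small loop. This is an illustration under heavy assumptions, not how any particular product works: `scripted_model` is a hard-coded stand-in for an LLM, and `get_metadata` and `run_dax` are hypothetical tools that fake a metadata lookup and a DAX evaluation.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (tool name, argument, tool response)


def get_metadata(_: str) -> str:
    # Hypothetical tool: a real one would read metadata from the semantic model.
    return "Table Sales: columns [Amount]; measures [Total Sales]"


def run_dax(query: str) -> str:
    # Hypothetical evaluator: only a query that uses the existing measure succeeds.
    if "[Total Sales]" in query:
        return "RESULT: 1250"
    return "ERROR: measure not found"


TOOLS: Dict[str, Callable[[str], str]] = {"get_metadata": get_metadata, "run_dax": run_dax}


def scripted_model(history: List[Step]) -> Tuple[str, str]:
    """Stand-in for an LLM: chooses the next action from what it has seen so far."""
    if not history:
        # First attempt guesses a measure name that does not exist.
        return ("run_dax", 'EVALUATE ROW("Sales", [Sales Total])')
    last_tool, _, last_response = history[-1]
    if last_response.startswith("ERROR"):
        return ("get_metadata", "")
    if last_tool == "get_metadata":
        return ("run_dax", 'EVALUATE ROW("Sales", [Total Sales])')
    value = last_response.split(": ", 1)[1]
    return ("final_answer", f"Total sales are {value}")


def run_agent(max_steps: int = 5) -> Tuple[str, List[Step]]:
    """Call tools in a loop until the model gives a final answer or the step limit is hit."""
    history: List[Step] = []
    for _ in range(max_steps):
        action, argument = scripted_model(history)
        if action == "final_answer":
            return argument, history
        response = TOOLS[action](argument)
        history.append((action, argument, response))
    return "Stopped: step limit reached", history


answer, trace = run_agent()
print(answer)
for tool_name, _, response in trace:
    print(tool_name, "->", response)
```

The step limit is a simple guardrail: without it, a model that never produces a satisfactory result would keep calling tools (and spending tokens) indefinitely.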

However, an implicit expectation with agents is that their actions should lead to changes. For many, an AI tool does not “feel” agentic unless it is doing or changing something, rather than just retrieving information or querying data. This is perhaps best reflected in popular agentic coding tools, like GitHub Copilot’s “Agent mode”, or the more powerful command-line (CLI) tools Claude Code and Gemini CLI. These tools can directly view, modify, and remove files in a project directory (and other directories, if you let them).

As such, you might understand agency on a spectrum:

Semantic model agents

We’ve already given examples of agents in the context of semantic models, including an agent that lets you query semantic models and other tools that enable this inside of their platforms. However, this topic merits deeper exploration. Next, we’ll give some examples of how agents might help you with semantic model development.

An obvious application of agents is to use them to help you build semantic models. You can do this in a number of ways, including MCP servers that write changes to model objects or definitions, or directly modifying model metadata files (like the model.bim or TMDL files). There are already many examples of semantic model MCP servers that you can find on GitHub, and people in the community who have made or are making their own.
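As a rough illustration of the “directly modifying model metadata files” route, the Python sketch below sets a measure description in a JSON structure shaped like a model.bim file. The excerpt is heavily trimmed and hypothetical, and `set_measure_description` is an illustrative helper rather than part of any tool; a real agent would read and write the actual file and would need to validate far more than this.

```python
import copy
import json

# A heavily trimmed, hypothetical excerpt in the shape of a model.bim file.
MODEL_BIM = json.loads("""
{
  "name": "SalesModel",
  "model": {
    "tables": [
      {
        "name": "Sales",
        "measures": [
          {"name": "Total Sales", "expression": "SUM(Sales[Amount])"}
        ]
      }
    ]
  }
}
""")


def set_measure_description(bim: dict, table: str, measure: str, text: str) -> dict:
    """Return a modified copy so the original can still be compared or restored."""
    updated = copy.deepcopy(bim)
    for t in updated["model"]["tables"]:
        if t["name"] != table:
            continue
        for m in t.get("measures", []):
            if m["name"] == measure:
                m["description"] = text
                return updated
    # Fail loudly instead of silently writing nothing.
    raise KeyError(f"Measure '{measure}' not found in table '{table}'")


updated = set_measure_description(MODEL_BIM, "Sales", "Total Sales", "Sum of sales amount.")
print(json.dumps(updated["model"]["tables"][0]["measures"][0], indent=2))
```

Working on a copy and raising an error when the target object is missing are small design choices that make an agent's edits easier to review before they reach the real model.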

We’ve been exploring many of these approaches, including MCP, but also development by code agents and “teams” of multiple, specialized agents which work in tandem under an orchestrator. We will expand on this in future articles and videos, but just to show you what this looks like, below is an excerpt from a longer example. Please note that the gifs are sped up to 1.5x for convenience:

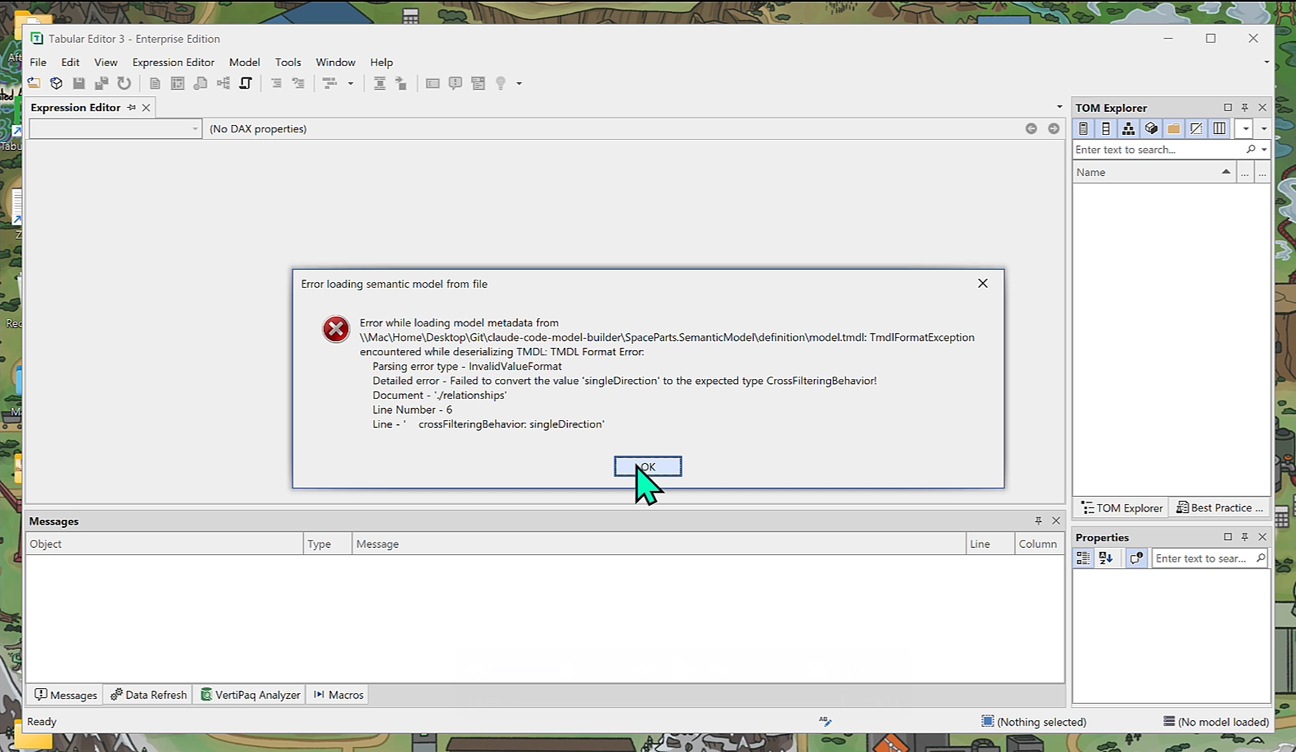

The problem with autonomous agentic workflows (or MCP servers) running on metadata is that they can be susceptible to errors that make validation and debugging tedious and time-consuming. If the model doesn’t open, you are left sifting through metadata yourself, or asking the AI some variant of “pls fix” with the error message. It might work, but is that worth your time (and the tokens)? Maybe; it depends.

Of course, this will get better over time. We have seen this work; it is trivial to architect a demo that “just works” using AdventureWorks, Contoso, or other sample data (including SpaceParts!), particularly when you spend hours iterating and testing to find the right prompt for that model. However, that is not the point. The reality is that (currently) these approaches, while impressive to watch and fun to use, don’t necessarily lead to better or faster results in many real-world development scenarios. That doesn’t mean that we should dismiss them, though. Undoubtedly, these tools and approaches can lead to real value, and experimenting with them to feel that out for yourself is paramount.

At Tabular Editor, we have been using and experimenting with these tools deeply to understand where they work, where they don’t, and what good practices, guardrails, and workflows make sense or improve results. We think it is important to experiment with and explore these possibilities, and document where we see improvements and value. It’s too early to share these observations, because we want to feel more confident and comfortable with the subject matter before advocating for any particular tool, approach, or technique. This technology is still very new and evolving very fast; big claims require big proof (and a lot of testing!).

We are working toward an agentic experience deeply integrated in Tabular Editor focused on being helpful and unintrusive but still enabling you to let the LLM view and make changes to your model. This tool isn’t yet available. We’ll share more when we are ready. But you can find some previews (blemishes and all), below. If you’re looking for a live preview and discussion, our CTO Daniel Otykier will be giving some live demonstrations at FabCon in Vienna in September.

Here’s an example showing the assistant guiding you through and interacting with the user interface. Then, it creates a script to let you rename and organize tables with display folders and descriptions:

We think that this integration can be a useful way to help people get more out of Tabular Editor and make C# scripts and macros easier to use and apply, especially for less advanced users. However, we want it to be helpful, convenient, and unintrusive, with benefits like the following:

- View and review scripts before running them

- Control context with selection in the TOM explorer

- View and review changes before committing them to your model, with the help of UI features and DAX code assistance and validation

- Undo any changes with Ctrl + Z

These are just some simple examples, and obviously not a test or demonstration to market the full capabilities. It’s important to keep in mind that the examples here are also not necessarily the best use cases for AI; you can create a date table and time intelligence measures more quickly and easily with Bravo.

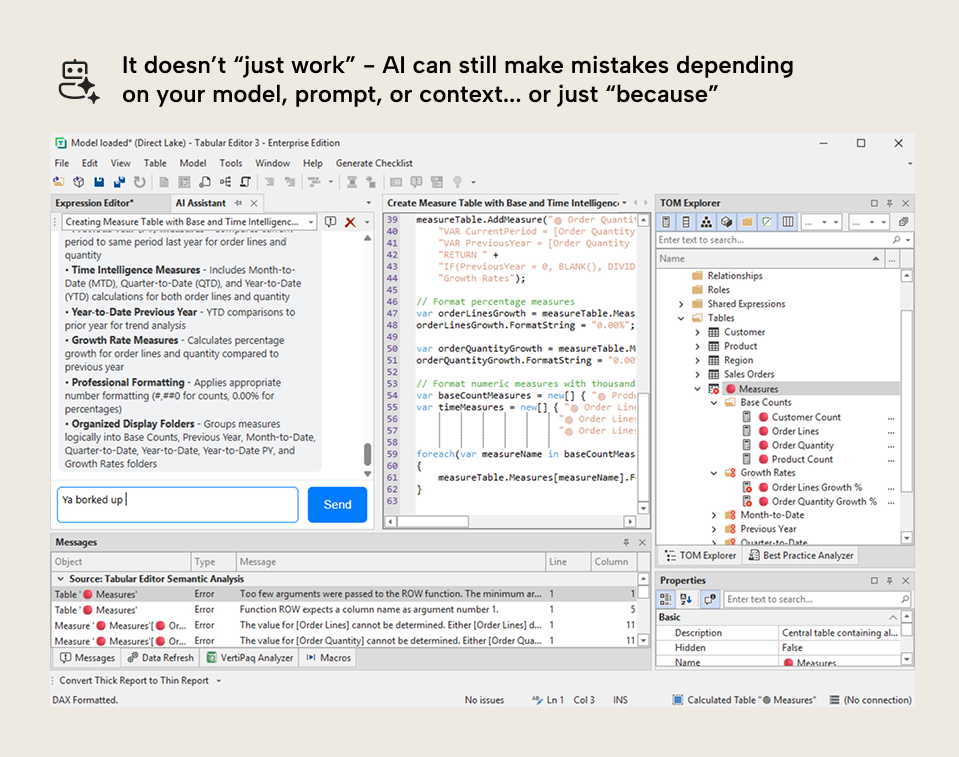

Further, to reiterate, it doesn’t “just work” – here is another example where the AI assistant made mistakes in the measure references:

Be skeptical of demos with agents

These agentic tools make for impressive demonstrations, but don’t be fooled; this is not magic. Massive time and effort goes into architecting the right context or prompts, and you still frequently encounter common AI mistakes or issues. Much of the self-reported benefit might also arise in newer or simpler projects, rather than from putting AI on top of an existing solution that likely holds both explicit and implicit complexity.

Some recent research has suggested that agentic coding tools might result in moderate, negligible, or even negative productivity gains in certain scenarios. Why is that? Perhaps a question better addressed in a future article…

Agentic coding tools are exciting, but the truth is more nuanced than “AI will build your models for you”. These tools can and will certainly help you accelerate parts of your technical workflow. They are both impressive and satisfying to use when they work.

But it’s important to remember that the hard work comes from gathering and understanding the business requirements, and knowing what approaches or patterns to use, and why. Neglecting this or deferring it to an AI agent risks producing something that isn’t useful. However, if you are already doing this successfully today without AI, then it is very likely that you will be able to do it even better with AI in the future.

In conclusion

Agents are LLMs that can iteratively call tools and use their responses. True agents work with some degree of autonomy, acting with a human in the loop but doing their own reasoning and making their own decisions about what tools to call and how to use the responses. An AI tool doesn’t need to create visible changes or modify files to be an agent, but the most successful and popular agentic tools right now are coding agents that do just that.

For semantic models, agents are likely to have a profound impact on both model development and consumption. They will also have significant consequences for security, governance, and user enablement. Agentic model development has already been possible for months (with or without MCP servers), but practical application remains elusive. However, we will likely see further advancement and adoption, particularly as models and tools mature and people learn to better apply them to the right scenarios.