Key Takeaways

- This article is part of a series. Start with part one, here.

- AI applications can use MCP servers with tools to manipulate semantic models. With this approach, the tools provide a programmatic way to read and make changes to the semantic model. This is typically done via the Tabular Object Model (TOM).

- There are multiple Power BI MCP servers available, including one from Microsoft. The Power BI modelling MCP provides many useful tools and resources for agentic development of semantic models. It’s one of the most sophisticated and well-developed MCP servers we’ve used so far (not just for Power BI, but for any area).

- MCP servers are convenient to use and set up. You can use them with any AI application that supports the model context protocol. They contain out-of-the-box context to help the agent more easily find and use tools for the right scenario and prompt. The Power BI modelling MCP server from Microsoft is very well-made with lots of options and guardrails to help you.

- However, MCP servers use a lot of context, and their tools can be inflexible or opaque. When you use an MCP server, it consumes a large share of your available context window, which is like a budget that you have for your chat sessions with AI. When you “spend” too much, you can experience reduced performance and hit limits faster. Also, you can’t alter the available tools in an MCP server, so they might not fit your scenario or use-case. You need to understand the available tools and what they do to use them well.

- We recommend that you use MCP servers on local model metadata. This helps you more efficiently perform bulk operations, while making it easier to track, manage, and revert changes. Simple changes can still be performed directly on TMDL files by coding agents.

This summary is produced by the author, and not by AI.

Using Power BI MCP servers to modify semantic models

This series teaches you about the different approaches to use AI to facilitate changes to a semantic model using agents. A semantic model agent has tools that let it read, query, and write changes to a semantic model. Using agents this way is called agentic development, and it can be a useful way to augment traditional development tools and workflows in certain scenarios.

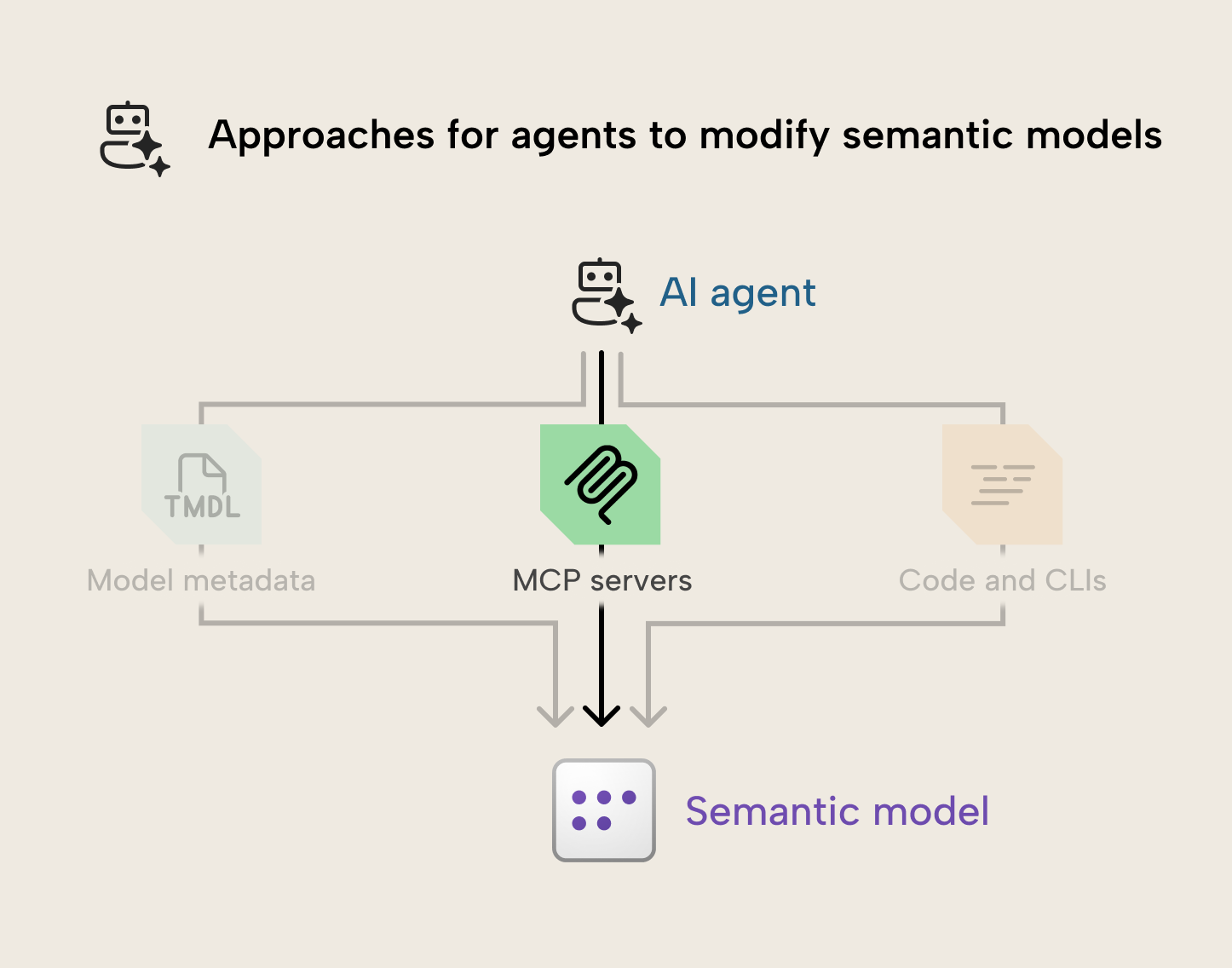

There are several different ways that AI can make changes to a semantic model, including modifying metadata files, using MCP servers, or writing code. Each approach has pros and cons, and if you use AI to make changes to semantic models, then it’s likely that you’ll use all three.

In this article, we discuss MCP servers in detail to facilitate agentic development of Power BI semantic models.

What is an MCP server?

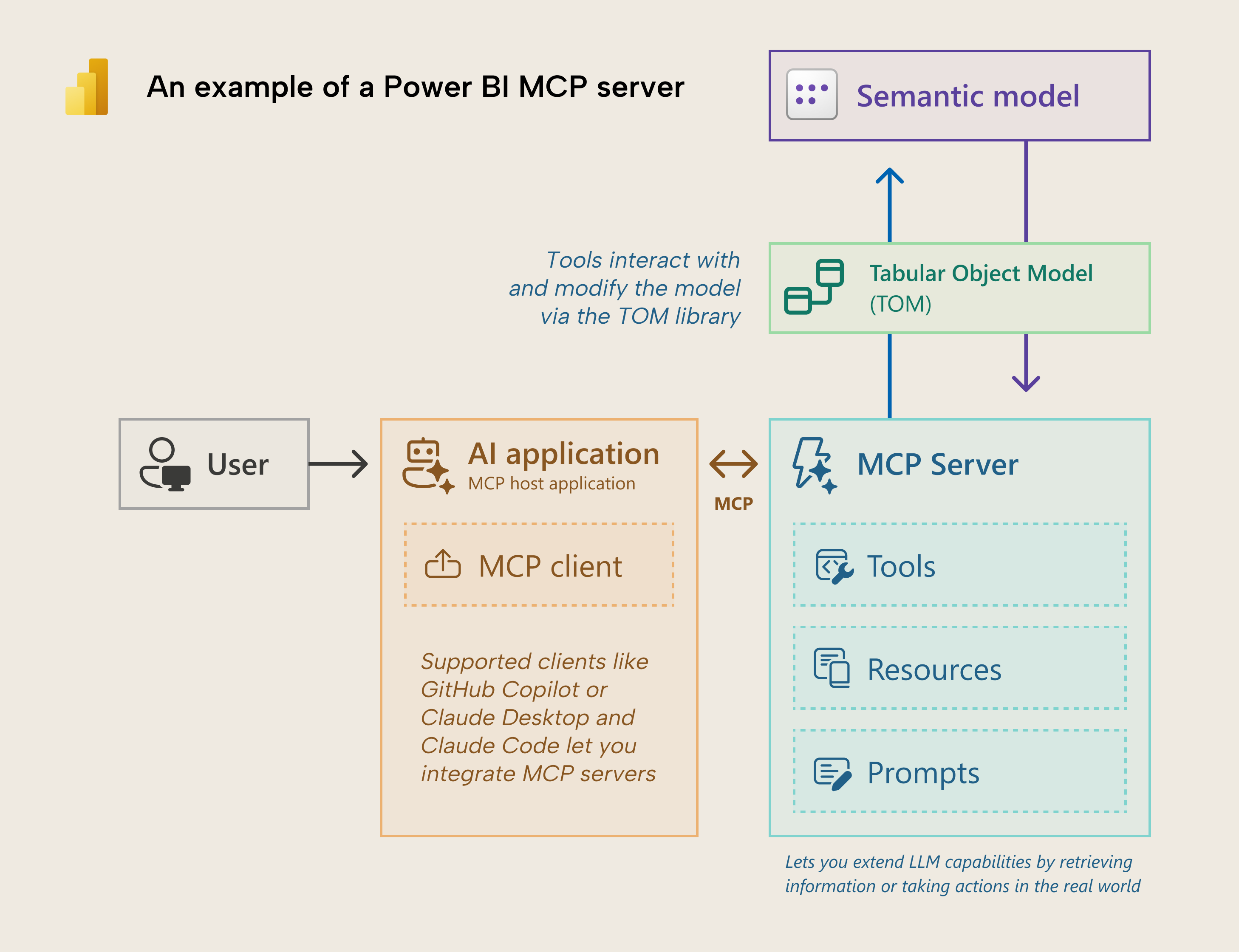

In a previous article we introduced the model context protocol (MCP) and discussed how MCP servers might change BI tools. In summary, MCP servers are a way to extend the capabilities of large language models (LLMs) by providing them with re-usable context (resources), prompts, and tools. Tools give specific functions and code that allow AI to interact with their environment, either to retrieve context or take actions.
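To make tools, resources, and prompts more concrete, here is a minimal sketch of the JSON-RPC 2.0 messages that an MCP client sends to a server to discover these and to call a tool. The method names follow the MCP specification; the tool name `example_list_measures` and its arguments are hypothetical and don't refer to any tool in a real Power BI MCP server.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)


def mcp_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request of the kind an MCP client sends to a server."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discover what the server offers: tools, resources (context), and prompts.
list_tools = mcp_request("tools/list")
list_resources = mcp_request("resources/list")
list_prompts = mcp_request("prompts/list")

# Call one tool. The tool name and arguments are hypothetical.
call_tool = mcp_request(
    "tools/call",
    {"name": "example_list_measures", "arguments": {"table": "Sales"}},
)

print(json.dumps(call_tool, indent=2))
```

In practice, your AI application builds and sends these messages for you; the sketch only shows what the LLM's tool use looks like on the wire.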

In the context of a Power BI semantic model, an MCP server can use the Tabular Object Model (TOM) library to make programmatic changes to the model via the XMLA protocol:

This is similar to existing approaches with tools like SQL Server Management Studio (SSMS) and external tools like Tabular Editor. What’s different here is that the TOM operations are encoded in tools used by the LLM and not directly used by the user. The tools determine what the AI can do. An MCP server may allow all operations that you could do via a C# script in Tabular Editor or by editing TMDL files by hand, or it may be very constrained. This depends on the individual MCP server and how its tools work.

NOTE

In this article, we focus on the Power BI modelling MCP server from Microsoft, which contains a variety of tools to perform bulk operations on a semantic model, as well as resources to help with less common operations. The MCP server works on local models in Power BI Desktop, published models in a workspace, or local model metadata.

There are already dozens of other impressive MCP servers created by the community that you can find on GitHub, as well, like PowerBI-Desktop-MCP by Maxim Anatsko.

To illustrate, here’s an example of Claude Code using the Power BI modelling MCP server from Microsoft to make changes to a semantic model.

This demonstration shows Claude Code using the MCP server to implement DAX functions that were missing from a model after rebinding a report. The agent uses the MCP server to connect to the local model open in Power BI Desktop, explore it, and add the functions, replacing the DAX. Notice that it's harder to view and follow the changes; we no longer have in-line diffs showing what the agent changed, as we do when the agent works directly with local metadata files. The agent also gets confused at one point and starts working with the wrong model.

However, using the MCP server is faster and more convenient; any breaking changes are caught and return errors, so the agent can fix them and apply only valid changes. In a few minutes, the agent can make the changes, which the user can then verify in Power BI Desktop or in Tabular Editor.

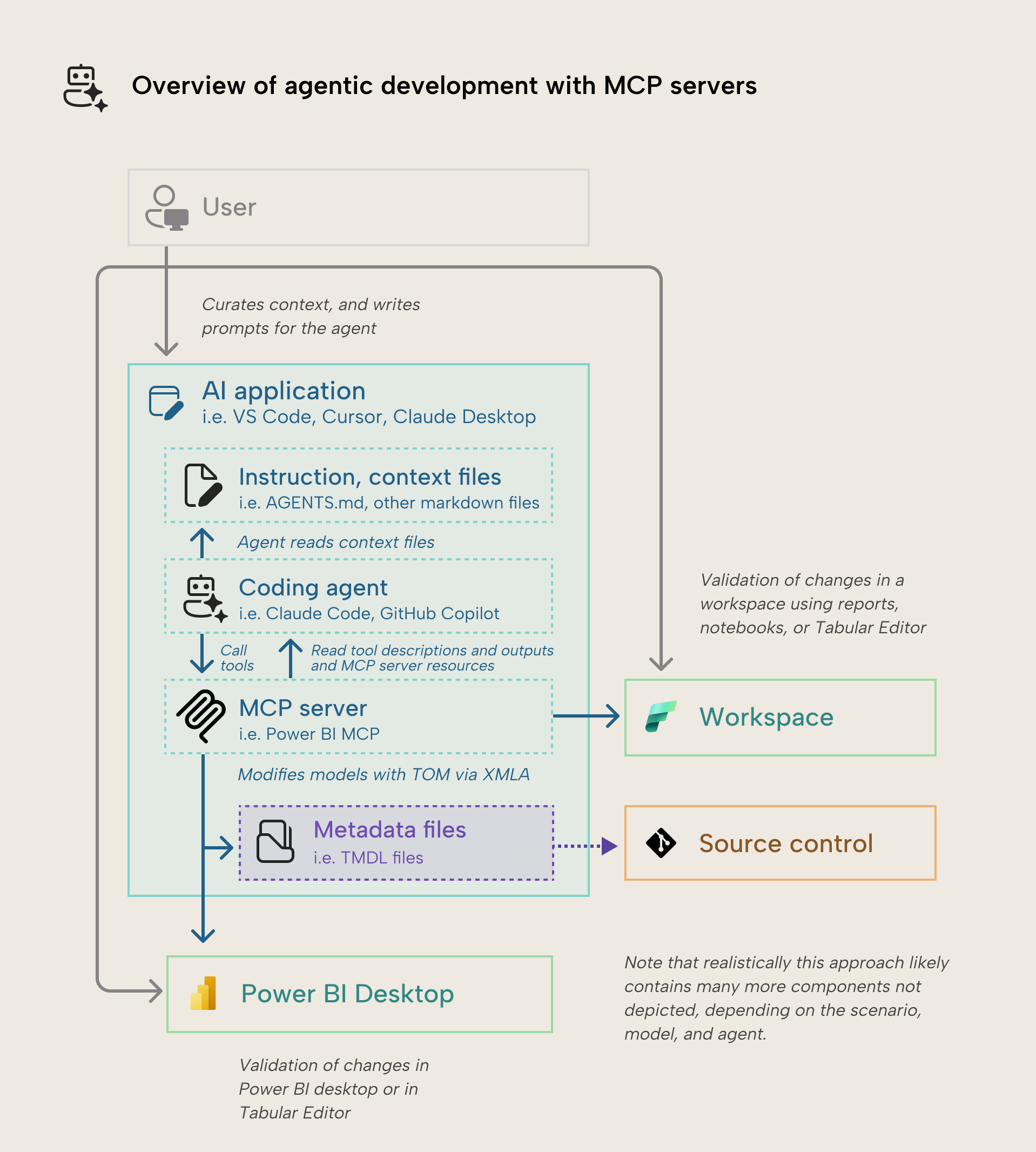

Scenario diagram

The following diagram depicts a simple overview of how you can use MCP servers to facilitate agentic development of a semantic model:

Note that this diagram depicts a somewhat simplified scenario. In a real implementation, you’re likely to use more components and tools, both in a traditional and AI-sense. You can understand this process as follows:

- The user has their model either open in Power BI Desktop or published to a workspace. They might also have the model saved as a PBIP to view and work on the model metadata. The approach depends on user preference and the specific MCP server.

- The user curates a base set of instructions (such as AGENTS.md or CLAUDE.md) and context (other instruction files; typically, markdown) about the TMDL format and the semantic model that they want to change. They optionally also configure other agentic tools and components, depending on the coding agent that they use, like the Microsoft Docs remote MCP server or a Claude Code skill. Setting up these instructions and context is not a one-off activity and requires active curation and maintenance; it’s more of a communication and writing skill than a technical skill.

- The user creates a prompt or drafts a plan with the coding agent. This is best done as an iterative exercise with the coding agent before it makes any changes. Context, instructions, and prompts should be written by the user rather than generated by AI or agents, as generated context may lead to degraded performance.

- The user submits the prompt to start a session.

- The agent ingests its instructions, as well as tool descriptions and resources from configured MCP servers. This information enters the context window, which is the finite budget of tokens the agent can use per session.

- The agent can use built-in or MCP server tools to read other instructions or retrieve information about the semantic model (once connected).

- The agent makes changes to the semantic model, either in Power BI Desktop or in a published model in the Power BI service.

- The user validates those changes in Power BI Desktop or other tools.

WARNING

With MCP servers or with code, it’s more difficult to have visibility on and undo changes to your semantic model.

As such, you need to make sure that you have a way to save, view, and commit changes to a remote repository. We recommend that you save models as PBIP files for this and use the TMDL model metadata format.
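As a rough illustration of why local TMDL metadata helps with visibility, the following sketch compares two snapshots of a model's `.tmdl` files and prints a unified diff. It's a minimal sketch using only the Python standard library; the file name and the TMDL content are illustrative assumptions, and a real workflow would normally rely on `git diff` and commits instead.

```python
import difflib
from pathlib import Path


def snapshot(folder):
    """Read every .tmdl file under a folder into a {relative path: text} dict."""
    root = Path(folder)
    return {
        path.relative_to(root).as_posix(): path.read_text(encoding="utf-8")
        for path in sorted(root.rglob("*.tmdl"))
    }


def diff_snapshots(before, after):
    """Return a unified diff covering every added, removed, or changed file."""
    chunks = []
    for name in sorted(set(before) | set(after)):
        old, new = before.get(name, ""), after.get(name, "")
        if old != new:
            chunks.extend(
                difflib.unified_diff(
                    old.splitlines(keepends=True),
                    new.splitlines(keepends=True),
                    fromfile=f"before/{name}",
                    tofile=f"after/{name}",
                )
            )
    return "".join(chunks)


# In-memory example; the TMDL content is illustrative only.
before = {
    "tables/Sales.tmdl": "table Sales\n\n\tmeasure 'Total Sales' = SUM(Sales[Amount])\n"
}
after = {
    "tables/Sales.tmdl": (
        "table Sales\n\n"
        "\tmeasure 'Total Sales' = SUM(Sales[Amount])\n"
        "\t\tformatString: #,0\n"
    )
}
diff_text = diff_snapshots(before, after)
print(diff_text)
```

To use this on disk, you would call `snapshot()` on the folder that holds your saved model metadata before and after an agent session, then pass both results to `diff_snapshots()`.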

Demonstrations: what this looks like

Here are a few examples of agents working with MCP servers on a semantic model.

Example with GitHub Copilot

GitHub Copilot in agent mode comes with extensive support for MCP servers and customization. The Power BI MCP comes pre-packaged with a VS Code extension that helps you to manage it.

The following example shows GitHub Copilot adding descriptions, format strings, and display folders to multiple measures in a model. Note that here we are working with Power BI Desktop:

WARNING

Many VS Code extensions have silently and automatically added MCP servers and extension tools. You should regularly check which of these are enabled when you use an agent like GitHub Copilot in VS Code. These tools and MCP servers can consume your available context, resulting in shorter sessions and reduced agent performance.

Always disable the tools that you don’t need for a session or project. For more information, see this documentation article.
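To get a feel for which tools cost the most context, you could rank tool definitions by their approximate size. The sketch below assumes the common rule of thumb of about four characters per token, which is only a rough approximation (real tokenizers differ), and the two tool definitions are hypothetical examples in the shape that MCP servers use (`name`, `description`, `inputSchema`).

```python
import json


def estimate_tokens(text: str) -> int:
    """Very rough heuristic: about four characters per token."""
    return max(1, len(text) // 4)


def rank_tools_by_size(tools):
    """Sort tool definitions by the estimated tokens that their JSON occupies."""
    sized = [(tool["name"], estimate_tokens(json.dumps(tool))) for tool in tools]
    return sorted(sized, key=lambda pair: pair[1], reverse=True)


# Hypothetical tool definitions, for illustration only.
tools = [
    {
        "name": "example_list_tables",
        "description": "List tables.",
        "inputSchema": {"type": "object"},
    },
    {
        "name": "example_measure_operations",
        "description": "Create, update, or delete measures. " * 20,
        "inputSchema": {"type": "object"},
    },
]

ranked = rank_tools_by_size(tools)
for name, tokens in ranked:
    print(f"{name}: ~{tokens} tokens")
```

A ranking like this only hints at where the context goes; the agent's own context breakdown, where available, is the more reliable source.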

Example with Claude Desktop

Another popular tool that supports MCP servers is Claude Desktop. Claude Desktop has a leaner, simpler user interface compared to VS Code and excels at providing ad hoc visuals or diagrams of various concepts.

To configure the MCP server in Claude Desktop, you need to download the VS Code extension .vsix file and extract the executable (.exe). Then, you have to provide more detailed configuration either in the settings JSON, or by creating a custom .mcpb or .dxt file.
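As a hedged sketch of the settings JSON route, the following code adds an MCP server entry under the `mcpServers` key, which is the structure that Claude Desktop's configuration file (commonly `claude_desktop_config.json`) uses. The executable path is the same placeholder used in the Claude Code command in this article, and reusing the `--start` argument here is an assumption; `other-server` is a hypothetical existing entry.

```python
import json


def add_server(config, name, command, args=None, env=None):
    """Return a copy of a Claude Desktop config dict with one MCP server added."""
    servers = dict(config.get("mcpServers", {}))
    entry = {"command": command, "args": list(args or [])}
    if env:
        entry["env"] = dict(env)
    servers[name] = entry
    return {**config, "mcpServers": servers}


# Hypothetical existing configuration with another server already present.
existing = {"mcpServers": {"other-server": {"command": "other.exe", "args": []}}}

updated = add_server(
    existing,
    "powerbi-modeling-mcp",
    r"C:\pathto\powerbi-modeling-mcp.exe",  # placeholder path; replace with yours
    ["--start"],  # assumption: same argument as in the Claude Code command
)

print(json.dumps(updated, indent=2))
```

The function returns a new dictionary rather than editing the input, so servers that you already configured are preserved and the original configuration stays untouched until you write the result back to the file yourself.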

The following is an example of Claude Desktop using the MCP server to depict relationships between fields in a rather sloppy diagram:

Note that Claude Desktop can create artifacts which render code into images in the application. Claude Desktop is good for exploratory tasks or model consumption and analysis, but not as much for development. Usually it also performs better with these types of diagrams if you give it better context and examples of what you expect in terms of the aesthetics and design. For development, we recommend that you use GitHub Copilot in VS Code or Claude Code in a terminal.

Example with Claude Code

Claude Code has a user interface, but you can also use it from within the terminal.

Adding this MCP server to Claude Code is a bit more complex, as it requires some specific configuration. You can copy the command below and run it in PowerShell or Bash, replacing the path with the correct path to the powerbi-modeling-mcp executable.

claude mcp add --transport stdio powerbi-modeling-mcp --env PBI_MODELING_MCP_CLIENT_ID=ea0616ba-638b-4df5-95b9-636659ae5121 -- "C:\pathto\powerbi-modeling-mcp.exe" --start

The following is an example of Claude Code using the semantic model MCP server. Here, the user asks Claude Code to add and make some changes to DAX measures:

In this example, you can see that moving measures between tables can be destructive; it results in an error in a visual. You can also see that the agent makes certain DAX assumptions based on existing patterns in the model; you can't rely on it to write "good" DAX or Power Query unless you provide sufficient instructions or examples in your prompt or context. This is also an example of a bad use-case for agentic development; it would've been faster, cheaper, and probably easier to make and adjust the measures in Power BI Desktop or Tabular Editor.

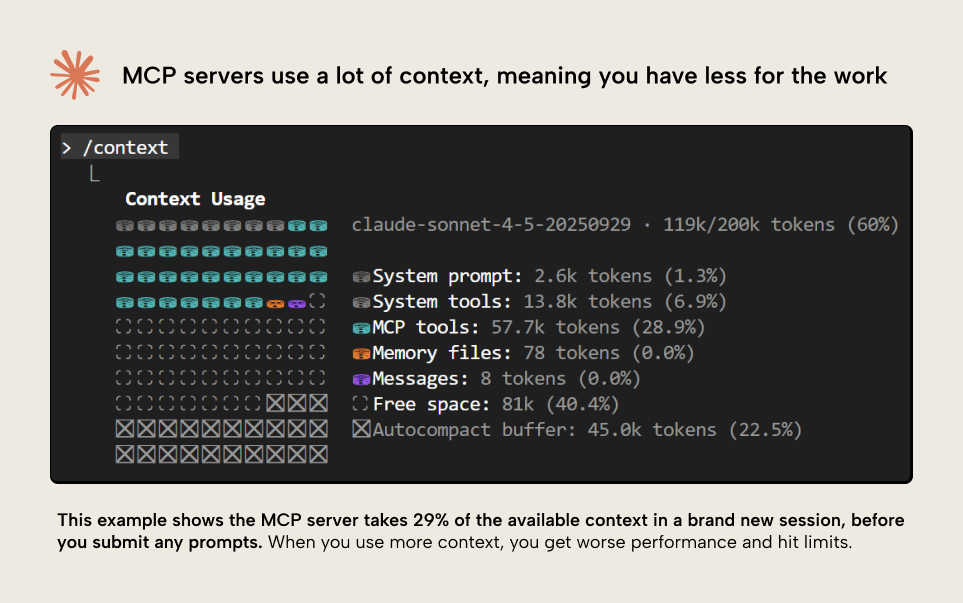

As you saw in the demonstration, Claude Code is especially useful since it gives you a visual breakdown of what's happening with your context window. In the following diagram, you can see that 60% of the context is already consumed in a brand new session without any prompts. 29% of the context is taken up by the Power BI modelling MCP server, alone:

This is one of the biggest challenges with MCP servers, so we highlight it early. Later in the article, we'll explain in more detail why this is challenging.
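To put the percentages above into token terms, here is a small worked calculation. The 200,000-token window is an assumption for illustration only; the 60% and 29% figures come from the session shown above.

```python
def context_budget(window_tokens: int, used_percent: int, mcp_percent: int) -> dict:
    """Split a context window into used, MCP server, other overhead, and remaining tokens."""
    used = window_tokens * used_percent // 100
    mcp_server = window_tokens * mcp_percent // 100
    return {
        "used": used,
        "mcp_server": mcp_server,
        "other_overhead": used - mcp_server,
        "remaining": window_tokens - used,
    }


# Assumed window size of 200,000 tokens; percentages from the session above.
budget = context_budget(200_000, used_percent=60, mcp_percent=29)
print(budget)
```

Under this assumption, the MCP server alone would account for 58,000 tokens, and only 80,000 tokens would remain for the actual work before the first prompt is sent.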

How to get started

To use this approach, you need to have an MCP server that supports semantic modelling operations like the Power BI MCP server. You also need an AI application that supports the model context protocol (an MCP client, like those shown above). Here’s a full list:

- Your model can be in one of the three formats:

  - Open in Power BI Desktop.

  - Saved in the PBIP format or saved as local metadata files.

  - Published to a Power BI workspace. Note that here you might need a Power BI Pro license, or a supported workspace license mode for XMLA endpoint, depending on the MCP server that you’re using.

- A coding agent that you can use, like GitHub Copilot in agent mode, Claude Code, or Gemini CLI. Most coding agents require a paid subscription. We recommend Claude Code, which we feel has the best features and developer experience for agentic development in Microsoft Fabric, in Power BI, and in general.

- Ideally, you should also have some way to track and manage changes to your model. For a basic introduction to this topic, see this article from the Microsoft documentation.

Differences with other approaches

Agents that use MCP servers differ from direct metadata modifications or use of CLIs and scripts in several ways, which we discuss below.

Benefits

Here are a few of the unique benefits of this approach:

- Tools for agents: MCP servers excel at providing agents with tools and a way to tell them when and how to use them. Well-designed tools allow users to “plug-and-play” much more easily, and users don’t have to invest as much in context or instruction files to get agents to use the right tools in the right scenarios. For semantic models, this is useful for the agent to know when to use a tool to modify measures and when to query a model, depending on the prompt and context.

- Portable context and prompts: In addition to tools, MCP servers can also provide resources and prompts. Resources are basically context files which the agent can automatically read and use, while prompts are re-usable prompts for certain repeatable tasks. For semantic models, this is especially useful with newer DAX functionality like functions and custom calendars.

- Fewer hallucinations: Unlike modifying model metadata directly, this approach can restrict the agent to changes that the TOM supports. This depends on the available tools and how they are designed, but it helps to ensure that the agent only makes valid changes to a semantic model, and it reduces the likelihood and potential impact of hallucinations.

- Relatively easy to set up and use: MCP servers are designed for portability, so you can easily set them up to use with your tool or model of choice. This is especially useful if you work on local models (via applications like LMStudio) for enhanced security or privacy.

In summary, MCP servers are a useful way to give agents tools with straightforward setup. They’re hard to design well, but when they are, they can be very powerful and convenient. However, they’re not without their challenges, too.

Challenges

Here are a few caveats and considerations for using MCP servers both with semantic models in Power BI and in general:

- Visibility on and reverting changes: Unlike when you make changes to model metadata, directly, with MCP servers you can’t easily see what’s been changed. You can only cross-reference the agent’s output with what happens in Power BI or Tabular Editor. Furthermore, you can’t undo or revert your changes. If you don’t set up source control, you risk losing substantial time when (not if) your agent goes “off the rails”. This is especially problematic when you first start using the MCP server or using it with a new model, and don’t yet have good instructions or prompts set up.

- Possibly opaque or rigid tool design: When you use an MCP server, you’re constrained by how the developer designed the tools. That means that tool descriptions, code, or responses might be suboptimal for your scenario, or might not support it at all. Unless the MCP server is open source, you likely can’t see or modify these tools, either. This can be especially challenging when you have a unique configuration, design, or even storage mode like composite models. In this scenario, you should work on the model metadata directly or use the Tabular Editor 2 CLI.

- Context bloat: A known problem with MCP servers is that they fill up the context window of your session. The more context you use, the worse an agent performs at a given task. It also leads to sessions hitting their limits too abruptly. This is especially problematic for large “monolithic” MCP servers that have too broad a scope, and are better broken up into modular, scenario-specific components to use when necessary.

- They still require instructions: MCP servers are so convenient because they provide a lot of context out-of-the-box about how and when to use tools. However, that doesn’t mean that the agent will use these tools correctly or effectively. For instance, if you ask the agent to parameterize connection strings in Power Query, it might have tools to create shared expressions or modify M partitions, but that doesn’t mean it will know how to write M code in the patterns you want or need to use. To do this, you have to provide specific instructions about what to change and how to change it. This is especially important when it comes to changing DAX and Power Query expressions.

- Security challenges: MCP servers are a new and evolving technology. There are many security concerns or vulnerabilities that aren’t yet well-addressed or understood.

As you can see, the tax you pay for convenient use of MCP servers comes with other inconveniences and considerations, too.

TIP

In our experience, the Power BI modelling MCP server from Microsoft performs very well. Despite using a lot of your available context, this is a very well-made tool to facilitate agentic development.

When you might use this approach

MCP servers are preferable to alternative approaches for agentic development in the following scenarios:

- Bulk changes and refactoring: MCP server tools like those in the Power BI MCP provide an elegant way to make multiple changes at once. Set multiple properties, or create multiple measures; these kinds of operations are easy to set up and use. This is particularly useful when you want to create new perspectives and translations for your model.

- Tools provide useful, unique functionality: MCP servers work best when they solve specific problems. Small, elegant MCP servers that help you tackle scenarios with specialized tools can be very beneficial.

- You can’t or don’t want to use a command-line agent: Agents that live in the command line work best with other CLI tools and APIs. However, if you are only using tools with a user interface like GitHub Copilot in agent mode or Claude Desktop, then you might find the MCP server delivers a better user experience.

- Most generic scenarios: The powerbi-modeling-mcp is currently the best tool to use for agentic development of semantic models in typical scenarios. If you have more specific needs or requirements, you can complement it with other tools.

When you might not use this approach

There are a few cases when you might consider alternatives over MCP servers.

- Tools don’t match your scenario or use-case: As we mentioned above, MCP server tools can be rigid or inflexible and might not fit what you need. If you need more flexibility, it might be better to work directly with model metadata or to use programmatic interfaces.

- Single or one-off changes: It’s usually faster and cheaper to make these changes with traditional tools, or by getting the agent to work directly on the model metadata.

- Repetitive and deterministic changes that are scriptable: When you use AI with model metadata or MCP servers, you will get different results with the same prompt. If you want the same result each time, it’s better to get the AI to write and execute a script. An example is creating time intelligence measures, a date table, or applying common DAX and data model patterns.

- CI/CD pipelines: MCP servers add unnecessary complexity and security concerns. If you’re considering using asynchronous agents as part of CI/CD pipelines, have them directly read and work with metadata. Don’t use agents at all if you don’t need them. In general, CI/CD is about reproducibility; LLMs are inherently not very reproducible…

- You are on a Mac or Linux: Currently, the libraries that allow programmatic interaction with the semantic model have Windows dependencies that prevent them from working on a Mac or Linux machine. This might change in the future, though.

In summary, when to use or not use an MCP server really depends on your specific scenario. You need to understand the available tools, how they work, and what their limitations are.
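The point about repetitive, deterministic changes can be illustrated with a short script. This is a minimal sketch that generates the DAX text for a few common time intelligence measures; the measure naming pattern and the `'Date'[Date]` column are assumptions, and how you then write the expressions to the model (for example via a script or metadata files) is out of scope here.

```python
def time_intelligence_measures(base_measure: str, date_column: str = "'Date'[Date]") -> dict:
    """Return {measure name: DAX expression} for a base measure; same input, same output."""
    ref = f"[{base_measure}]"
    prior_year = f"CALCULATE({ref}, SAMEPERIODLASTYEAR({date_column}))"
    return {
        f"{base_measure} YTD": f"CALCULATE({ref}, DATESYTD({date_column}))",
        f"{base_measure} PY": prior_year,
        f"{base_measure} YoY": f"{ref} - {prior_year}",
    }


# Hypothetical base measure name, for illustration only.
measures = time_intelligence_measures("Total Sales")
for name, expression in measures.items():
    print(f"{name} = {expression}")
```

Unlike a prompt, running this script twice with the same inputs always produces the same measures, which is why scripts suit this kind of change better than an MCP server.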

Tips for success with agents using MCP servers

MCP servers can be really powerful when you use them correctly. Here are a few tips from our own experience, but a lot of our tips from the previous article still apply (like curating context, combining with other approaches, and so forth):

- Save models in PBIP format and regularly commit changes: This is true with any approach, but especially so here, so that you can easily view and revert your changes. If you use the MCP server directly on a model open in Power BI Desktop, remember to save in Power BI Desktop so that the changes appear in the model metadata.

- Only enable them when you need them: As we mention above, MCP servers take up a lot of the context window. Be very restrictive of what servers and tools you have active in each session to avoid hitting limits or decaying performance.

- Examine tool inputs and responses: When you first use an MCP server, make sure you check what it’s receiving as an input, and what it provides as a response. Understanding MCP server tools is imperative to making sure that you use them well, and that you don’t misunderstand their capabilities, falling victim to LLM hallucinations. A specific example of this is if you ask the MCP server to create a field parameter; if it doesn’t know what a field parameter is, it might just create a calculated table with the incorrect configuration.

- Iterate and improve context: Like with all approaches, MCP servers get better as you improve context. When you notice the agent using tools in the wrong circumstance or providing inputs you don’t like, you can improve instructions to get better results.

In summary, MCP servers are a convenient tool for agentic development, but you should use them situationally and carefully. Only use MCP servers from trusted authors and ensure that you know what the tools do before you use them.
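One way to examine tool inputs and responses is to keep a simple audit record of each call. The sketch below assumes that you can capture the `tools/call` request and its result as dictionaries (for example from your client's logs); the result shape with `content` and `isError` follows the MCP specification, while the tool name, arguments, and response text are hypothetical.

```python
def summarize_tool_call(request: dict, response: dict) -> dict:
    """Reduce an MCP tools/call request and its response to an audit record."""
    params = request.get("params", {})
    result = response.get("result", {})
    text_parts = [
        item.get("text", "")
        for item in result.get("content", [])
        if item.get("type") == "text"
    ]
    return {
        "tool": params.get("name"),
        "arguments": params.get("arguments", {}),
        "is_error": bool(result.get("isError", False)),
        "response_text": "\n".join(text_parts),
    }


# Hypothetical exchange: the user asked for a field parameter, but the
# agent called a generic calculated table tool instead.
request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {
        "name": "example_create_calculated_table",
        "arguments": {"name": "Measure Selector"},
    },
}
response = {
    "jsonrpc": "2.0",
    "id": 7,
    "result": {
        "content": [{"type": "text", "text": "Created table 'Measure Selector'."}],
        "isError": False,
    },
}

record = summarize_tool_call(request, response)
print(record)
```

A record like this makes it easier to spot when a call succeeds technically but does something other than what you asked for.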

In conclusion

MCP servers provide a valuable and interesting way for AI to modify semantic models. The biggest strength of an MCP server is in its portability and how it can make the model aware of the tools and instruct the model when to use them. However, MCP servers also have some big weaknesses in how much context they use and in the limitations of their tools. Nonetheless, when you understand the tools and apply them in the right scenarios, MCP servers can be a really powerful way to accelerate development, especially the Power BI modelling MCP from Microsoft.

The next article in this series discusses the third and final approach, where agents interface with models programmatically. This approach is similar to MCP servers, except that the model doesn’t use pre-defined tools. Rather, it writes and executes code, arbitrarily. This approach is maximally flexible but can come with additional risks and need for context.